by Barbara Fischkin

Round about St. Patrick’s Day, in the spring of 1984, my Jewish mother, Ida Fischkin, learned that within months I would marry Jim Mulvaney.

She offered enthusiastic congratulations, as did my father. They loved Jim. We had been living together for a few years.

“Please tell me the honeymoon is in Aruba,” my mother said, sounding hopeless. She had already guessed where we were headed: Ireland, setting up shop in Dublin then establishing an outpost in Belfast to cover the raging civil war.

Jim and I exchanged vows on June 17, underneath a proper Jewish chuppah on the outdoor deck of an Irish-style pub-cum-restaurant on the shores of New York’s Jamaica Bay. In less than two weeks we would move across the Atlantic. Jim and I were both newspaper reporters and this ancient Celtic-versus-Anglo story had raged again in recent years, although it bored most American editors. Jim pitched our bosses at Newsday with a suggestion of an Ireland Bureau to appeal to the large number of potential readers who claim Irish ancestry – 6 percent of residents of New York City, double that on Long Island.

During the weeks leading up to our wedding, I realized how fortunate I was to have a mother who, like me, appreciated the value of risk and adventure, particularly if these included happy endings. As a six-year-old, in the midst of an Eastern European pogrom, my mother had saved her own life, astonishing my grandparents, who thought she was dead. A different sort of mother could have made those days of frantic preparations hellish instead of compelling.

There was, though, one small problem.

Do birds have a sense of beauty? Do they, or does any animal, have an aesthetic sense? Do they respond to beauty in ways we might find familiar – with a feeling of awe, suffused with attraction, mixed with joy? Do they seek it out, and perhaps even work to fashion it from their surroundings? Darwin thought so, and made the idea the subject of his second major work, The Descent of Man (1871). In it, he outlined a mechanism by which the sense of beauty might, by shaping mating preferences, work to shape the form of insects, fish, and birds in a manner parallel to the better known process of natural selection. The resulting beauty of form, sound, or movement, Darwin argued, is neither the result of intelligent design, nor a necessary indication of superior fitness. Beauty, as

In a recent interview in the

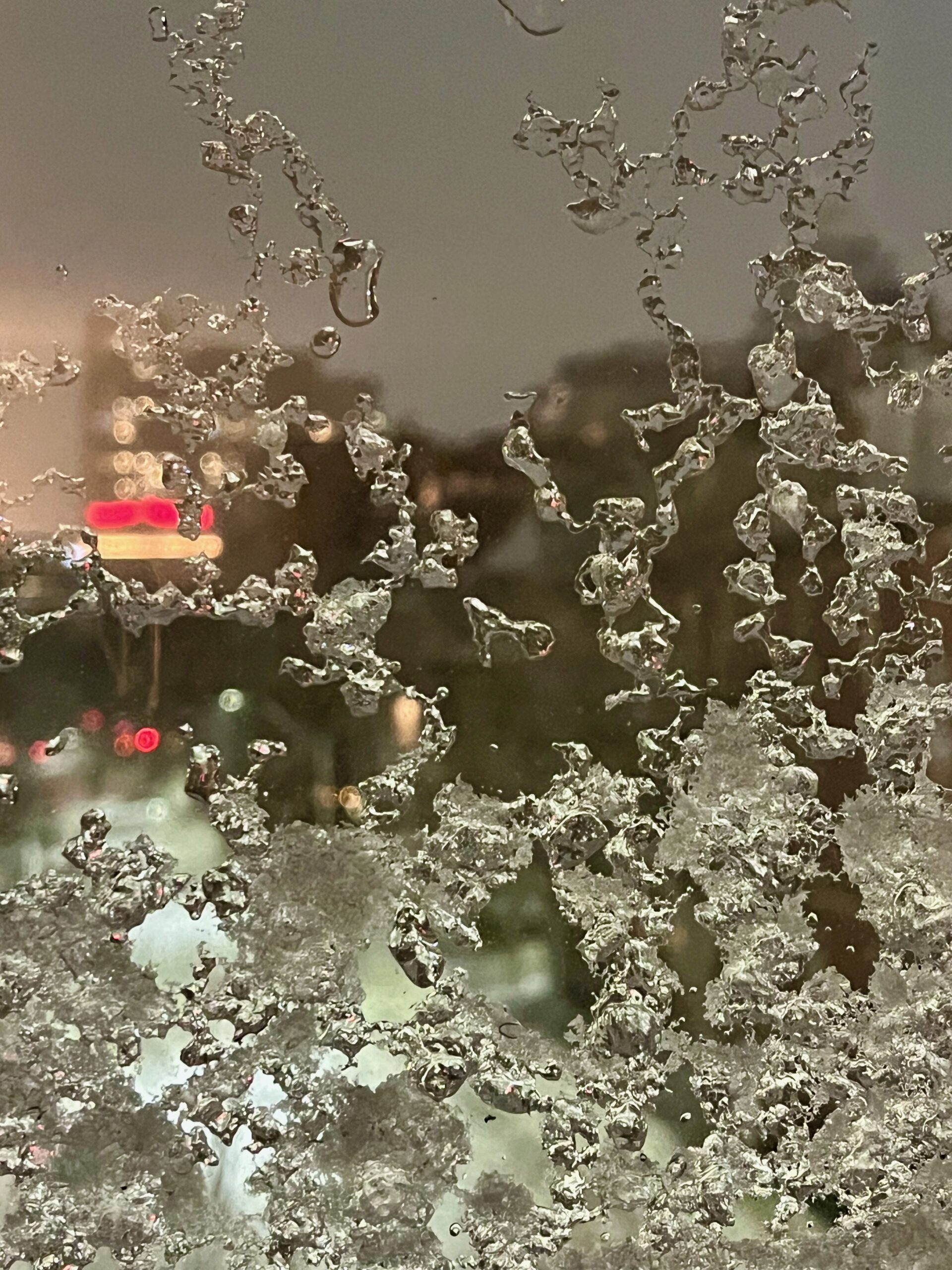

Sughra Raza. Blizzard in Fractals. Boston, February, 2026.

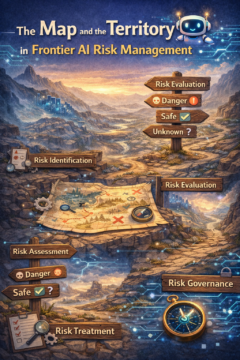

Over the past year, there has been significant movement in AI risk management, with leading providers publishing safety frameworks that function as AI risk management. The problem is that these frameworks fall short of proper risk management when compared with established practice in other high-risk industries.

C. Thi Nguyen’s The Score: How to Stop Playing Somebody Else’s Game (Penguin, 2026;

How can we possibly approach the world today without being in a constant state of rage? Philosopher and psychoanalyst Josh Cohen’s