by Ashutosh Jogalekar

There are physicists, and then there are physicists. There are engineers, and then there are engineers. There are government advisors, and then there are government advisors.

And then there’s Dick Garwin.

Richard L. Garwin, whom his friends and colleagues called Dick, has died at the age of 97. He was a man whose soul imbibed technical brilliance and whose life threaded the narrow corridor between Promethean power and principled restraint. A scientist of prodigious intellect and unyielding moral seriousness, he pursued a career that spanned the detonations of the Cold War and the dimming of the Enlightenment spirit in American public life. He was, without fanfare or affectation, the quintessential citizen-scientist, at once a master of equations and a steward of consequence. When you needed objective scientific advice on virtually any technological or defense-related question, you asked Dick Garwin, even when you did not like the advice. Especially when you did not like it. And yet he was described as “the most influential scientist you have never heard of,” legendary in the world of physics and national security but virtually unknown outside it.

He was born in Cleveland in 1928 to Jewish immigrants from Eastern Europe and quickly distinguished himself as a student whose mind moved with the inexorable clarity of first principles. His father was an electronics technician and high school science teacher who moonlighted as a movie projectionist. As a young child Garwin was already taking things apart, with the promise of reassembling them. By the age of 21 he had earned his Ph.D. under Enrico Fermi, who, legend has it, once remarked that Garwin was the only true genius he had ever met. This was not idle flattery. After Fermi, Dick Garwin might be the closest thing we have had to a universal scientist, one who understood the applied workings of every branch of physics and technology. There was no system whose principles he did not comprehend, whether mechanical, electrical or thermodynamic; no machine that he could not fix; no calculation that fazed him. Just two years after getting his Ph.D., Garwin would design the first working hydrogen bomb, a device of unprecedented and appalling potency, whose test, dubbed “Ivy Mike,” would usher in a new and even graver chapter of the nuclear age.

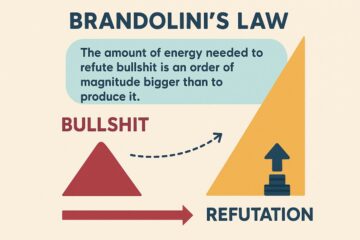

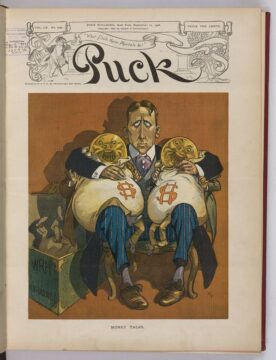

As atrocious, appalling, and abhorrent as Trump’s countless spirit-sapping outrages are, I’d like to move a little beyond adumbrating them and instead suggest a few ideas that make them even more pernicious than they first seem. Underlying the outrages are his cruelty, narcissism and ignorance, made worse by the fact that he listens to no one other than his worst enablers. On rare occasions, these are the commentators on Fox News, who are generally indistinguishable from the sycophants in his cabinet, “A Parliament of Whores,” to use the title of P.J. O’Rourke’s hilarious book. (No offense intended toward sex workers.) Stalin is reputed to have said that a single death is a tragedy, a million deaths a statistic. Paraphrasing it, I note that a single mistake, insult, or consciously false statement by a politician is, of course, a serious offense, but 25,000 of them is a statistic. Continuing with a variant of another comment often attributed to Stalin, I can imagine Trump asking, “How many divisions do CNN and the NY Times have?”

Sughra Raza. Seeing is Believing. Vahrner See, Südtirol, October 2013.

It’s a ritual now. Every Sunday morning I go into my garage and use marker pens and sticky tape to make a new sign. Then from noon to one I stand on a street corner near the Safeway, shoulder to shoulder with two or three hundred other would-be troublemakers, waving my latest slogan at passing cars.

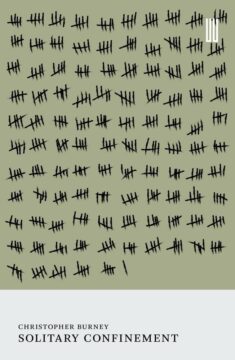

I first started reading Jon Fosse’s Septology in a bookstore. I read the first page and found myself unable to stop, like a person running on a treadmill at high speed. Finally I jumped off and caught my breath. Fosse’s book, a collection of seven novels published as a single volume, is one sentence long. I knew this when I picked it up, but it wasn’t what I expected. I had envisioned something like Proust or Henry James: a sentence with thousands and thousands of subordinate clauses, each one nested in the one before it, creating a sort of dizzying vortex that challenges the reader to keep track of things but that, when examined closely, proves to be grammatically perfect. Fosse isn’t like that. The sentence is, if we want to be pedantic about it, one long comma splice. It could easily be split into thousands of sentences simply by replacing the commas with periods. What this means is that the book is not difficult to read; it’s actually rather easy, and once you get warmed up, just as on a long run, you settle into the pace and rhythm of the words and begin to move at a steady speed, your breathing and reading equilibrated.