by Barbara Fischkin

Eight weeks have passed since I wrote about my Cousin Bernie—and how, posthumously, he adds to my own memories of him. As readers may remember from my last offering, Cousin Bernie’s widow, Joan Hamilton Morris, sent me the pages of an incomplete memoir her late husband pecked out on a vintage typewriter in an adult education class he took after retiring as a university professor of psychology and mathematics.

If Cousin Bernie were alive today he would be 102. Those pages of memoir chapters, some more worn than others, remain in a place of honor, tucked into a corner of my own writing table. I feel that “Cousin Joanie,” as I call his widow, sent them to me for safekeeping—and for presentation to the world. Originally I thought I could do this in one or two chapters. A deeper read has revealed a surprising amount of insight. Here is my fourth take on my cousin, who fascinates me despite his evergreen persona as a nerdy, chubby, lost boy from Brooklyn. There will be a fifth offering and probably a sixth. If it seems Bernie is taking over my memoir, I am fine with this. I have written a lot about my mother’s side of the family. Now it is my father’s family’s turn. And what better way to bring them into the light, than through Cousin Bernie?

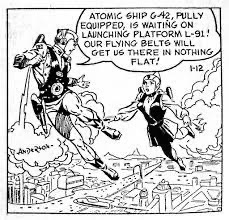

What follows is Cousin Bernie, Part Four. I’ve only edited it slightly, less so I think than his Adult Education teacher. So far, my minor editing has provoked no lightning bolts from the heavens. I have discovered another Bernie, a child who believed he could fly like his comic strip hero.

“I was eight years old, and my sister, Gertie, six. We had just been transplanted to Bridgeport, Connecticut from Brooklyn, New York. My father, a home painter and decorator, felt that he could do better in terms of finding work in a smaller city.

“Here, a whole new set of stimuli presented itself: A Benjamin Franklin stove in the kitchen, a gas water heater in the bathroom, which had to be lighted so we could bathe, and a coal bin on the back porch. There was a scuttle for bringing in coal for the stove. The Saturday Sabbath meal preparations—gefilte fish, stewed chicken and beef, challahs, cookies and pies—began as early as Wednesday night. The stove was banked and allowed to go out on Saturday night.

“We slept as the stove died down and, on Sunday mornings, my sister and I would climb into my parents’ big bed. Pop got up wearing his union suit, put on a robe, removed the ashes, kindled a new fire in the stove and came back to bed with us for my reading of the Sunday comics. My sister, Gertie, pointed to each speech bubble, as I read them. It seemed to me that Andy Gump’s nose or chin was strange looking. I disliked it when the bubbles were long. But it was here in the Sunday comics that I encountered the adventures of ‘Buck Rogers in the Twenty-Fifth Century.’

When I think about AI, I think about poor Queen Elizabeth.

In October last year, Charles Oppenheimer and I wrote a

have instrumental value. That is, the value of a given technology lies in the various ways in which we can use it, no more, and no less. For example, the value of a hammer lies in our ability to make use of it to hit nails into things. Cars are valuable insofar as we can use them to get from A to B with the bare minimum of physical exertion. This way of viewing technology has immense intuitive appeal, but I think it is ultimately unconvincing. More specifically, I want to argue that technological artifacts are capable of embodying value. Some argue that this value is to be accounted for in terms of the