by Ashutosh Jogalekar

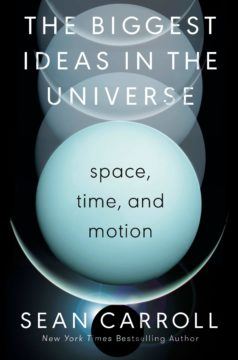

Physicists writing books for the public have faced a longstanding challenge. Either they can write purely popular accounts that explain physics through metaphors and pop culture analogies but then risk oversimplifying key concepts, or they can get into a great deal of technical detail and risk making the book opaque to most readers without specialized training. All scientists face this challenge, but for physicists it’s particularly acute because of the mathematical nature of their field. Especially if you want to explain the two towering achievements of physics, quantum mechanics and general relativity, you can’t really get away from the math. It seems that physicists are stuck between a rock and a hard place: include math and, as the popular belief goes, risk cutting their readership in half with every equation, or exclude math and deprive readers of a deeper understanding. The big question for a physicist who wants to communicate the great ideas of physics to a lay audience without entirely skipping the technical detail is thus: is there a middle ground?

Over the last decade or so there have been a few books that have in fact tried to tread this middle ground. Perhaps the most ambitious was Roger Penrose’s “The Road to Reality”, which tried to encompass, in more than 800 pages, almost everything about mathematics and physics. Then there’s the “Theoretical Minimum” series by Leonard Susskind and his colleagues which, in three volumes (and an upcoming fourth one on general relativity), tries to lay down the key principles of all of physics. But both Penrose’s and Susskind’s volumes, as rewarding as they are, require a substantial time commitment on the part of the reader, and both at some point become comprehensible only to specialists.

If you are trying to find a short treatment of the key ideas of physics that is genuinely accessible to pretty much anyone with a high school math background, you would be hard-pressed to do better than Sean Carroll’s upcoming “The Biggest Ideas in the Universe”. Since I have known him a bit on social media for a while, I will refer to Sean by his first name. “The Biggest Ideas in the Universe” is based on a series of lectures that Sean gave during the pandemic. The current volume is the first in a set of three and deals with “space, time and motion”. In short, it aims to present all the math and physics you need to know for understanding Einstein’s special and general theories of relativity.


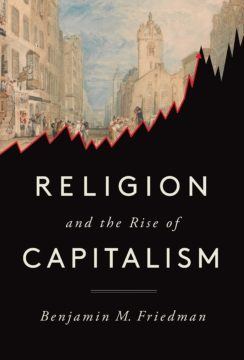

Religion has always had an uneasy relationship with money-making. A lot of religions, at least in principle, are about charity and self-improvement. Money does not directly figure in seeking either of these goals. Yet one has to contend with the stark fact that over the last 500 years or so, Europe and the United States in particular acquired wealth and enabled a rise in people’s standard of living to an extent that was unprecedented in human history. And during the same period, while religiosity in these countries varied, there is no doubt that religion played a role in people’s everyday lives, especially in Europe, whose centrality would be hard to imagine today. Could the rise of religion in first Europe and then the United States somehow be connected with the rise of money and especially the free-market system that has brought not just prosperity but freedom to so many of these nations’ citizens? Benjamin Friedman, who is a professor of political economy at Harvard, explores this fascinating connection in his book “Religion and the Rise of Capitalism”. The book is a masterclass on understanding the improbable links between the most secular country in the world and the most economically developed one.

The Doomsday Scenario, also known as the Copernican Principle, refers to a framework for thinking about the death of humanity. One can read all about it in a recent

On a whim I decided to visit the gently sloping hill where the universe announced itself in 1964, not with a bang but with ambient, annoying noise. It’s the static you saw when you turned on your TV, or at least used to back when analog TVs were a thing. But today there was no noise except for the occasional chirping of birds, the lone car driving off in the distance and a gentle breeze flowing through the trees. A recent trace of rain had brought verdant green colors to the grass. A deer darted into the undergrowth in the distance.