by David J. Lobina

The hype surrounding Large Language Models remains unbearable when it comes to the study of human cognition, no matter what I write in this Column about the issue – doesn’t everyone read my posts? I certainly do sometimes.

Indeed, this is my fourth successive post on the topic, having already made the points that Machine/Deep Learning approaches to Artificial Intelligence cannot be smart or sentient, that such approaches are not accounts of cognition anyway, and that when put to the test, LLMs don’t actually behave like human beings at all (where? In order: here, here, and here).[i]

But, again, no matter. Some of the overall coverage on LLMs can certainly be ludicrous (a covenant so that future, sentient computer programs have their rights protected?), and even delirious (let’s treat AI chatbots as we treat people, with radical love?), and this is without considering what some tech charlatans and politicians have said about these models. More to the point here, two recent articles from some cognitive scientists offer quite the bloated view regarding what LLMs can do and contribute to the study of language, and a discussion of where these scholars have gone wrong will, hopefully, make me sleep better at night.

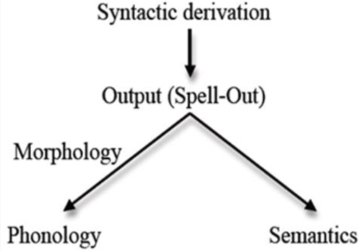

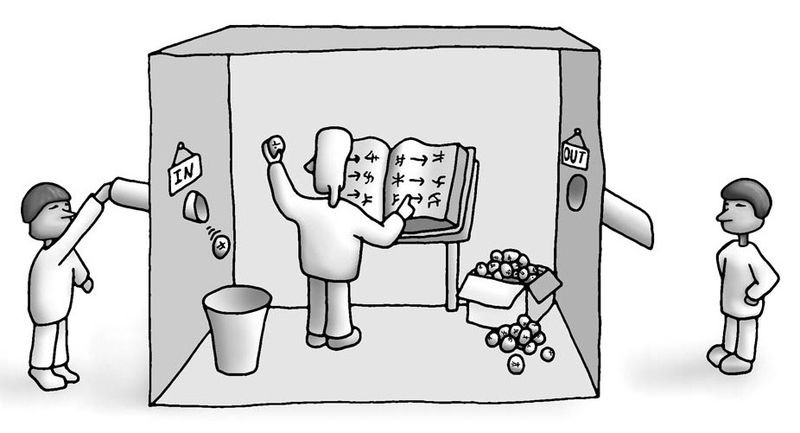

One Pablo Contreras Kallens and two colleagues have it that LLMs constitute an existence proof (their choice of words) that the ability to produce grammatical language can be learned from exposure to data alone, without the need to postulate language-specific processes or even representations, with clear repercussions for cognitive science.[ii]

And one Steven Piantadosi, in a wide-ranging (and widely raging) book chapter, claims that LLMs refute Chomsky’s approach to language, and in toto no less, given that LLMs are bona fide (his choice of words) theories of language; these models have developed sui generis representations of key linguistic structures and dependencies, thereby capturing the basic dynamics of human language and constituting a clear victory for statistical learning in so doing (Contreras Kallens and co. get a tip of the hat here), and in any case Chomskyan accounts of language are not precise or formal enough, cannot be integrated with other fields of cognitive science, have not been empirically tested, and moreover…(oh, piantala).[iii]

Despite many people’s apocalyptic response to ChatGPT, a great deal of caution and skepticism is in order. Some of it is philosophical, some of it practical and social. Let me begin with the former.

How intelligent is ChatGPT? That question has loomed large ever since

Environmentalists are always complaining that governments are obsessed with GDP and economic growth, and that this is a bad thing because economic growth is bad for the environment. They are partly right but mostly wrong. First, while governments talk about GDP a lot, that does not mean that they actually prioritise economic growth. Second, properly understood, economic growth is a great and wonderful thing that we should want more of.

Sughra Raza. Untitled. April 1, 2023.

According to my father, David Mamet once said that his scripts are about “men in confined space.” I have been unable to verify this quote, but if you look on the internet, there’s an awful lot of writing about Mamet and “confined space.” In particular, I suspect the origin of this apocryphal statement may be
