by John Allen Paulos

Despite many people’s apocalyptic response to ChatGPT, a great deal of caution and skepticism is in order. Some of it is philosophical, some of it practical and social. Let me begin with the former.

Whatever its usefulness, we naturally wonder whether ChatGPT and its near relatives understand language and, more generally, whether they demonstrate real intelligence. The Chinese Room thought experiment, a classic argument put forward by the philosopher John Searle in 1980, somewhat controversially maintains that the answer is No. It is a refinement of arguments of this sort that go back to Leibniz.

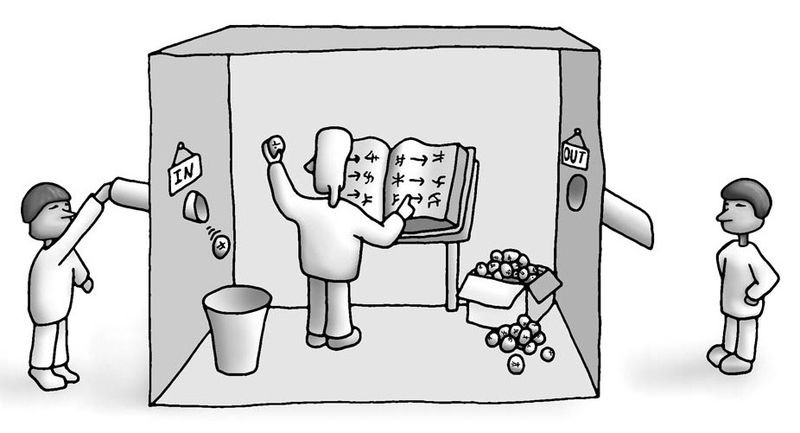

In his presentation of the argument (very roughly sketched here), Searle first assumes that research in artificial intelligence has, contrary to fact, already managed to design a computer program that seems to understand Chinese. Specifically, the computer responds to inputs of Chinese characters by following the program’s humongous set of detailed instructions to generate outputs of other Chinese characters. It’s assumed that the program is so good at producing appropriate responses that even Chinese speakers find it indistinguishable from a human Chinese speaker. In other words, the computer program passes the so-called Turing test, but does it really understand Chinese?

The next step in Searle’s argument asks us to imagine a man completely innocent of the Chinese language in a closed room, perhaps seated at a large desk. He is supplied with an English-language version of the same elaborate set of rules and protocols the computer program itself uses for correctly manipulating Chinese characters to answer questions put to it. Moreover, the man is directed to use these rules and protocols to respond to questions written in Chinese that are submitted to him through a mail slot in the wall of the room. Someone outside the room would likely be quite impressed with the man’s responses and with what seems to be his understanding of Chinese. Yet all the man is doing is blindly following the rules that dictate how the sequences of symbols in the questions should be responded to in order to yield answers. Clearly the man could process the Chinese questions and produce answers to them without understanding any of the Chinese writing. Finally, Searle drops the mic by maintaining that neither the man nor the computer has any knowledge or understanding of Chinese.

So, does Searle’s experiment show that machine knowledge and intelligence are impossible? To answer that would require diving into a huge literature: extended analyses of tacit assumptions, nuances, and special cases, not to mention definitions of consciousness and intentionality. We would need to understand exactly how meaning might arise from rules or, equivalently, how semantics might arise from syntax. We would need to understand how a machine could know or believe something. If a statement is true, would the machine believe it, whatever that means? And if it’s false, would the machine disbelieve it?

As noted, these are philosophical questions and don’t necessarily undermine the transformative power and usefulness of ChatGPT. They do force us to consider whether it can truly be said to possess intelligence and consciousness or whether it is “only” an impressively sophisticated tool for processing and manipulating vast amounts of data in natural languages at lightning speed.

Of course, the vast amounts of data ingested from books, articles, websites, etcetera, on which the machine learning is based present other, more practical problems. For example, does this human data infect and pollute ChatGPT’s responses with some of our worst traits? A rather simplistic illustration: asked to complete “man is to doctor as woman is to x,” the system might generate “nurse” for x from the data. Likewise, given “shopkeeper is to man as x is to woman,” x might turn out to be “housewife.” As Brian Christian observes in his book The Alignment Problem, there are many more serious problems baked almost invisibly into the voluminous data on which the machine learning is based. Some of these issues involve the criminal justice system, political systems, and other pillars of society.

The lack of human oversight in such systems should alarm people. Relevant is another thought experiment that should give pause to our headlong embrace of ChatGPT and AI in general. It is the so-called paperclip maximizer problem devised by philosopher Nick Bostrom, which illustrates that without human filters and oversight, employing algorithms and systems might easily result in catastrophic consequences.

Consider, for example, an artificial intelligence system designed to maximize paperclips. It seems innocuous enough, and the system will do so, of course, but it may also work to increase its own intelligence to enable it to produce more and more paperclips. The system wouldn’t necessarily value common sense or general intelligence but would focus myopically on whatever means it could develop to optimize its ability to produce more paperclips. Eventually, the system might conceivably go through an “intelligence explosion” that would dwarf human intelligence. It would devise increasingly clever ways to maximize the number of paperclips, ultimately filling the whole planet and beyond with them. Clearly, the monomaniacal nature of such an algorithm, innocently devised to maximize paperclips, would not take into account human values, interests, and pursuits. It would be completely alien to us. This is, of course, only a thought experiment, but like all thought experiments, it is intended to stimulate thought. In this case, the thought is of the future perils of artificial intelligence.

The bottom line is that there are many such cautionary tocsins that should induce in us a wariness about the introduction and pervasive use of ChatGPT and its descendants. We shouldn’t mindlessly and immediately try to switch from a horse and carriage to a Porsche powered by artificial intelligence. If we do proceed so recklessly, our whole civilization may crash.

***

John Allen Paulos is a Professor of Mathematics at Temple University and the author of A Mathematician Reads the Newspaper, Innumeracy, and a new book, Who’s Counting – Uniting Numbers and Narratives with Stories from Pop Culture, Puzzles, Politics, and More.