From Harvard Magazine:

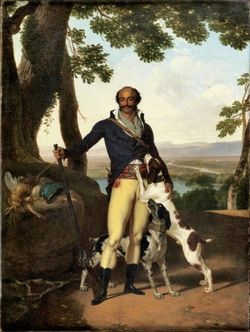

He was the son of a black slave and a renegade French aristocrat, born in Saint-Domingue (now Haiti) when the island was the center of the world sugar trade. The boy’s uncle was a rich, hard-working planter who dealt sugar and slaves out of a little cove on the north coast called Monte Cristo—but his father, Antoine, neither rich nor hard-working, was the eldest son. In 1775, Antoine sailed to France to claim the family inheritance, pawning his black son into slavery to buy passage. Only after securing his title and inheritance did he send for the boy, who arrived on French soil late in 1776, listed in the ship’s records as “slave Alexandre.” At 16, he moved with his father, now a marquis, to Paris, where he was educated in classical philosophy, equestrianism, and swordsmanship. But at 24, he decided to set off on his own: joining the dragoons at the lowest rank, he was stationed in a remote garrison town where he specialized in fighting duels. The year was 1786. When the French Revolution erupted three years later, the cause of liberty, equality, and fraternity gave him his chance. As a German-Austrian army marched on Paris in 1792 to reimpose the monarchy, he made a name for himself by capturing a large enemy patrol without firing a shot. He got his first officer’s commission at the head of a band of fellow black swordsmen, revolutionaries called the Legion of Americans, or simply la Légion Noire. In the meantime, he had met his true love, an innkeeper’s daughter, while riding in to rescue her town from brigands.

If all this sounds a bit like the plot of a nineteenth-century novel, that’s because the life of Thomas-Alexandre Davy de la Pailleterie—who took his slave mother’s surname when he enlisted, becoming simply “Alexandre (Alex) Dumas”—inspired some of the most popular novels ever written. His son, the Dumas we all know, echoed the dizzying rise and tragic downfall of his own father in The Three Musketeers and The Count of Monte Cristo. Known for acts of reckless daring in and out of battle, Alex Dumas was every bit as gallant and extraordinary as D’Artagnan and his comrades rolled into one. But it was his betrayal and imprisonment in a dungeon on the coast of Naples, poisoned to the point of death by faceless enemies, that inspired his son’s most powerful story.

More here.