Kwame Anthony Appiah in IAI:

“And now what will become of us without barbarians? / Those people were a kind of solution.”

—C. P. Cavafy, “Waiting for the Barbarians” (1898)

Perhaps you know this poem? Constantine Cavafy was a writer whose every identity came with an asterisk, a quality he shared with Italo Svevo. Born two years after Svevo, he died only a few years after him. Cavafy was a Greek who never lived in Greece. A government clerk of Eastern Orthodox Christian upbringing in a tributary state of a Muslim empire that was under British occupation for most of his life, he spent his evenings on foot, looking for pagan gods in their incarnate, carnal versions. He was a poet who resisted publication, save for broadsheets he circulated among close friends; a man whose homeland was a neighborhood, and a dream. Much of his poetry is a map of Alexandria overlaid with a map of the classical world—modern Alexandria and ancient Athens—in the way that Leopold Bloom’s Dublin neighborhood underlies Odysseus’s Ithaca. No single sentence captures this Alexandrian genius better than E. M. Forster’s evocation of him as “a Greek gentleman in a straw hat, standing absolutely motionless at a slight angle to the universe.” And I conjure Cavafy, here, at journey’s end, because I want to persuade you that he is representative precisely in all his seeming anomalousness.

Poems, like identities, never have just one interpretation. But in Cavafy’s “Waiting for the Barbarians” I see a reflection on the promise and the peril of identity. All day the anticipation and the anxiety build as the locals wait for the barbarians, who are coming to take over the city. The emperor in his crown, the consuls in their scarlet togas, the silent senate and the voiceless orators wait with the assembled masses to accept their arrival. And then, as evening falls, and they do not appear, what is left is only disappointment. We never see the barbarians. We never learn what they are actually like. But we do see the power of our imagination of the stranger. And, Cavafy hints, it’s possible that the mere prospect of their arrival could have saved us from ourselves.

More here.

In his new book, “In Pursuit of Civility”, British historian Keith Thomas tells the story of one of the most benign developments of the past 500 years: the spread of civilised manners. In the 16th and 17th centuries many people behaved like barbarians. They delighted in public hangings and torture. They stank to high heaven. Samuel Pepys defecated in a chimney. Josiah Pullen, vice-principal of Magdalen Hall, Oxford, urinated while showing a lady around his college, “still holding the lady fast by the hand”. It took centuries of painstaking effort – sermons, etiquette manuals and stern lectures – to convert them into civilised human beings.

Whatever else it will be, Tuesday will be a relief. We will finally find out where we are in the surreal dystopia of the last two years. We will see, in a tangible way, what America now is.

How could this man have been elected to the highest office in the land? And how can Trump not only remain in office but, for the moment at least, appear to stand a reasonable chance of being renominated and even re-elected?

The two women who inflicted the Myers-Briggs Type Indicator on the world were less concerned with fighting evil than with optimizing daily life, according to Emre. There is Katharine, the Briggs in the equation. Unlike the men who worked in academic laboratories on both coasts, Katharine worked in “a cosmic laboratory of baby training”: her own home. She took meticulous notes on the training of her only child, Isabel (later the Myers of the MBTI), a girl who would read Pilgrim’s Progress by five despite rarely attending school. When one neighbor criticized her methods, Katharine, who wrote about child-rearing for magazines, included the neighbor’s daughter Mary in an article called “Ordinary Theodore and Stupid Mary.”

As Allen W. Wood observed in 1981, while it is easy to write an above-average book on

Czechoslovakia would have been a hundred years old last Sunday, and Prague spent the weekend celebrating. I’ve been to better birthday parties. The gloomy weather didn’t help – it didn’t just rain on the parades, it poured – and the centennial narratives, never simple, were complicated further by the fact they were commemorating a state that dissolved itself in 1993.

In the winter of 1994, a young man in his early twenties named Tim was a patient in a London psychiatric hospital. Despite a happy and energetic demeanour, Tim had bipolar disorder and had recently attempted suicide. During his stay, he became close with a visiting US undergraduate psychology student called Matt. The two quickly bonded over their love of early-nineties hip-hop and, just before being discharged, Tim surprised his friend with a portrait that he had painted of him. Matt was deeply touched. But after returning to the United States with portrait in hand, he learned that Tim had ended his life by jumping off a bridge.

Observing political and economic discourse in North America since the 1970s leads to an inescapable conclusion: The vast bulk of legislative activity favours the interests of large commercial enterprises. Big business is very well off, and successive Canadian and U.S. governments, of whatever political stripe, have made this their primary objective for at least the past 25 years.

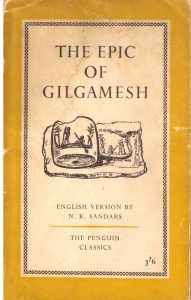

By chance, I chose as holiday reading (awaiting my attention since student days) The Epic of Gilgamesh, a Penguin Classics bestseller, part of the great library of Ashur-bani-pal that was buried in the wreckage of Nineveh when that city was sacked by the Babylonians and their allies in 612 BCE. Gilgamesh is a surprisingly modern hero. As King, he accomplishes mighty deeds, including gaining access to the timber required for his building plans by overcoming the guardian of the forest. But this victory comes at a cost; his beloved friend Enkidu opens by hand the gate to the forest when he should have smashed his way in with his axe. This seemingly minor lapse, like Moses’ minor lapse in striking the rock when he should have spoken to it, proves fatal. Enkidu dies, and Gilgamesh, unable to accept this fact, sets out in search of the secret of immortality, only to learn that there is no such thing. He does bring back from his journey a youth-restoring herb, but at the last moment even this is stolen from him by a snake when he turns aside to bathe. In due course, he dies, mourned by his subjects and surrounded by a grieving family, but despite his many successes, what remains with us is his deep disappointment. He has not managed to accomplish what he set out to do.

On his journey, Gilgamesh meets the one man who has achieved immortality, Utnapishtim, survivor of a flood remarkably similar, even in its details, to the Flood in the Bible. Reading of this sent me back to Genesis, and hence to two other books,

Comparing Hebrew with Cuneiform may seem like a suitable gentlemanly occupation for students of ancient literature, but of no practical importance. On the contrary, I maintain that what emerges is of major contemporary relevance.

“They all go the same way. Look up, then down and to the left,” the EMT said. “Always.”

In the Tian Shan mountains, home of the legendary snow leopard, where errant wisps of mist float with the speed of scurrying ghosts, there is a climbers’ cemetery. Himalayan griffon vultures and golden eagles are often sighted here, though my attention is completely arrested by a blue whistling thrush alighting on a rock: its plumage, its slender, seemingly weightless frame, and its long-drawn, ventriloquist song remind me of the fairies of Alif Laila who were turned into birds by demons inhabiting barren mountains.

On a recent windy morning, walking past the Soldiers’ and Sailors’ Monument on West 89th Street in New York City, seeing the flag at half mast, just days before the

Ronald Reagan was elected President in 1980 with an attitude and agenda similar to Trump’s—to restore America to its rightful place where “someone can always get rich.” His administration arrived in Washington firm in its resolve to uproot the democratic style of feeling and thought that underwrote FDR’s New Deal. What was billed as the Reagan Revolution and the dawn of a New Morning in America recruited various parties of the dissatisfied right (conservative, neoconservative, libertarian, reactionary and evangelical) under one flag of abiding and transcendent truth—money ennobles rich people, making them healthy, wealthy and wise; money corrupts poor people, making them ignorant, lazy and sick.