Alison Flood in The Guardian:

The Polish novelist and activist Olga Tokarczuk and the controversial Austrian author Peter Handke have both won the Nobel prize in literature.

The choice of Tokarczuk and Handke comes after the Swedish Academy promised to move away from the award’s “male-oriented” and “Eurocentric” past.

Tokarczuk, an activist, public intellectual, and critic of Poland’s politics, won the 2018 award, and was cited by the committee for her “narrative imagination that with encyclopedic passion represents the crossing of boundaries as a form of life”. She is a bestseller in her native Poland, and has become much better known in the UK after winning the International Booker prize for her sixth novel Flights. The Nobel committee’s Anders Olsson said her work, which “centres on migration and cultural transitions”, was “full of wit and cunning”.

Picking Handke as 2019’s winner, cited for “an influential work that with linguistic ingenuity has explored the periphery and the specificity of human experience”, has already provoked controversy. The Ambassador of Kosovo to the US, Vlora Çitaku, called the decision “scandalous … a preposterous and shameful decision”.

More here.

There is no agreed criterion to distinguish science from pseudoscience, or just plain ordinary bullshit, opening the door to all manner of metaphysics masquerading as science. This is ‘post-empirical’ science, where truth no longer matters, and it is potentially very dangerous.

A parade of American presidents on the left and the right argued that by cultivating China as a market — hastening its economic growth and technological sophistication while bringing our own companies a billion new workers and customers — we would inevitably loosen the regime’s hold on its people. Even Donald Trump, who made bashing China a theme of his campaign, sees the country mainly through the lens of markets. He’ll eagerly prosecute a pointless trade war against China, but when it comes to the millions in Hong Kong who are protesting China’s creeping despotism over their territory,

There are many debutante balls in Texas, and a number of pageants that feature historical costumes, but the Society of Martha Washington Colonial Pageant and Ball in Laredo is the most opulently patriotic among them. In the late 1840s, a number of European American settlers from the East were sent to staff a new military base in southwest Texas, a region that had recently been ceded to the United States after the Mexican-American War. They found themselves in a place that was tenuously and unenthusiastically American. Feeling perhaps a little forlorn at being so starkly in the minority, these new arrivals established a local chapter of the lamentably named Improved Order of Red Men. (Members of the order dressed as “Indians,” called their officers “chiefs,” and began their meetings, or “powwows,” by banging a tomahawk instead of a gavel.)

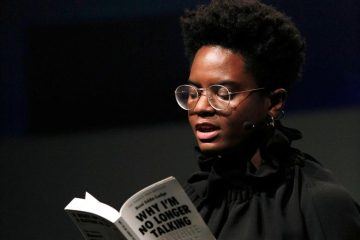

So why are publishers suddenly bending over backwards to fill their schedules with people of colour? Interviewing a range of writers, publishers and other industry professionals throws up complex and sometimes disturbing answers. It’s certainly not only about capitalizing on a trend. Sharmaine Lovegrove, publisher of the Little, Brown imprint Dialogue Books, tells me that it’s partly the result of an evolving culture of shame and embarrassment: “Agents are asking people who have a little bit of a social media presence to come up quite quickly with ideas that they can sell to publishers who are desperate because no list wants to be all white, as it has been”. With the dread of being associated with the hashtag #publishingsowhite, the simplest and cheapest way of adding black names to their lists is to put out anthologies packed with malleable first-time authors and one or two seasoned writers seeded through the collections. (A – presumably – more expensive way is to recruit Stormzy, who launched the #Merky Books imprint at Penguin Random House last year.)

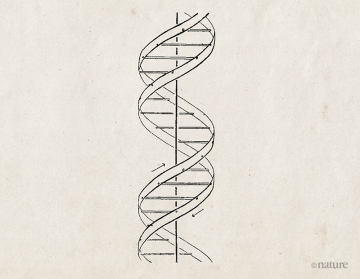

In May 1949, a year after the establishment of the state of Israel, the American Jewish literary critic Leslie Fiedler published in Commentary an essay about the fundamental challenge facing American Jewish writers: that is, novelists, poets, and intellectuals like Fiedler himself. Entitled “What Can We Do About Fagin?”—Fagin being the Jewish villain of Charles Dickens’s novel Oliver Twist—the essay shows that the modern Jew who adopts English as his language is joining a culture riddled with negative stereotypes of . . . himself. These demonic images figure in some of the best works of some of the best writers, and form an indelible part of the English literary tradition—not just in the earlier form of Dickens’ Fagin, or still earlier of Shakespeare’s Shylock, but in, to mention only two famous modern poets, Ezra Pound’s wartime broadcasts inveighing against “Jew slime” or such memorable lines by T.S. Eliot as “The rats are underneath the piles. The jew is underneath the lot” and the same venerated poet’s 1933 admonition that, in any well-ordered society, “reasons of race and religion combine to make any large number of free-thinking Jews undesirable.”

On 25 April 1953, James Watson and Francis Crick announced

The arrival of superhuman machine intelligence will be the biggest event in human history. The world’s great powers are finally waking up to this fact, and the world’s largest corporations have known it for some time. But what they may not fully understand is that how A.I. evolves will determine whether this event is also our last.

Our observable universe started out in a highly non-generic state, one of very low entropy, and disorderliness has been growing ever since. How, then, can we account for the appearance of complex systems such as organisms and biospheres? The answer is that very low-entropy states typically appear simple, and high-entropy states also appear simple, and complexity can emerge along the road in between. Today’s podcast is more of a discussion than an interview, in which behavioral neuroscientist Kate Jeffery and I discuss how complexity emerges through cosmological and biological evolution. As someone on the biological side of things, Kate is especially interested in how complexity can build up and then catastrophically disappear, as in mass extinction events.

In the iconic frontispiece to Thomas Henry Huxley’s Evidence as to Man’s Place in Nature (1863), primate skeletons march across the page and, presumably, into the future: “Gibbon, Orang, Chimpanzee, Gorilla, Man.” Fresh evidence from anatomy and palaeontology had made humans’ place on the scala naturae scientifically irrefutable. We were unequivocally with the animals — albeit at the head of the line. Nicolaus Copernicus had displaced us from the centre of the Universe; now Charles Darwin had displaced us from the centre of the living world. Regardless of how one took this demotion (Huxley wasn’t troubled; Darwin was), there was no doubting Huxley’s larger message: science alone can answer what he called the ‘question of questions’: “Man’s place in nature and his relations to the Universe of things.”

The nature of mind and consciousness had been one of the biggest and trickiest issues in philosophy for a century. Neuroscience was developing fast, but most philosophers resisted claims that it was solving the philosophical problems of mind. Scientists who trod on philosophers’ toes were accused of “scientism”: the belief that the only true explanations are scientific explanations and that once you had described the science of a phenomenon there was nothing left to say. Those rare philosophers like the Churchlands, who shared many of the enthusiasms and interests of these scientists, were even more despised. A voice in the head of Patricia Churchland told her how to deal with these often vicious critics: “outlast the bastards.”

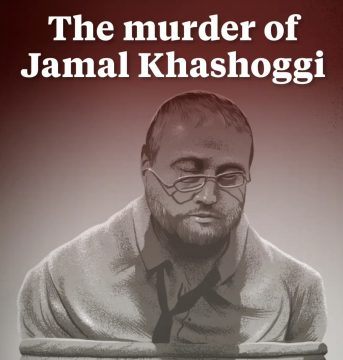

Machine translation, an enduring dream of A.I. researchers, was, until three years ago, too error-prone to do much more than approximate the meaning of words in another language. Since switching to neural machine translation, in 2016, Google Translate has begun to replace human translators in certain domains, like medicine. A recent study published in Annals of Internal Medicine found Google Translate accurate enough to rely on in translating non-English medical studies into English for the systematic reviews that health-care decisions are based on.

One year ago, the journalist Jamal Khashoggi walked into the Saudi Consulate in Istanbul and never walked out. In the months that followed, the facts of his disappearance and murder would emerge in fragments: an international high-tech spy game, a diabolical plot, a gruesome killing, and a preposterous cover-up reaching the highest levels of the Saudi government, aided by the indifference and obstinacy of the White House. Eventually those fragments came to comprise a macabre mosaic.