Morgan Meis in Slant Books:

I want to write about a certain kind of prose. It is the kind of prose that gets lost in itself. The kind of writing that tumbles head over heels and threatens to drown in its own wake. But not quite. The kind of prose that drowns completely is not so interesting. And the prose that never gets lost is not so interesting either. In my opinion. You’ve got to teeter around and stumble just at the edge there. In my opinion.

I’m thinking right now of Ingeborg Bachmann. She was a poet but she gave up poetry. She was a philosopher but she gave up philosophy. She was a person but she gave up being a person. Or tried to. I don’t know. She died in Rome in 1972 from the results of smoking a cigarette in bed. She was hooked, at that point, on pills and drink. So it was a kind of lazy, slow moving suicide. She couldn’t teeter on the edge anymore and so she just went over. Maybe.

She wrote a novel before she died. I guess you can call it a novel. Better, let’s say she wrote a piece of prose just a couple of years before that cigarette and the pills got her. It is called Malina. It is impossible to say what Malina is about and so I will not try. It isn’t about anything. It is about everything. It is the story of a love affair, but not really.

More here.

Imagine spilling a plate of food into your lap in front of a crowd. Afterwards, you might fix your gaze on your cell phone to avoid acknowledging the bumble to onlookers. Similarly, after disappointing your family or colleagues, it can be hard to look them in the eye. Why do people avoid acknowledging faux pas or transgressions that they know an audience already knows about?

It was February 20, 1939, two days before George Washington’s birthday. Fritz Kuhn, leader of the prominent pro-Nazi German American Bund, took the stage at Madison Square Garden. Behind him stood a towering 30-foot portrait of the first US president between giant swastikas, and around him twenty thousand rally-goers. Posters at this infamous Pro-America Rally promised a “mass-demonstration for true Americanism,” bringing National Socialist ideals to the American people. Participants waved American flags, marched to loud drum rolls, and heard pro-fascist speeches. Speakers urged the audience to embrace National Socialism, not merely to show support for Germany, but above all because it was fundamentally American.

The United States of America was founded on a conspiracy theory. In the lead-up to the War of Independence, revolutionaries argued that a tax on tea or stamps is not just a tax, but the opening gambit in a sinister plot of oppression. The signers of the Declaration of Independence were convinced — based on “a long train of abuses and usurpations” — that the king of Great Britain was conspiring to establish “an absolute Tyranny” over the colonies.

My daughter, a Pakistani American mother of two young children, married to an African American man of Jamaican parentage, is understandably excited about our new Veep-to-be, Kamala Harris. She keeps sending me articles by “desi” women like herself in relationships with Black men, who are excited about this new chapter dawning in American history.

During the mad rush of leaving, they had to find homes for 60 animals, a menagerie of horses, snakes, turtles, and various other creatures. Only two made the cut to tag along with them: their blue budgie parakeet, Bird, who went eerily still as they crossed the Sonoran Desert, and their Doberman, Kinch, who panted in the scorching heat.

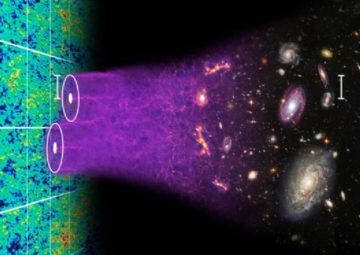

No matter how much we might try and hide it, there’s an enormous problem staring us all in the face when it comes to the Universe. If we understood just three things:

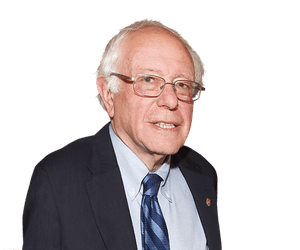

A record-breaking 4,000 Americans are now dying each day from Covid-19, while the federal government fumbles vaccine production and distribution, testing and tracing. In the midst of the worst pandemic in 100 years, more than 90 million Americans are uninsured or underinsured and can’t afford to go to a doctor when they get sick. The isolation and anxiety caused by the pandemic has resulted in a huge increase in mental illness.

In all its varied symptomology, menopause put me on intimate terms with what Virginia Woolf, writing about the perspective-shifting properties of illness, called “the daily drama of the body.” Its histrionics demanded notice.

Build a working coalition

Like his many previous literary endeavors, Turkish novelist Orhan Pamuk’s new book Orange is about Istanbul, or rather how the city appears in his eyes. The book consists of color photographs of the city’s streets, which Pamuk has been taking constantly for several years, always with the same technique and choice of motif. The result is a visual essay dedicated to the alleys and corners of his hometown. Over more than six decades of living in Istanbul, Pamuk has witnessed the constant transformation of the city, notably the gradual change from orange street lamps to white over the last ten years or so, not that the actual duration of the change matters. What does matter is the stark, visible disappearance of the yellow-hued fluorescent lamps, which brings a loss of the magical moments in a city landscape he dearly loves; the change is one he accepts only with some bitterness.

The daily press conferences from Downing Street since March 2020 underline the prominence given to epidemiologists, behavioural scientists and the medical profession in driving policy reaction to the Covid-19 crisis. This may be evidence of a welcome return of scientific expertise to the heart of government after a period when much of the population and elements of the government had, in the words of
