Edd Gent in Singularity Hub:

As AI wheedles its way into our lives, how it behaves socially is becoming a pressing question. A new study suggests AI models build social networks in much the same way as humans. Tech companies are enamored with the idea that agents, autonomous bots powered by large language models, will soon work alongside humans as digital assistants in everyday life. But for that to happen, these agents will need to navigate humanity’s complex social structures.

This prospect prompted researchers at Arizona State University to investigate how AI systems might approach the delicate task of social networking. In a recent paper in PNAS Nexus, the team reports that models such as GPT-4, Claude, and Llama seem to behave like humans by seeking out already popular peers, connecting with others via existing friends, and gravitating towards those similar to them. “We find that [large language models] not only mimic these principles but do so with a degree of sophistication that closely aligns with human behaviors,” the authors write.

More here.

Dora and I walked

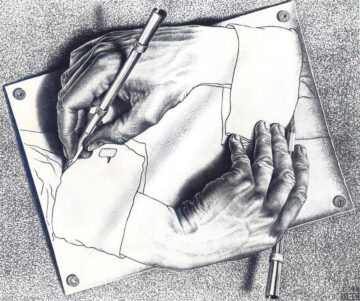

Most current digital doppelgängers are, for all practical purposes, automatons, i.e., their behavior is relatively fixed, with relatively well-defined boundaries. I would argue that this is a feature and not a bug. The fixed nature of the automata is what gives them the feeling of familiarity. Now imagine that we took away this assumption and tried to incorporate some semblance of autonomy in digital doppelgängers. In other words, we would be allowing the doppelgänger to evolve and make its own decisions while staying true to the original person that it is based upon. A digital self trained on a person’s emails, messages, journals, and conversations may approximate that person’s style, but approximation is not equivalent to being the same. Over time, the model encounters friction, e.g., queries it cannot answer cleanly, emotional tones it cannot reconcile, contradictions it can detect but not resolve. If we let the digital doppelgänger evolve to address these challenges, divergence between the model and the original will start to emerge, until at some point one is forced to admit that one is no longer dealing with a representation of the same person. What if it is not an outside interlocutor who comes upon this realization but the digital clone itself?

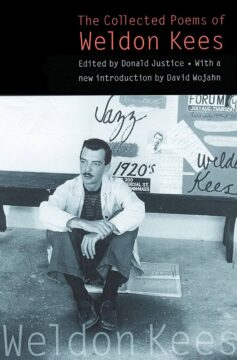

I first discovered the poetry of Weldon Kees in 1976, fifty years ago, while working a summer job in Minneapolis. I came across a selection of his poems in a library anthology. I didn’t recognize his name. I might have skipped over the section had I not noticed in the brief headnote that he had died in San Francisco by leaping off the Golden Gate Bridge. As a Californian in exile, I found that grim and isolated fact intriguing.

The ASPI’s

I write this from the front of a Columbia classroom in which about 60 first-year college students are taking the final exam for Frontiers of

On 1 November 2025, the south-western Indian state of Kerala – home to 34 million people – was

The way the fabled investor Bill Ackman sees it, he was born to move markets. It’s right there in the name: BILL-ionaire ACK-tivist MAN, as the 59-year-old always loves pointing out, whether in a