From The New Yorker:

In 1833, Ralph Waldo Emerson, a New England pastor who’d recently given up the ministry, delivered his first public lecture in America. The talk was held in Boston, and its nebulous-sounding subject (“The Uses of Natural History,” a title that conceals its greatness well) helped lay the groundwork for the nineteenth-century philosophy of transcendentalism. It also changed Emerson’s life. In a world that regarded higher thought largely as a staid pursuit, Emerson was a vivid, entertaining speaker—he lived for laughter or spontaneous applause—and his talk that day marked the beginning of a long career behind the podium. Over the next year, he delivered seven talks, Robert D. Richardson, Jr., tells us in his 1996 biography, “Emerson: The Mind on Fire.” By 1838, he was up to thirty. Then his career exploded. In the early eighteen-fifties, Emerson was giving as many as eighty lectures a year, and his reputation reached beyond the tight paddock of intellectual New England. The lecture circuit may not have shaped Emerson’s style of thinking, but it made that style a compass point of nineteenth-century American thought.

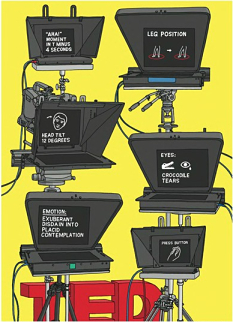

Whether Emerson has a modern heir remains an open question, but, more than a century after his death, the speaking trade he enjoyed continues to thrive. In this week’s issue of the magazine, I write about TED, a constellation of conferences whose style and substance have helped color our own moment in public intellectual life. As many media companies trading in “ideas” are struggling to stay afloat, TED has created a product that’s sophisticated, popular, lucrative, socially conscious, and wildly pervasive: the Holy Grail of digital-age production. The conference serves a king-making function, turning obscure academics and little-known entrepreneurs into global stars. And, though it’s earned a lot of criticism (as I explain in the article, some thinkers find TED to be narrow and dangerously slick), its “TED Talks” series of Web videos, which so far has racked up more than eight hundred million views, puts even Emerson to shame. Why? Trying to understand the appeal of TED talks, I found myself paying close attention to the video series’ distinctive style and form. Below, five key TED talks, and what they illuminate about the most successful lecture series ever given.

More here.

I took that photo at the Museum of the City of New York, at its exhibit on the history of New York’s banks. The quotation sure sounds a lot like some of the prose used to describe the excesses of the recent credit bubble. (Except maybe the “over ploughing” bit, now that we’re no longer an agrarian economy.)