Vijay Prashad in The Hindu:

On Sunday, August 12, Samir Amin died. With him went a generation of Egyptian Marxists who came of age in the time of Nasserism and departed with the world in tatters. Amin was born in 1931 in Cairo. He was doing his PhD in Paris when Gamal Abdel Nasser and the Free Officers overthrew the British-dominated monarchy in Egypt in 1952 and directed their country towards a path of non-alignment.

Amin’s thesis — in economics — was written while he was active in the French Communist Party. In the thesis, he thought hard about the problems of his native land and other countries despoiled by the colonial menace. For Amin, as with other dependency theorists, the Third World suffered from theft, plunder as well as deindustrialisation, and then unequal exchange. The policy space for the new Third World states — Nasser’s Egypt amidst them — was narrow. Emancipation would be difficult. It would take courage to break the yoke of monopoly capitalism, to rise from the penalty of colonialism and advance towards a necessary socialist future.

Amin, like others in his generation such as India’s Ashok Mitra and Brazil’s Celso Furtado, did not go immediately into the academy. He went home to Cairo, where he worked in Nasser’s Institute for Economic Management (1957-1960) and then to Bamako (Mali), where he worked as an adviser in the Ministry of Planning (1960-1963). Amin would talk fondly of these years, of the experience he had in trying to move an agenda for the development of his country and that of other African countries. The limitations set by the powerful countries of the world — the imperialist bloc led by the U.S. — and by the system of monopoly capitalism prevented any major breakthrough for states such as Egypt and Mali. Amin’s first book, published in the 1960s, was on the experience of development undertaken by Mali, Guinea and Ghana. It warned against any facile belief in progress. The unequal system in the world generated profits for the powerful and generated poverty for the weak.

More here.

Matthew Yglesias in Vox:

Grafton Tanner in The LA Review of Books:

Forty-five years after his death, Pablo Neruda’s poetry still has the power to astonish and appall, awaken and chill us and leave us shaking our heads in bafflement or respect. There is such breadth and profligate intelligence in the work, which ranges from opaque surrealism to bighearted populism to Pan-American epic to shocking propaganda, that one hardly knows where to place it in our era of thwarted emotions. Clearly it is not of our time. Given Neruda’s relations with women, it is certainly not of the time of #MeToo. The work will not always sit well beside a mature feminist consciousness, and of course it will not please ideologues who can’t tell one form of socialism from another. Neruda changed, and his circumstances changed. As a man he could be a monster of egotism and a courageous dissident, a purblind Stalinist and a Roosevelt democrat. His poetry incarnates these shifts and siftings and restless experiments. The past is a moving target. Poetry keeps it alive.

Susan Carlile explains with good judgement in her introduction why it is time for a new, full, critical biography of Charlotte Lennox, who, along with Eliza Haywood in particular, acts as a linking presence between the Aphra Behn-inflected, rackety experiments of Delariviere Manley, in the early eighteenth century, and the more solidly respectable achievements of Austen and Frances Burney. This biography, “the first to consider Lennox’s entire oeuvre and all her extant correspondence”, gives the fullest account of her life yet (following pioneering work by Miriam Small, Gustavus Maynadier and Philippe Séjourné), and conducts readers through all of her major works. It arrives as a handsome, substantial volume, complete with full scholarly apparatus and a proselytizing zeal of application that is both good to see and a little perplexing in tenor: Lennox is simultaneously “representative and exceptional, innovative and illustrative”. Lennox did have an “independent mind”, in whatever degree this was possible as one negotiated the path to Grub Street solvency, and Carlile was right to make this the book’s subtitle and leitmotif, rather than giving Lennox the “dangerous” or “powerful” mind she initially considered.

For the burgeoning fields of environmental humanities, it has long since become a commonplace notion that there isn’t really any such thing as “nature” or “wilderness”: both words used to connote real places—pristine and untouched places—but with the increasing knowledge that such a state of being likely never existed, the words come up empty. There are, however, new narratives: Through a case study of the global matsutake mushroom trade, anthropologist Anna Tsing shows compellingly in

Yuval Noah Harari’s

When Kathleen Morrison stepped onto the stage to present her research on the effects of stress on the brains of mothers and infants, she was nearly seven and a half months pregnant. The convergence was not lost on Morrison, a postdoctoral researcher at the University of Maryland School of Medicine, nor on her audience. If there ever was a group of scientists that would be both interested in her findings and unfazed by her late-stage pregnancy, it was this one. Nearly 90 percent were women. It is uncommon for any field of science to be dominated by women. In 2015, women received only 34.4 percent of all STEM degrees.1 Even though women now earn more than half of PhDs in biology-related disciplines, only 36 percent of assistant professors and 18 percent of full professors in biology-related fields are women.2 Yet, 70 percent of the speakers at this year’s meeting of the Organization for the Study of Sex Differences (OSSD), where Morrison spoke, were women. Women make up 67 percent of the regular members and 81 percent of trainee members of OSSD, which was founded by the Society for Women’s Health Research. Similarly, 68 percent of the speakers at the annual meeting of the Society for Behavioral Neuroendocrinology (SBN) in 2017 were women. In the field of behavioral neuroendocrinology, 58 percent of professors and 62 percent of student trainees are women. The leadership of both societies also skews female, and the current and recent past presidents of both societies are women.

The left has an obvious and pressing need to unperson him; what he and the other members of the so-called “

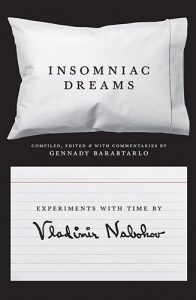

The authorship of Insomniac Dreams is surprisingly ambiguous. Yes, it contains the unpublished dream diary of Vladimir Nabokov – but that takes up just over sixty pages of a two hundred-odd page book. So what about the rest? The cover gives some indication. ‘Experiments with Time by Vladimir Nabokov’ is displayed on an index card – Nabokov’s favourite piece of stationery – that sits beneath a big white pillow emblazoned with the book’s title. Nabokov’s name is printed as a reproduction of his signature, as if the cover’s index card had been signed by Nabokov himself. And in a way it was; in its archival form, the dream diary consists of 118 index cards on which Nabokov recorded his dreams over about eighty days – from 14 October 1964 to 3 January 1965. Nestled modestly between the card and the pillow is the crux of the ambiguity – ‘Compiled, edited & with commentaries by Gennady Barabtarlo’.

IN HIS AUTOBIOGRAPHY

Framed as a book of ‘lessons’, his new work seems obviously inspired by such bestsellers as Jordan Peterson’s 12 Rules for Life. In the acknowledgements, he explains that he wrote it ‘in conversation with the public’, since many of the chapters originated in answer to ‘questions I was asked by readers, journalists and colleagues’. If somebody asks Harari a question, and he then gives a 5,000-word answer, does that genuinely count as a ‘conversation’? In any case, it would surely be more accurate, as well as less pretentious, to describe it as a compilation of previously published articles, many of which appeared in the Financial Times and The Guardian and on Bloomberg View.

Elephants are one of nature’s biggest improbabilities—literally. Their colossal bodies somehow manage to defy the odds: Despite the fact that their cells outnumber humans’ by a factor of

On October 10, 1953, V.S. Naipaul sent a telegram home to his family in Trinidad. At that time,

Humor me please, and consider the pun. Though some may quibble over the claim, the oft-maligned wordplay is clever and creative, writer

The case seems like a familiar story turned on its head:
