by John Hartley

With CAPTCHA the latest stronghold to be breached, following the heralded sacking of Turing’s temple, I propose a new standard for AI: The Tolkien test.

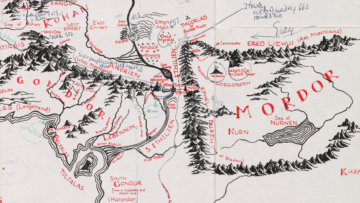

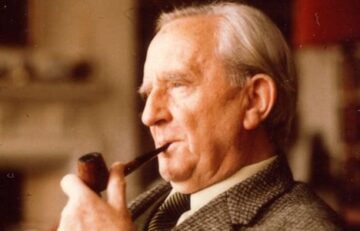

In this proposed schema, AI capability would be tested against what Andrew Pinsent terms ‘the puzzle of useless creation’. Pinsent, a leading authority on science and religion, asks, concerning Tolkien: “What is the justification for spending so much time creating an entire family of imaginary languages for imaginary peoples in an imaginary world?”

Tolkien’s view of sub-creation framed human creativity as an act of co-creation with God. Just as the divine imagination shaped the world, so too does human imagination—though on a lesser scale—shape its own worlds. This, for Tolkien, was not mere artistic play but a serious, borderline sacred act. Tolkien’s works, Middle-earth in particular, were not an escape from reality, but a way of penetrating reality in the most acute sense.

For Tolkien, fantasia illuminated reality insofar as it tapped into the metaphysical core of things. The artistic creation predicated on the creative imagination opened the individual to an alternate mode of knowledge, deeply intuitive and discursive in nature. Tolkien saw this creative act as deeply rational, not a fanciful indulgence. Echoing the Thomist tradition, he viewed fantasy as a way of refashioning the world that the divine had made, for only through the imagination is the human mind capable of reaching beyond itself.

The role of the creative imagination, then, is not to offer a mere replication of life but to transcend it. Here is the major test for AI, for in transcending life the imagination accesses what Tolkien called the “real world”: the world beneath the surface of things. As faith seeks enchantment, so too does art seek a kind of conversion of the imagination, guiding it towards the consolation of eternal memory, what Plato termed ‘anamnesis’.