by Jochen Szangolies

The Incoherence of the Philosophers (Tahâfut al-falâsifa) is an attempt by 11th century Sunni theologian and mystic al-Ghazâlî to refute the doctrines of philosophers such as Ibn Sina (often latinized Avicenna) or al-Fârâbî (Alpharabius), which he viewed as heretical for favoring Greek philosophy over the tenets of Islam. Al-Ghazâlî’s methodological principle was that in order to refute the assertions of the philosophers, one must first be well versed in their ideas; indeed, another work of his, Doctrines of the Philosophers (Maqāsid al-Falāsifa), gives a comprehensive survey of the Neoplatonic philosophy he sought to refute in the Incoherence.

The Incoherence, besides its other qualities, is noteworthy in that it is now regarded as a landmark work in philosophy itself. Ibn Rushd (Averroes), in response, penned the Incoherence of the Incoherence (Tahāfut al-Tahāfut), a turning point away from Neoplatonism to Aristotelianism.

In modern times, most allegations of ‘incoherence’ levied against philosophy come not from the direction of religion, but rather from scientists who allege that their discipline has made philosophy redundant, supplanting it with a better set of tools to investigate the world. Perhaps the best-known example of this is Stephen Hawking’s infamous assertion that ‘philosophy is dead’, but similar sentiments are readily found. While the proponents of such allegations have not always shown al-Ghazâlî’s methodological scrupulousness in engaging with the body of thought they seek to refute, these are still weighty charges by some of the leading intellectuals of the day.

A perennial favorite example of this alleged replacement of philosophy with science is the claim that, while philosophers have made little headway on the issue, science tells us unequivocally that there is no free will. The argument goes as follows: the future is determined by the laws of physics, the conditions of the past, and perhaps some random chance events thrown in, none of which is under our control, and hence neither is the future.

As I argued in my previous essay, matters are not quite that simple: the laws of physics may not provide rails which dictate our course, but rather, provide an account of the path actually taken, consequent to us walking it—they may describe rather than prescribe the way the world unfolds.

However, that essay left an important question open: even if our actions are not determined by physical law, they ought to be determined by something, or else, be random—but neither seems conducive to the idea of free choice. Free will, then, is alleged to be incoherent: an attempt to be both determinate and undetermined by anything in particular.

I propose that this apparent incoherence is due to a deeper issue: a necessary gap between the model and the thing it models (its object), due to the attempt to reduce the non-structural to mere structure (i. e. relations between things). This will need some unpacking. But first, it will be useful to have an explicit model of a ‘gappy’ world, where openings exist for the exertion of free will—should it not be an incoherent notion, that is.

Bohmian Micro-Occasionalism

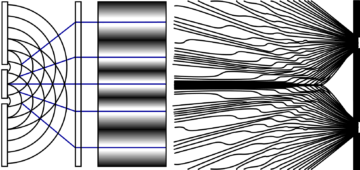

Bohmian mechanics, named after the physicist David Bohm, is an attempt to make sense of quantum mechanics by augmenting it with ‘hidden variables’. That is, where standard quantum mechanics asserts that a particle might not have a well-defined position, Bohmian mechanics adds a second layer of description that determines how particle positions must evolve in order to reproduce the observed phenomena of quantum mechanics. To the question of whether an electron is a wave or a particle, the Bohmian answer, then, is ‘yes’: the quantum wave function ‘guides’ the electron’s position in such a way as to recover the characteristic pattern of dark and light bands in the double-slit experiment, for example (see Fig. 2).

Bohmian mechanics is a deterministic theory; yet, quantum mechanics is characteristically indeterministic, only capable of producing statistical predictions. Bohmians get around this problem by appealing to the notion of the quantum equilibrium: the particle positions are, initially, distributed such as to replicate the statistics of quantum mechanics. Essentially, you can think of Bohmian mechanics as an algorithm that takes a random number as input, and spits out measurement results: what result is obtained in any given experiment is determined by the initial random seed, and our ignorance of that seed explains the statistical nature of our best predictions.

Generally, that random seed is proposed to be provided in the distant past, say, at the big bang. But there’s nothing in the theory—nothing in physics, as such—that requires this: the equations are such that the complete set of data at any given instant, plus the evolution equations, determine the particle positions at every other moment in time. That is, we need not feed the configuration in the past to the algorithm, but may equally well use the configuration in the future, to then calculate that of the past. This isn’t any different from calculating, say, the trajectory of a baseball: you can use the baseball’s velocity and position when it leaves the pitcher’s hand to determine its complete arc (ignoring complications such as wind and friction), but you can just as well measure it along any point of that arc, and calculate backwards to where it was, or forwards to where it’s going to be.
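The baseball case can be made concrete with a minimal sketch. It assumes a frictionless arc under constant gravity, and the numbers for the release state are made up for illustration; the point is only that the same rule runs forwards and backwards in time.

```python
G = 9.81  # gravitational acceleration in m/s^2 (assumed constant, no air resistance)

def evolve(state, dt):
    """Advance (x, y, vx, vy) by dt seconds under gravity alone; dt may be negative."""
    x, y, vx, vy = state
    return (
        x + vx * dt,
        y + vy * dt - 0.5 * G * dt * dt,
        vx,
        vy - G * dt,
    )

# Hypothetical state as the ball leaves the pitcher's hand: position (m), velocity (m/s).
release = (0.0, 1.8, 30.0, 5.0)

mid_flight = evolve(release, 0.4)      # the state measured somewhere along the arc
recovered = evolve(mid_flight, -0.4)   # the same law, run backwards from that point
```

Up to floating-point rounding, `recovered` agrees with `release`: no instant along the arc is privileged as the place where the data has to be supplied.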

But then, we can also take our algorithm, and turn it into an interactive version, a sort of game: rather than setting up everything at the beginning, or the middle, or the end, we can accumulate the input data, by making it sensitive to user interventions. So, rather than setting up a random number at the outset that determines how things will unfold, participants enter their choices—say, of a particular measurement to be performed—which then determine future outcomes.

Think of your experience playing a game: you enter certain commands to make your avatar execute various moves—go right, go left, jump, shoot, etc. Now, you could just as well pre-record these choices, and have the game ‘play itself’: supply both the game and your pre-recorded choices to an engine that then feeds these choices—the initial data—to the game, exactly recovering your performance. This is the way physics is usually viewed: the initial data comes from we-know-not-where, and then, the universe plays itself—causality unfolds unrelentingly.
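As a toy illustration of the difference between replaying and playing, here is a small sketch. The one-dimensional 'game', its commands, and the function names are invented for this example and stand in for no particular physical theory.

```python
def step(position, command):
    """Toy game rule (hypothetical): a command moves the avatar along a line."""
    moves = {"left": -1, "right": 1, "jump": 0}
    return position + moves[command]

def play(commands, start=0):
    """Feed commands to the game one at a time and record the avatar's path."""
    position = start
    history = [position]
    for command in commands:  # works for a stored list and for a live stream alike
        position = step(position, command)
        history.append(position)
    return history

recorded = ["right", "right", "jump", "left"]

replayed = play(recorded)           # all input fixed in advance: the game 'plays itself'
interactive = play(iter(recorded))  # the same input, handed over one choice at a time
```

Both runs trace out the same path. The rules of the game do not care whether the commands were fixed beforehand or arrive as the game unfolds.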

This sort of view, where causality connects events inexorably, was one of the ideas that al-Ghazâlî criticized. As a member of the Ashʿarite school of Sunni Islam, he followed the school’s eponymous founder, al-Ashʿari, in locating the cause of each event with God. In the Incoherence, he gives the example of a piece of cotton being burned, arguing that ‘the efficient cause of the combustion through the creation of blackness in the cotton and through causing the separation of its parts and turning it into coal or ashes is God’. Prefiguring Hume, he remarks that observation can never prove causality, but only ‘concomitant occurrence’.

Al-Ghazâlî thus was a proponent of occasionalism, a view in Christian Europe most strongly associated with 17th century philosopher and priest Nicolas Malebranche. In the pseudo-Bohmian picture sketched so far, then, any free agent acts in a kind of micro-occasionalist way, by completing the unfolding of the universe at certain junctures with free choices. This picture is just as compatible with current scientific knowledge as any other interpretation of quantum mechanics—it will yield all the same predictions.

However, the account so far leaves the choices, the inputs made by the player, essentially mysterious. The free will skeptic will, quite rightly, point out that I’ve only been describing half the world—I’ve only accounted for the game itself, but not the player. Surely, any description with any pretense at completeness must account for the player, just as well—and there, find them either governed by law, or acting randomly: and in any case, not freely.

But, as we will see, such a ‘complete’ account is necessarily a fantasy, and conclusions drawn from it without a solid foundation—there is no single and complete account of ‘the universe’, there is only a patchwork of local models, applicable in limited domains.

The Incomplete Participatory Universe

I’m not the first to articulate a view such as the one above. One of its most famous proponents was the great John Wheeler, coiner of colorful terms like ‘quantum foam’, ‘black hole’, or ‘wormhole’, who referred to it as the ‘participatory universe’: rather than coming into being at the big bang, all set up to just unwind into the future as if on rails, he viewed the creation of the universe as an ongoing process—wondering whether, instead of particles and fields, the universe is built from ‘elementary acts of observer-participancy’.

Wheeler pointed to the ‘quantum principle’ as the wellspring of these acts of universal co-creation, but to that principle itself, he attributed an even more startling source, identifying its ‘key idea’ as the notion of participation, and its point of origin as the ‘undecidable propositions of mathematical logic’.

That certain mathematical systems feature undecidable propositions—that is, propositions whose truth or falsity can’t be established within the system—was the landmark discovery of Kurt Gödel, who presented his findings at the Königsberg conference in 1930, to the great mystification of his audience, with the notable exception of Hungarian polymath John von Neumann. But what should a highly technical result in mathematical logic have to do with quantum mechanics?

I have tried to elucidate this story elsewhere (free preprint version), and will not go into great detail here; but the common thread is that there are certain things about any system that are left out by any model, any theory of that system. Gödel’s results are often glossed as showing that ‘mathematics is incomplete’, but that’s misleading. Consider a mathematical object, such as the set of natural numbers (the non-negative whole numbers 0, 1, 2, and so on). Its members, the numbers themselves, have certain properties; these properties are what we try to elucidate by means of a theory of the natural numbers, built on a certain set of axioms we presume they fulfill. Given these axioms, we can prove, say, that there are infinitely many prime numbers (numbers greater than one that are divisible only by themselves and one).

What Gödel now showed is that there are certain types of statements that can be clearly stated in the language of the axioms, pertaining to properties of the natural numbers, such that there is no way to prove them true or false starting with the axioms. But for the mathematical structure under discussion (what’s generally called the ‘standard model’ of the axioms—the natural numbers ‘as such’), these statements either hold true, or not. So it’s not mathematics that is incomplete—it’s that there can be no theory that fully describes all the properties of a given mathematical object.

The same sort of incompleteness—what I refer to as ‘epistemic horizon’—now also extends to physical theories—and, in a word, that’s the origin of the fact that sometimes, measurement outcomes in quantum mechanics are necessarily unpredictable: these outcomes are like the ‘undecidable propositions’ in that the theory can’t account for them either way.

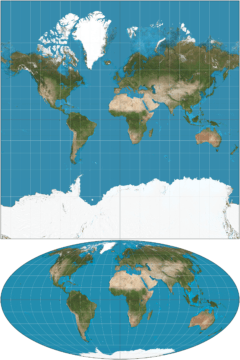

Thus, physics as well as mathematics has to contend with the fact that its theories don’t fully cover the ground they seek to describe. It’s like having a set of maps, each of which is accurate for a given region; but, try as you might, you can never combine these maps into one all-encompassing view of the terrain.

That this is the case is due to the fact that a model of a system (my go-to example is an orrery modeling the solar system), and hence, by extension, a theory as a kind of ‘virtual’ or ‘mental’ model, only ever captures the structural facts about a system. The wires, gears, and wheels of the orrery ensure that its little metal beads will always bear the same relationships to one another as the planets in the sky do; hence, reading off the state of the beads at any one time allows one to predict the state of the solar system at a corresponding moment.

These relationships—the structure—are what is captured by a theory, by a set of axioms. The undecidable propositions then are what the philosopher Galen Strawson has called the ‘structure-transcending properties’ of the system under consideration. (Their inability to be captured by any theoretical account also suggests that they might be responsible for the hardness of the famed ‘hard problem’ of consciousness, which I elaborate on here.) But how does this help with free will?

The Zeno Machine: To Infinity… And Beyond!

Consider the following device: a lamp, such that it is switched on, then switched off after one minute, switched on after another half minute, switched off again after a quarter, and so on. Now, in what state—on or off—will this lamp be after two minutes, having completed infinitely many on/off transitions?

Clearly, it must either be on, or off—those being the only two states available to it. Just as clearly, however, it can’t be on—because for every time it was turned on, it was turned off again. But for the same reason, it can’t be off! In this sense, its state after two minutes is undecidable.
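The arithmetic behind the lamp can be checked with a short sketch, using exact fractions so that no rounding creeps in. The function names are my own, and toggle number 0 is taken to be the initial switching-on.

```python
from fractions import Fraction

def switch_time(n):
    """Minutes elapsed at the n-th toggle (n = 0 is the initial switch-on)."""
    return sum((Fraction(1, 2**k) for k in range(n)), Fraction(0))

def lamp_state(n):
    """State right after the n-th toggle: on for even n, off for odd n."""
    return "on" if n % 2 == 0 else "off"

# Time still remaining until the two-minute mark after each of the first toggles.
remaining = [2 - switch_time(n) for n in range(6)]
states = [lamp_state(n) for n in range(6)]
```

The remaining time halves with every toggle, so the switch times close in on two minutes without any single toggle reaching it, while the sequence of states just keeps alternating and never settles on a final value.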

This device, known as Thomson’s lamp after the philosopher James Thomson, who devised this thought experiment in 1954, illustrates a subtle connection between undecidability and infinity, which can be made more definite. Suppose that, instead of a lamp, you have a computer, which carries out one step of computation after one minute, another step after a further half minute… You get the idea. Now, here’s the important part: this ‘infinite’ computer—the Zeno machine—can decide undecidable propositions!

A famous undecidable proposition, due to Alan Turing, the pioneer of modern computer technology, is the question of whether a given computation eventually stops and produces an output, or runs forever—the so-called ‘halting problem’. But with access to a Zeno machine, all you have to do is have it simulate a computer implementing that particular computation. Then, after two minutes, you either have an answer—in which case, the computation halts—or not—in which case it doesn’t. From a modeling perspective, undecidable properties are equivalent to infinitary powers.
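For contrast, here is a sketch of what an ordinary computer can do with only a finite budget of steps. The example programs and the helper `halts_within` are hypothetical names chosen for illustration; this is not a solution to the halting problem, only a picture of why a finite budget falls short.

```python
def collatz(n):
    """An example computation: it halts once n reaches 1."""
    while n != 1:
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        yield n  # one yield counts as one step of computation

def loop_forever():
    """An example computation that never halts."""
    while True:
        yield

def halts_within(computation, budget):
    """Return True if the computation finishes within `budget` steps, else None."""
    for _ in range(budget):
        try:
            next(computation)
        except StopIteration:
            return True   # we watched it stop
    return None           # budget exhausted: it may stop later, or never

generous = halts_within(collatz(27), 1000)
stingy = halts_within(collatz(27), 10)
endless = halts_within(loop_forever(), 10_000)
```

With any finite budget, the answer is either 'it halts' or 'don't know yet'; a definite 'it never halts' is out of reach. The Zeno machine differs exactly in that its budget runs through all infinitely many steps before the two minutes are up.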

Now, finally, this sort of power is also what’s needed to have your cake and eat it, regarding free will. The attempt to have the will will itself, in order to not have it determined by something else, lands us in infinite regress: we have to will₂ our will₁, meaning that we have to will₃ to will₂ our will₁, and so on. But that’s no problem for a Zeno machine: after two minutes, it will will whatever it will, without either being determined by something beyond itself, or random.

That’s not to say we have a sort of infinite computer in our heads. Recall that my central contention is that the undecidable properties, and hence, the need for apparently infinite powers, are just an artifact of how models fail to fully account for that which they model. Hence, the apparent regress exists only from the point of view of our attempt to provide a model for that which can’t be modeled. The allegation that free will is an incoherent notion is thus itself incoherent. Rather, it is the idea that models, such as scientific theories, could give an exhaustive account of their objects that is shown to be problematic.

It might be objected that this is a lot of arcane machinery brought in to try and give an account of free will. But, aside from the fact that the same machinery may shed light on perennial mysteries in quantum mechanics and the philosophy of consciousness, there is also the point, made already in the previous essay, that alternative explanations for how stuff happens—via causation, or randomly—lead to exactly the same problems. A causal chain must either go on forever into the past—which yields the same regress—or terminate in something acausal. One might hope that a random event could step in and happen ‘just so’, but in mathematics, randomness can be viewed as a consequence of undecidability, leading back to the same notions.

Whatever we propose accounts for how things happen, then, it seems we are unable to give a full account in theoretical—i. e. structural—terms; and free will is not any worse off than causality or chance.