Steven Poole reviews The Housekeeper and the Professor by Yoko Ogawa, over at his blog:

Number theory — what Gauss called “the queen of mathematics”, devoted to the study of numbers and their arcane interrelationships — does not perhaps sound like the most fruitful basis for a poignant domestic drama. And yet this novel, with its skilful admixture of tender atmospherics and stealthy education, has sold more than four million copies in its native Japan. Its unnamed characters suggest archetype or myth; its rapturous concentration on the details of weather and cooking provide a satisfyingly textured foundation.

The book is narrated by the housekeeper of the title, a single mother employed by an agency, who is assigned a new client. He lives in a dingy two-room apartment, and his suit jacket is covered with reminder notes he scribbles to himself. This is the Professor, a brilliant mathematician who suffered brain damage in a car accident in 1975, and since then cannot remember anything for more than an hour and 20 minutes at a time. “It’s as if he has a single, eighty-minute videotape inside his head,” the narrator explains, “and when he records anything new, he has to record over the existing memories.”

What he can remember is mathematics. He asks for her shoe size and telephone number, and reflects on the mathematical properties of each. Once he has drawn a picture of her and clipped it to his suit so that he is not altogether surprised to see her every day, he begins to induct her into number theory.

But more than that, it’s the world I live in. The buses in Harlem heave under the weight of wrecked bodies. New York will not super-size itself, so you’ll see whole rows in which one person is taking up two seats and aisles in which people strain to squeeze past each other. And then there are the middle-age amputees in wheelchairs who’ve lost a leg or two way before their time. When I lived in Brooklyn, the most depressing aspect of my day was the commute back home. The deeper the five train wended into Brooklyn, the blacker it became, and the blacker it became, the fatter it got. I was there among them–the blacker and fatter–and filled with a sort of shameful self-loathing at myself and my greater selves around me. One of the hardest things about being black is coming up dead last in almost anything that matters. As a child, and a young adult, I was lucky. Segregation was a cocoon brimming with all the lovely variety of black life. But out in the world you come to see, in the words of Peggy Olson, that they have it all–and so much of it. Working on the richest island in the world, then training through Brooklyn, or watching the buses slog down 125th has become a kind of corporeal metaphor–the achievement gap of our failing bodies, a slow sickness as the racial chasm.

more from Ta-Nehisi Coates at The Atlantic here.

Hyman Bloom’s name is usually associated with 1940s expressionism. He was discovered in 1942 by Museum of Modern Art curator Dorothy Miller, who launched his reputation by including thirteen of his paintings in one of her regular exhibitions of contemporary American art. In 1950, together with Arshile Gorky, Jackson Pollock, and Willem de Kooning, Bloom represented the United States at the Venice Biennale. By 1954, he was having a full retrospective at the Whitney Museum. The news of his death a few weeks ago, at the age of 96, must have come as a surprise to many: Hyman Bloom was still around? I visited him for the first time in 2000. He was 87 years old and I expected he might express some of the bitter feelings not uncommon among older artists who are no longer household names. Quite the contrary. I found a courteous, smart-looking, humorous, and highly intellectual man, who, after I started asking him a few questions about his career, recommended that I try LSD. He was only half-joking. In the mid-fifties he had volunteered to participate in an experiment on the effects of LSD on creativity. He called it “an eye-opener.” The experiment was only one of many avenues Bloom explored in search of spiritual adventures and new sources of inspiration to give pictorial form to his profound need for transcendence. For the same reason he participated in séances (though he admitted never seeing spirits) and immersed himself in Eastern philosophy, theosophy, and other esoteric systems of thought.

more from Isabelle Dervaux at artcritical here.

From The Washington Post:

On Saturdays when I was a boy of 14 or 15, it was my habit to ride my red Roadmaster bicycle to the various thrift shops in my home town. One afternoon, at Clarice's Values, I unearthed a beat-up paperback of Martin Gardner's “Fads and Fallacies in the Name of Science,” a collection of essays debunking crank beliefs and pseudoscientific quackery, with wonderful chapters about flying saucers, the hollow Earth, ESP and Atlantis. The book, Gardner's second, was originally published in 1952 under the title “In the Name of Science.” I probably read it around 1962 and found it — as newspaper critics of that era were wont to say — unputdownable.

In 1981 as a young staffer at The Washington Post Book World, I reviewed Gardner's “Science: Good, Bad and Bogus,” a kind of sequel to “Fads and Fallacies in the Name of Science,” and found it . . . unputdownable. A few years later, in 1989, I wrote about “Gardner's Whys & Wherefores,” a volume that opened with appreciations of wonderful, if slightly unfashionable, writers such as G.K. Chesterton, Lord Dunsany and H.G. Wells. I wrote at much greater length in 1996 about Gardner's so-called “collected essays” — really just a minuscule selection — gathered together as the nearly 600-page compendium “The Night Is Large.” There I called its author our most eminent man of letters and numbers.

More here.

From Scientific American:

The astronomical discoveries made by Galileo Galilei in the 17th century have secured his place in scientific lore, but a lesser known aspect of the Italian astronomer's life is his role as a father.

Galileo had three children out of wedlock with Marina Gamba—two daughters and a son. The two young girls, whether by their illegitimate birth or Galileo's inability to provide a suitable dowry, were deemed unfit for marriage and placed in a convent together for life.

The eldest of Galileo's children was his daughter Virginia, who took the name Suor Maria Celeste in the convent. With Maria Celeste, apparently his most gifted child, Galileo carried on a long correspondence, from which 124 of her letters survive. Author Dava Sobel translated the correspondence from Italian into English, weaving the letters and other historical accounts into the unique portrait Galileo's Daughter: A Historical Memoir of Science, Faith and Love (Walker, 1999).

More here.

Thursday, October 22, 2009

Over at Crooked Timber, Daniel Davies offers Levitt and Dubner some advice on contrarianism, in the wake of the debate over the climate change section of Superfreakonomics:

I like to think that I know a little bit about contrarianism. So I’m disturbed to see that people who are making roughly infinity more money than me out of the practice aren’t sticking to the unwritten rules of the game.

Viz Nathan Myhrvold:

Once people with a strong political or ideological bent latch onto an issue, it becomes hard to have a reasonable discussion; once you’re in a political mode, the focus in the discussion changes. Everything becomes an attempt to protect territory. Evidence and logic becomes secondary, used when advantageous and discarded when expedient. What should be a rational debate becomes a personal and venal brawl.

Okay, point one. The whole idea of contrarianism is that you’re “attacking the conventional wisdom”, you’re “telling people that their most cherished beliefs are wrong”, you’re “turning the world upside down”. In other words, you’re setting out to annoy people. Now opinions may differ on whether this is a laudable thing to do – I think it’s fantastic – but if annoying people is what you’re trying to do, then you can hardly complain when annoying people is what you actually do. If you start a fight, you can hardly be surprised that you’re in a fight. It’s the definition of passive-aggression and really quite unseemly, to set out to provoke people, and then when they react passionately and defensively, to criticise them for not holding to your standards of a calm and rational debate. If Superfreakonomics wanted a calm and rational debate, this chapter would have been called something like: “Geoengineering: Issues in Relative Cost Estimation of SO2 Shielding”, and the book would have sold about five copies.

Economic Job Market Rumors is a board read and used largely by economics graduate students, post-docs and junior faculty. [H/t: Geoff Hodgson via Mark Blyth.] I don't know to what extent these comments on the Economics Nobel Prize award to Elinor Ostrom are representative of the mood of the field, but here they are and are they something:

Anonymous

Unregistered

why don't you read about her contribution instead of just counting publications and talk about rankings. These are imperfect measures of impact or quality of published work.

as for williamson – i was wondering who he is, but then i started reading his contribution and realized that i was tought his work in econ 101.

^ you almost sound like and angry woman

ostrom – female and environmental economics, nuff said.

The fact that most of us have not heard about her says enough about her contributions.

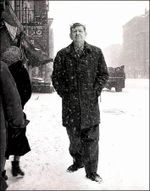

In 1960 Richard Avedon photographed the poet W. H. Auden on St. Mark’s Place, New York, in the middle of a snow storm. A few passers-by and buildings are visible to the left of the frame but the blizzard is in the process of freezing Auden in the midst of what is meteorologically termed “a white-out.” Avedon had by then already patented his signature approach to portraiture, so it is tempting to see this picture as a God-given endorsement of his habit of isolating people against a sheer expanse of white, as evidence that his famously severe technique is less a denial of naturalism than its apotheosis. Auden is shown full-length, bundled up in something that seems a cross between an old-fashioned English duffle coat and a prototype of the American anorak. Avedon, in this image, keeps his distance.

more from Geoff Dyer at Threepenny Review here.

Whenever Ayn Rand met someone new—an acolyte who’d traveled cross-country to study at her feet, an editor hoping to publish her next novel—she would open the conversation with a line that seems destined to go down as one of history’s all-time classic icebreakers: “Tell me your premises.” Once you’d managed to mumble something halfhearted about loving your family, say, or the Golden Rule, Rand would set about systematically exposing all of your logical contradictions, then steer you toward her own inviolable set of premises: that man is a heroic being, achievement is the aim of life, existence exists, A is A, and so forth—the whole Objectivist catechism. And once you conceded any part of that basic platform, the game was pretty much over. She’d start piecing together her rationalist Tinkertoys until the mighty Randian edifice towered over you: a rigidly logical Art Deco skyscraper, 30 or 40 feet tall, with little plastic industrialists peeking out the windows—a shining monument to the glories of individualism, the virtues of selfishness, and the deep morality of laissez-faire capitalism. Grant Ayn Rand a premise and you’d leave with a lifestyle.

more from Sam Anderson at New York Magazine here.

In truth, the essence of 1989 lies in the multiple interactions not merely of a single society and party-state, but of many societies and states, in a series of interconnected three-dimensional chess games. While the French Revolution of 1789 always had foreign dimensions and repercussions, and became an international event with the revolutionary wars, it originated as a domestic development in one large country. The European revolution of 1989 was, from the outset, an international event—and by international I mean not just the diplomatic relations between states but also the interactions of both states and societies across borders. So the lines of causation include the influence of individual states on their own societies, societies on their own states, states on other states, societies on other societies, states on other societies (for example, Gorbachev’s direct impact on East-Central Europeans), and societies on other states (for example, the knock-on effect on the Soviet Union of popular protest in East-Central Europe). These portmanteau notions of state and society have themselves to be disaggregated into groups, factions, and individuals, including unique actors such as Pope John Paul II.

more from Timothy Garton Ash at the NYRB here.

Café

In that café in a foreign town bearing a French writer’s

name I read Under the Volcano

but with diminishing interest. You should heal yourself,

I thought. I’d become a philistine.

Mexico was distant, and its vast stars

no longer shone for me. The day of the dead continued.

A feast of metaphors and light. Death played the lead.

Alongside a few patrons at the tables, assorted fates:

Prudence, Sorrow, Common Sense. The Consul, Yvonne.

Rain fell. I felt a little happiness. Someone entered,

someone left, someone finally discovered the perpetuum mobile.

I was in a free country. A lonely country.

Nothing happened, the heavy artillery lay still.

The music was indiscriminate: pop seeped

from the speakers, lazily repeating: many things will happen.

No one knew what to do, where to go, why.

I thought of you, our closeness, the scent

of your hair in early autumn.

A plane ascended from the runway

like an earnest student who believes

the ancient masters’ sayings.

Soviet cosmonauts insisted that they didn’t find

God in space, but did they look?

by Adam Zagajewski

translation: Clare Cavanagh

from Anteny (Antennas)

publisher: Znak, Kraków, 2005

David Bromwich in the London Review of Books:

Long before he became president, there were signs in Barack Obama of a tendency to promise things easily and compromise often. He broke a campaign vow to filibuster a bill that immunised telecom outfits against prosecution for the assistance they gave to domestic spying. He kept his promise from October 2007 until July 2008, then voted for the compromise that spared the telecoms. As president, he has continued to support their amnesty. It was always clear that Obama, a moderate by temperament, would move to the middle once elected. But there was something odd about the quickness with which his website mounted a slogan to the effect that his administration would look to the future and not the past. We all do. Then again, we don’t: the past is part of the present. Reduced to a practice, the slogan meant that Obama would rather not bring to light many illegal actions of the Bush administration. The value of conciliation outweighed the imperative of truth. He stood for ‘the things that unite not divide us’. An unpleasant righting of wrongs could be portrayed as retribution, and Obama would not allow such a misunderstanding to get in the way of his ecumenical goals.

The message about uniting not dividing was not new. It was spoken in almost the same words by Bill Clinton in 1993; and after his midterm defeat in 1994, Clinton borrowed Republican policies in softened form – school dress codes, the repeal of welfare. The Republican response was unappreciative: they launched a three-year march towards impeachment. Obama’s appeals for comity and his many conciliatory gestures have met with a uniform negative. If anything, the Republicans are treating him more roughly than Clinton.

More here.

Colin Nissan in McSweeney's:

I don't know about you, but I can't wait to get my hands on some fucking gourds and arrange them in a horn-shaped basket on my dining room table. That shit is going to look so seasonal. I'm about to head up to the attic right now to find that wicker fucker, dust it off, and jam it with an insanely ornate assortment of shellacked vegetables. When my guests come over it's gonna be like, BLAMMO! Check out my shellacked decorative vegetables, assholes. Guess what season it is—fucking fall. There's a nip in the air and my house is full of mutant fucking squash.

I may even throw some multi-colored leaves into the mix, all haphazard like a crisp October breeze just blew through and fucked that shit up. Then I'm going to get to work on making a beautiful fucking gourd necklace for myself. People are going to be like, “Aren't those gourds straining your neck?” And I'm just going to thread another gourd onto my necklace without breaking their gaze and quietly reply, “It's fall, fuckfaces. You're either ready to reap this freaky-assed harvest or you're not.”

Carving orange pumpkins sounds like a pretty fitting way to ring in the season. You know what else does? Performing an all-gourd reenactment of an episode of Diff'rent Strokes—specifically the one when Arnold and Dudley experience a disturbing brush with sexual molestation.

More here. [Thanks to Anjuli Kolb.]

Excerpt from Robert B. Talisse's new book, at the Cambridge University Press website:

Democracy is in crisis. So we are told by nearly every outlet of political comment, from politicians and pundits to academicians and ordinary citizens. This is not surprising, given that the new millennium seems to be off to a disconcerting and violent start: terrorism, genocide, torture, assassination, suicide bombings, civil war, human rights abuse, nuclear proliferation, religious extremism, poverty, climate change, environmental disaster, and strained international relations all forebode an uncertain tomorrow for democracy. Some hold that democracy is faltering because it has lost the moral clarity necessary to lead in a complicated world. Others hold that “moral clarity” means little more than moral blindness to the complexity of the contemporary world, and thus that what is needed is more reflection, self-criticism, and humility. Neither side thinks much of the other. Consequently our popular democratic politics is driven by insults, scandal, name-calling, fear-mongering, mistrust, charges of hypocrisy, and worse.

Political theorists who otherwise agree on very little share the sense that inherited categories of political analysis are no longer apt. Principles and premises that were widely accepted only a few years ago are now disparaged as part of a Cold War model that is wholly irrelevant to our post-9/11 context. An assortment of new paradigms for analysis are on offer, each promising to set matters straight and thus to ease the cognitive discomfort that comes with tumultuous times.

The diversity of approaches and methodologies tends to employ one of two general narrative strategies. On the one hand, there is the clash of civilizations account, which holds that the world is on the brink of, perhaps engaged in the early stages of, a global conflict between distinct and incompatible ways of life. On the other hand, there is the democracy deficit narrative, according to which democracy is in decline and steadily unraveling around us. Despite appearances, both narratives come in local and global versions.

More here.

From The Guardian:

In 1984, a history graduate at the University of Toronto upped sticks and moved to Indiana. His grades weren't good enough to stay on for postgraduate work, he'd been rejected by more than a dozen advertising agencies, and his application for a fellowship “somewhere exotic” went nowhere. The only thing left was writing – but it turned out that Malcolm Gladwell knows how to write. Gladwell's journalistic trajectory from junior writer on the Indiana-based American Spectator to the doors of the New Yorker makes for a story in itself, but only after arriving at the magazine did he become established as one of the most imaginative non-fiction writers of his generation. As of last year, he had three bestsellers under his belt and was named one of Time magazine's 100 most influential people. Gladwell owes his success to the trademark brand of social psychology he honed over a decade at the magazine. His confident, optimistic pieces on the essence of genius, the flaws of multinational corporations and the quirks of human behaviour have been devoured by businessmen in search of a new guru. His skill lies in turning dry academic hunches into compelling tales of everyday life: why we buy this or that; why we place trust in flakey ideas; why we are hopeless at joining the dots between cause and effect. He is the master of pointing out the truths under our noses (even if they aren't always the whole truth).

Gladwell's latest book, What the Dog Saw, bundles together his favourite articles from the New Yorker since he joined as a staff writer in 1996. It makes for a handy crash course in the world according to Gladwell: this is the bedrock on which his rise to popularity is built. A warning, though: it's hard to read the book without the sneaking suspicion that you're unwittingly taking part in a social experiment he's masterminded to provide grist for his next book. Times are hard, good ideas are scarce: it may just be true. But more about that later.

More here.

From Science:

Red-eye flights, all-night study sessions, and extra-inning playoff games all deprive us of sleep and can leave us forgetful the next day. Now scientists have discovered that lost sleep disrupts a specific molecule in the brain's memory circuitry, possibly leading to treatments for tired brains. Neuroscientists studying rodents and humans have found that sleep deprivation interrupts the storage of episodic memories: information about who, what, when, and where. To lay down these memories, neurons in our brains form new connections with other neurons or strengthen old ones. This rewiring process, which occurs over a period of hours, requires a rat's nest of intertwined molecular pathways within neurons that turn genes on and off and fine-tune how proteins behave.

Neuroscientist Ted Abel of the University of Pennsylvania and colleagues wanted to untangle these molecular circuits and pinpoint which one sleep deprivation disrupts. The researchers started by studying electrical signals in slices of the hippocampus–the brain's memory center–from sleep-deprived mice. They tested for long-term potentiation (LTP), a strengthening of connections between neurons that neuroscientists think underlies memory. When the scientists tried to trigger LTP in these brain slices with electrical stimulation or chemicals, they found that methods that fired up cellular pathways involving the molecule cyclic adenosine monophosphate (cAMP) didn't work. Brain cells from sleep-deprived mice also held about 50% less cAMP than did cells from well-rested mice. In the brain, cAMP acts as a molecular messenger, passing signals between proteins that regulate activity of genes responsible for memory formation.

More here.

Wednesday, October 21, 2009

The robots are coming. We’ve heard this claim frequently over the past 30 years: that someday soon robots will be ironing our clothes, washing our windows, and serving our morning coffee. In fact, the nearest we’ve come to achieving this vision of domestic automation is embodied by the iRobot Roomba, a puck-shaped robotic vacuum cleaner that does decent work on tile and hardwood, but won’t venture near pile. As a working roboticist, however, I can attest that the vision of domestic robotics is finally, if incrementally, becoming a reality. Robots will not be serving our coffee any time soon, but they will be entertaining our children and caring for our – hopefully not my – elderly relatives. And the likely form of these robots is decidedly humanoid. But what should a humanoid robot look like?

more from Karl Iagnemma at Frieze here.

Alas, peak is fleeting. In its wake comes past peak: “Brightness and depth of color have begun to fade. Leaf drop has begun and will accelerate from this point.” Past peak is indicated by a that’s-all-she-wrote, see-you-in-the-spring burgundy that slowly creeps down from Canada and spreads out across the country. This shouldn’t come as a shock, I suppose. What does is how this depiction creates a sense of pressure one doesn’t normally encounter when thinking about leisure time spent outdoors. Looking at the landscape, our eyes are drawn to the trees that make peak such an appealing moment. Looking at these maps, we also see that peak is here, but we simultaneously notice that past peak is following close behind. There is, of course, a melancholy to the sight of changing foliage. We know the transformation reveals the trees’ hunkering down for a long winter. We face those same cold, dark months. The fall foliage map charts this somber process, but it has a melancholy all its own. Looking at foliage, you’re watching summer disappear. Looking at the maps, you’re watching the same thing happen to fall.

more from Jesse Smith at The Smart Set here.

Weighing in early on what academics call “periodization” is a dicey proposition. If you try to locate the moment of a major paradigm shift, in the moment, perhaps by calling your album “Hip Hop Is Dead,” as Nas did in 2006, you’re slipping into weatherman territory. Will it rain tomorrow? Will another great rap album pop up? The life spans of genres and art forms are best perceived from the distance of ten or twenty years, if not more. With that in mind, I still suspect that Nas—along with a thousand bloggers—was not fretting needlessly. If I had to pick a year for hip-hop’s demise, though, I would choose 2009, not 2006. Jay-Z’s new album, “The Blueprint 3,” and some self-released mixtapes by Freddie Gibbs are demonstrating, in almost opposite ways, that hip-hop is no longer the avant-garde, or even the timekeeper, for pop music. Hip-hop has relinquished the controls and splintered into a variety of forms. The top spot is not a particularly safe perch, and every vital genre eventually finds shelter lower down, with an organic audience, or moves horizontally into combination with other, sturdier forms. Disco, it turns out, is always a good default move.

more from Sasha Frere-Jones at The New Yorker here.
