That title reads like I have doubts about the current state of affairs in the world of artificial intelligence. And I do – who doesn’t? – but explicating that analogy is tricky, so I fear I’ll have to leave our hapless captain hanging while I set some conceptual equipment in place.

First, I am going to take quick look at how I responded to GPT-3 back in 2020. Then I talk about programs and language, who understands what, and present some Steven Pinker’s reservations about large language models (LLMs) and correlative beliefs in their prepotency. Next, I explain the whaling analogy (six paragraphs worth) followed by my observations on some of the more imaginative ideas of Geoffrey Hinton and Ilya Sutskever. I return to whaling for the conclusion: “we’re on a Nantucket sleighride.” All of us.

This is going to take a while. Perhaps you should gnaw on some hardtack, draw a mug of grog, and light a whale oil lamp to ease the strain on your eyes.

What I Said in 2020: Here be Dragons

GPT-3 was released on June 11 for limited beta testing. I didn’t have access myself, but I was able to play around with it a bit through a friend, Phil Mohun. I was impressed. Here’s how I began paper I wrote at the time:

GPT-3 is a significant achievement.

But I fear the community that has created it may, like other communities have done before – machine translation in the mid-1960s, symbolic computing in the mid-1980s, triumphantly walk over the edge of a cliff and find itself standing proudly in mid-air.

This is not necessary and certainly not inevitable.

A great deal has been written about GPTs and transformers more generally, both in the technical literature and in commentary of various levels of sophistication. I have read only a small portion of this. But nothing I have read indicates any interest in the nature of language or mind. Interest seems relegated to the GPT engine itself. And yet the product of that engine, a language model, is opaque. I believe that, if we are to move to a level of accomplishment beyond what has been exhibited to date, we must understand what that engine is doing so that we may gain control over it. We must think about the nature of language and of the mind.

I still believe that.

Have we moved beyond that technology? In obvious ways we have. ChatGPT is certainly more fluent, flexible, and accessible. And we now how various engines that are more powerful in various ways. But measured against the goal of human-level competence across a wide range of disciplines, it’s not at all clear that we’ve advanced much. We are still dealing with the same kinds of machines. A car that can go 300 miles per hour is certainly faster than one that tops out at 205, but it won’t take you to the moon, or even across the 20-acre lake in New York’s Central Park.

While I realize that there is more to AI than large language models (LLMs) I am going to concentrate on them in the rest of this essay because that is overwhelmingly where the attention is, conceptually, technologically, and financially.

A Tangled Conundrum: What do You Know about Language?

Computer programs are crafted by programmers, at least they were before the advent of machine learning. Programs are written in various languages, FORTRAN, COBOL, and LISP, among others in the old days, and I don’t know what now, Python, C++, R, Haskell, who knows? These languages are standardized, with specified commands, data structures, and syntax. Once you know the language, you can read it and write it just like you would natural language.

And if, by chance, you have a job updating the documentation of source code, you don’t even have to be able to write it. I once had to do that for legacy COBOL code running on a discontinued mainframe. The client had to bring in programmers from India to fix the code. That was back in the mid-1990s. But I digress.

My point is simply that such programs are human-intelligible. As a practical matter, large systems will be written by many programmers, and altered as they are used, with code being modified, added, and deleted. As a result, no one person knows everything about the program. Bugs will occur, it will take time and effort to track them down and fix them, and who knows, maybe the fix will cause problems elsewhere in the program. But, at least in principle, these programs are intelligible.

That is not the case with the large language models produced by machine learning (ML). The program which undertakes the learning – I note in passing that some find that term, “learning” too anthropomorphic, but I’m comfortable with it; that’s not a nit I’m itching to pick – is a program just like any other program. It is human-written and human-intelligible. That program then operates on a large corpus of text and uses statistical methods to “divine” (not a term of art) the structure in the text. The result of this analysis is a language model. These days it is a very large model, hence the term large language model (LLM). The LLM is NOT human intelligible. It is said to be opaque, a black box. One can open it, and look around, but what those things are and how they’re structured, that is puzzling.

Once the LLM is suitable packaged and put for casual use, like ChatGPT, it produces intelligible language. Since one “operates” ChatGPT, and its kith and kin, by prompting them in ordinary language, it must “read” as well.

We thus find ourselves with a tangled conundrum. On the one hand, experts in ML create human intelligible ML engines. Those engines create LLMs that these experts do not understand. Anyone who can read and write can interact with an LLM, readily and easily, perhaps even productively. Most of us, however, do not know what the experts know about the ML engines. For the most part, however, those experts don’t know anything more about the language these LLMs produce than we do. On that score we’re all even, more or less.

Taken as a whole, no one knows what this system of machines and humans is doing and how it operates. Yet we are now in the process of installing it into our lives and institutions. What’s going on?

There are, however, experts who do know more about language (and human cognition) than most AI experts do. Some of us have studied language and cognition and have published about it. Emily Bender is one of the most prominent critics of machine learning; she is a professor of linguistics and computational linguistics at the University of Washington. Gary Marcus is a well-known cognitive scientist who has published research on various topics, including child language and connectionist theory, and has recently testified before Congress on AI. David Ferrucci is not now so visible as he was when he lead the IBM Watson team that beat Brad Rutter and Ken Jennings at Jeopardy in 2011. Before that he worked with Selmer Bringsjord to create BRUTUS, an AI system that tells stories using classical symbolic methods, methods that are human intelligible. After Ferrucci left IBM he sojourned with Bridgewater Associates, one of the world’s largest hedge funds, before founding his own company, Elemental Cognition, which uses hybrid technology, combining classical techniques and contemporary ML techniques. As such, his company stands in implicit critique of the regnant ML-only regime.

For a closer look at the kind of critique that students of human cognition and language can muster, let’s turn to Steven Pinker and consider some remarks that Pinker made in a debate with Scott Aaronson. Aaronson is a computer scientist who is an expert in computational complexity and quantum computing and who runs a well-known blog, Shtetl Optimized. In late June of 2022 Aaronson offered to host an essay Pinker had written about AI scaling, that is building a more capable machine by the simple strategy of increasing the available computing power. Here’s how Aaronson put the question: “Will GPT-n be able to do all the intellectual work that humans do, in the limit of large n?” Here is how Pinker began his essay:

Will future deep learning models with more parameters and trained on more examples avoid the silly blunders which Gary Marcus and Ernie Davis entrap GPT into making, and render their criticisms obsolete? And if they keep exposing new blunders in new models, would this just be moving the goalposts? Either way, what’s at stake?

It depends very much on the question. There’s the cognitive science question of whether humans think and speak the way GPT-3 and other deep-learning neural network models do. And there’s the engineering question of whether the way to develop better, humanlike AI is to upscale deep learning models (as opposed to incorporating different mechanisms, like a knowledge database and propositional reasoning).

The questions are, to be sure, related: If a model is incapable of duplicating a human feat like language understanding, it can’t be a good theory of how the human mind works. Conversely, if a model flubs some task that humans can ace, perhaps it’s because it’s missing some mechanism that powers the human mind. Still, they’re not the same question: As with airplanes and other machines, an artificial system can duplicate or exceed a natural one but work in a different way.

On the question of whether or not LLMs think and speak like humans, I think it’s an important and interesting question, and I’ve thought about it a great deal. But it also seems secondary at this point. For reasons I won’t go into (see remarks in ChatGPT intimates a tantalizing future, pp. 13-15), I am all but convinced that ChatGPT, for example, does not tell stories the way humans do. Nor, for that matter, are the stories it does tell very good – though its science fiction encounter between the OpenWHALE and the Starship Enterprise is a rip-snorter. What matters for the scaling argument is what they do, not what specific mechanism(s) they use do it. For that reason I’m going to skip over Pinker’s remarks on that point. The important point is that the LLMs employ some kind of mechanism and that one can investigate it, but for the most part the AI community doesn’t seem very interested in those mechanisms.

Let’s return to Pinker’s argument:

Regarding the second, engineering question of whether scaling up deep-learning models will “get us to Artificial General Intelligence”: I think the question is probably ill-conceived, because I think the concept of “general intelligence” is meaningless. (I’m not referring to the psychometric variable g, also called “general intelligence,” namely the principal component of correlated variation across IQ subtests. This is a variable that aggregates many contributors to the brain’s efficiency such as cortical thickness and neural transmission speed, but it is not a mechanism (just as “horsepower” is a meaningful variable), but it doesn’t explain how cars move.) I find most characterizations of AGI to be either circular (such as “smarter than humans in every way,” begging the question of what “smarter” means) or mystical—a kind of omniscient, omnipotent, and clairvoyant power to solve any problem. No logician has ever outlined a normative model of what general intelligence would consist of, and even Turing swapped it out for the problem of fooling an observer, which spawned 70 years of unhelpful reminders of how easy it is to fool an observer.

If we do try to define “intelligence” in terms of mechanism rather than magic, it seems to me it would be something like “the ability to use information to attain a goal in an environment.” […] Specifying the goal is critical to any definition of intelligence: a given strategy in basketball will be intelligent if you’re trying to win a game and stupid if you’re trying to throw it. So is the environment: a given strategy can be smart under NBA rules and stupid under college rules.

Pinker has more to say, but I would like to quote him on one more point:

Back to GPT-3, DALL-E, LaMDA, and other deep learning models: It seems to me that the question of whether or not they’re taking us closer to “Artificial General Intelligence” (or, heaven help us, “sentience”) is based not on any analysis of what AGI would consist of but on our being gobsmacked by what they can do. But refuting our intuitions about what a massively trained, massively parameterized network is capable of (and I’ll admit that they refuted mine) should not be confused with a path toward omniscience and omnipotence.

This is an important point, the sudden and unexpected nature of advances in the previous three or four years has made an impact, but that is not an argument.

Pinker has made other arguments in the debate, and a subsequent rematch and many others have made arguments as well. My basic point is that there ARE explicit arguments against the idea that the current research regime is well on the way to scaling Mount AGI and that some of these arguments are based on expert knowledge about how humans think and act. Such knowledge is in short supply in the world of artificial intelligence.

Now I would like to some more personal observations of my own. I started out as a literary critic and then moved into cognitive science – without, however, completely abandoning literary criticism. In both cases I am accustomed to regarding language, texts, as phenomena motivated by forces and mechanisms that are not visible. I have devoted a lot of effort to trying to understand what those forces and mechanisms are.

In the case of literary criticism, it’s more about looking for forces than mechanisms. In fact, the discipline seems institutionally resistant to mechanism. The forces? Hidden desires, as elaborated by depth psychology, Freud and/or Jung, and ideological bias, as revealed though Marxist analysis. Feminist critics look for both, as do post-colonialists, African Americanists, and all the rest. The literary text is a field of contention among all these forces. The critic is looks for signs of their presence, follows their trails, tracks them down in their hidden lairs, and exposes them to the blinding light of truth – a very Platonic story, after all, don’t you see? Whatever you make think of that enterprise – I’m deeply ambivalent about it myself – it does not take language at face value.

Cognitive science is a different kettle of fish. Literary critics express the results of their unmasking of texts in the form of more texts, language about language. Cognitive scientists have different modi operandi. Some make experimental observations. Others construct formal grammars and still others make computer simulations. In each case the results are something other than language.

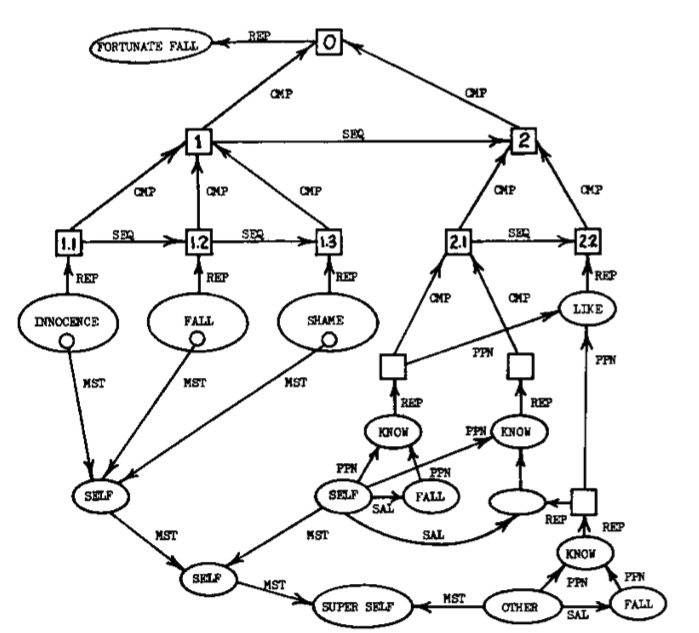

In my case I studied computational semantics. One result of this kind of work is a computer program. I personally never got that far. But I worked in the context of a research group where others produced programs. I produced graphic models, elaborate diagrams on which computer code can be based. This is a fragment from such a model, one I used to analyze a Shakespeare Sonnet:

The net result, then, is that I see texts as the product of forces and mechanisms. It is my job to figure out how those mechanisms work (for that is my central interest here, computational mechanisms rather than social forces).

In contrast, AI experts seem not to regard texts as the product of forces and mechanisms. I assume that they know and admit, in an abstract way, that those things exist. But they’re not curious about them. Hence, they are willing to take the opacity of LLMs at face value. As long as their engines keep cranking text out, they’re happy. That’s how their institutional imperatives work. When you add commercial imperatives into the mix, why, it’s just too damn expensive to worry about that other stuff, so why bother?

Where I see a rich field of inquiry, language and text, they see nothing. Where I see a digital wilderness, inviting exploration, they see the gleaming skyscrapers of a digital city, a city where none of the buildings have entrances.

I would add that, as far as I can tell, this blindness isn’t a matter of the attitudes and ideas of individual researchers, as individuals. This blindness has been institutionalized. That one is well-qualified to address questions about the human-level capacities of AIs, regardless of one’s actual knowledge of human capabilities, that is implicit in the institutionalized culture of AI and has been there since Alan Turing published “Computing Machinery and Intelligence” in 1950. That’s the paper in which he proposed the so-called Turing Test for evaluating machine accomplishment. The test explicitly rejects comparisons based on internal mechanisms, regarding them as intractably opaque and resistant to explanation, and instead focuses on external behavior.

Let’s be clear. Later on I will have some strenuous criticism for ideas expressed by specific individuals. But that criticism is not directed at them as individuals, and certainly not the sharpness of my tone. I have no choice but to name specific researchers; that’s the only way I can establish that these ideas and attitudes are held by real people and are not the hallucinations of an ungrounded chatbot. My criticisms and my tone are directed through the individuals and at the institutional culture they exemplify. Whatever less august researchers may think, the discipline’s culture requires that those upon whom leadership responsibility has been endowed, they must make far-reaching and often shocking statements about what computational technology can do now and in that real-soon-now future that’s always beyond our reach like the ever-retreating pot of gold at the end of the rainbow.

The Whaling Analogy Explicated

At the height of the whaling industry in America, ships sailed out of ports in New England, south through the Atlantic, around the treacherous seas surrounding the Cape of Horn, and into the Pacific. It was a dangerous and treacherous voyage. Once you found whales in the Pacific, the danger continued, for a whale could ram your boat and damage it, if not sink it entirely. Consider the whale-hunting enterprise as a metaphor for our general situation. Like it or not, we’re all in or somehow linked to those boats and the promise of rich returns from the hunt.

The ship is a metaphor for the technology itself. The captain is a metaphor that conflates two functions, that of the managers who direct the enterprise but perhaps more so that of the engineers and scientists who build the technology. The whale, obviously, is a metaphor for what the enterprise is designed to achieve. You kill whales and turn their carcasses into useful products, mostly oil and bone. What is the AI technology supposed to achieve?

Technically, it is supposed to perform various cognitive tasks as well as or even better than humans do. For some the goal is something called AGI, artificial general intelligence. Just what that means is a bit vague, but roughly it means human-level performance across a wide range of tasks. Beyond that, there is some fear that, once the technology has achieved AGI-level competence, it will start improving itself and FOOM (a term of art, believe it or not) its way to superintelligence. And once superintelligence has been achieved, who knows? But perhaps our mind-children will turn on us and wipe us out. Somewhere in there we have slipped into science fiction.

Perhaps these whalers really are now adventuring around the Cape of Horn and preparing to head into the Pacific to harvest whales. What if, however, they are in fact on an enormous soundstage somewhere in Hollywood or Brooklyn, or maybe Dubai Studio City (they’re all over the place these days), playing roles in a big budget conflation of the Star Trek, Alien, and Terminator franchises? What if the whales they’re pursing are fictional?

The point I’ve been getting at is that I don’t think AI experts are in a position to make that judgment. Their expertise doesn’t seem to extend much beyond making ML engines. They know how to craft whaling vessels, but not how to navigate on the open seas and to hunt whales. On those matters they’re little more knowledgeable than a typical landlubber from the Midwest.

I’m not sure that anyone is an expert in this technology, regardless of their background. It is genuinely new and deeply strange. No one knows what is going on. The point of my analogy is to set up a disjunction between knowledge of how to build and operate the technology, the ship, and knowledge of where the ship can go and what it can do, sophisticated perceptual and cognitive tasks comparable to high-level human competencies. Experts in artificial intelligence are proficient in the former, but all too often are naïve and underinformed on the latter.

The Experts Speak for Themselves

In the first week of October of this year, MIT’s Center for Brains, Minds, and Machines (CBMM) celebrated its tenth anniversary with a two-day symposium. Here’s a video of one of those meetings, a panel discussion moderated by Tomaso Poggio, with Demis Hassibis, Geoffrey Hinton, Pietro Perona, David Siegel, and Ilya Sutskever.

Near the end of the discussion the question of creativity comes up. Demis Hassibis says AIs aren’t there yet. Geoffrey Hinton brings up analogy:

1:18:28 – GEOFFREY HINTON: We know that being able to see analogies, especially remote analogies, is a very important aspect of intelligence. So I asked GPT-4, what has a compost heap got in common with an atom bomb? And GPT-4 nailed it, most people just say nothing.

DEMIS HASSABIS: What did it say …

1:19:09 – And the thing is, it knows about 10,000 times as much as a person, so it’s going to be able to see all sorts of analogies that we can’t see.

DEMIS HASSABIS: Yeah. So my feeling is on this, and starting with things like AlphaGo and obviously today’s systems like Bard and GPT, they’re clearly creative in …

1:20:18 – New pieces of music, new pieces of poetry, and spotting analogies between things you couldn’t spot as a human. And I think these systems can definitely do that. But then there’s the third level which I call like invention or out-of-the-box thinking, and that would be the equivalent of AlphaGo inventing Go.

Let’s stay with that second level, all those potential analogies. It is one thing for GPT-4 to explicate the seemingly far-fetched Hinton proposed. That’s the easy part. The difficult part, though, isn’t that in coming up with the analogy in the first place? Where is our Superintelligent AI of the future going to “stand” so that it can actually spot all those zillions of analogies. That will require some kind of procedure, no?

For example, it might partition all that knowledge into discrete bits and then begin by creating a 2D matrix with a column and a row for each discrete chunk of knowledge. Then it can move systematically through the matrix, checking each cell to see whether or not the pair in that cell is a useful analogy. What kind of tests does it apply to make that determination? I can imagine there might be a test or tests that allows a quick and dirty rejection for many candidates. But those that remain, what can you do but see if any useful knowledge follows from trying out the analogy. How long will that determination take?

But why stop at a 2D matrix? How about a 3D, 4D, 17D matrix? Why don’t we go all the way to an ND matrix where N is the number of discrete pieces of knowledge we’ve got?

That’s absurd on the face of it. But what else is there? There has to be something, no? I have no trouble imagining that, in principle, having these machines will be very useful – as they’re already proving to be, but ultimately, I believe we’re going to end up with a community of human investigators interacting with one another while they use technology we can just barely imagine, if at all, to craft deeper understandings the world.

Let’s return to Hinton. Speaking at a conference at MIT in May, he expressed some of his fears about AI:

Artificial intelligence can also learn bad things — like how to manipulate people “by reading all the novels that ever were and everything Machiavelli ever wrote,” for example. “And if [AI models] are much smarter than us, they’ll be very good at manipulating us. You won’t realize what’s going on,” Hinton said. “So even if they can’t directly pull levers, they can certainly get us to pull levers. […]”

At worst, “it’s quite conceivable that humanity is just a passing phase in the evolution of intelligence,” Hinton said. Biological intelligence evolved to create digital intelligence, which can absorb everything humans have created and start getting direct experience of the world.

“It may keep us around for a while to keep the power stations running, but after that, maybe not,“ he added.

Geoffrey Hinton is by no means the only one who believes such things. Those memes are viral in Silicon Valley. I don’t find them to be credible.

Let’s look at one last bit of “crazy” from Hinton, a bit of crazy with which I’m deeply sympathetic. At the annual Neural Information Processing Systems conference, which was held in New Orleans in 2022:

Future computer systems, said Hinton, will be take a different approach: they will be “neuromorphic,” and they will be “mortal,” meaning that every computer will be a close bond of the software that represents neural nets with hardware that is messy, in the sense of having analog rather than digital elements, which can incorporate elements of uncertainty and can develop over time. […]

These mortal computers could be “grown,” he said, getting rid of expensive chip fabrication plants. […]

The new mortal computers won’t replace traditional digital computers, Hilton told the NeurIPS crowd. “It won’t be the computer that is in charge of your bank account and knows exactly how much money you’ve got,” said Hinton.

“It’ll be used for putting something else: It’ll be used for putting something like GPT-3 in your toaster for one dollar, so running on a few watts, you can have a conversation with your toaster.”

Speculative? Sure is. Why do I like it? Because it seems plausible to me. But what would I know, I’m not a biologist. More than that, however, Hinton is speculating about the nature of the machine itself, not about some fantastic feats of mental magic it would perform in the future, not to mention manipulating us to oblivion.

Let us give Hinton a break and consider one of his students, Ilya Sutskever, one of the founders of OpenAI. He’s certainly one of our leading AI researchers and he has a particular interest in AI safety. Scott Aaronson recently took leave from his job at the University of Texas, Austin, to work on safety issues for OpenAI. He reports to Sutskever and has posted about his views on AI safety at his blog. He reports:

I have these weekly calls with Ilya Sutskever, cofounder and chief scientist at OpenAI. Extremely interesting guy. But when I tell him about the concrete projects that I’m working on, or want to work on, he usually says, “that’s great Scott, you should keep working on that, but what I really want to know is, what is the mathematical definition of goodness? What’s the complexity-theoretic formalization of an AI loving humanity?” And I’m like, I’ll keep thinking about that! But of course it’s hard to make progress on those enormities.

I can understand why Aaronson would sidestep such questions.

If I might intrude a bit and speak as a humanist who has published on love and marriage in Shakespeare, what do those questions mean? I understand the words, but I don’t see even the hint of a conceptual web coherently spanning goodness, loving for humanity and complexity theory that does justice to all terms. Nor should you think that my puzzlement reflects a standard humanistic aversion to math. I’m not a standard humanist. The diagram I posted up there is a complex mathematical object. The sonnet it explicates in part – if in a way most literary critics find strange and alienating – is Shakespeare’s famous “Th’ expense of Spirit.” The sonnet is about lust, kissing cousin to love.

I do not see how asking questions like Sutskever’s serves a useful purpose. Scott Aaronson may well discover concepts and techniques that are useful if securing safe AI. But his success would be more in spite of such questions than because of or in service to them.

That question is an index of the gaping disconnect I see between what experts in AI understand and the goals they seem to be pursuing. They are like my incognizant whaling captain. He knows his ship thoroughly up and down and inside out in 37 dimensions but doesn’t know the difference between longitude and latitude and wouldn’t recognize a sperm whale as it breaches and heads directly for the bow of his ship. The ship may be taller than a skyscraper and accelerate faster than a speeding bullet, but that doesn’t make it impervious to danger. Moby Dick is not afraid.

Where are we going? We’re on a Nantucket sleighride.

What is going to happen? How would I know? Moreover, as I’m not an AI expert I’m not professionally obligated to hazzard prophesies.

Still, since you ask: We’re on a Nantucket sleighride. What’s that?

As I’m sure you know, whales aren’t hunted from the main boat. It serves as transportation to and from the workplace, as a dormitory for the crew, and as a factory for carving up the carcass and rendering whale oil from the blubber, whalebone from the skeleton, and extracting ambergris from the intestines.

The actual hunting is done from small boats, like the one carved onto the whale’s tooth to the right. Once a harpoon has been lodged securely in the whale’s flesh, the whale is likely to swim for its life. The sailors in the boat have no choice but to hang on and hope that the whale dies before some disaster befalls their fragile craft. That mad dash is called a Nantucket sleighride.

That’s where we now. We’re all in it together, like it or not.

* * * * *

Let me continue by observing that, when Columbus set sail from Palos de la Frontera on August 3, 1492, he was bound for the Indies. He didn’t get there, but he did make landfall in the Bahamas. His failure cannot reasonably be attributed to any failing on his part or on the part of this crew. They simply did not know that two continents lay between Europe and the Indies. On the whole things worked out well, at least for the Europeans, though not for the indigenous peoples.

Phlogiston chemistry, though, is perhaps a better analogy. The existence of phlogiston was proposed in the 17th century. It was hypothesized to account for rusting and combustion. By the late 18th century, however, experiments by Antoine Lavoisier and others led to the discovery of oxygen.

The idea of AGI, and all that clings to it, is dubious. What will it give way to? I do not know, but what I expect to happen is that continued interaction between AI, linguistics, neuroscience, cognitive psychology and, yes, even philosophy, will yield models and theories that will give us the means to construct mechanistic accounts of both machine and human intelligence. How long will that take?

Really? Surely you know better than to ask that question. The course of basic research cannot be predicted.

At the moment machine learning is attracting by far the largest proportion of the research budget devoted to artificial intelligence and its sister discipline, computational linguistics. What will happen when LLMs fail to deliver endless pots of gold, as I believe is likely? I fear that more and more resources will be devoted to existing forms of machine learning in order to recoup losses from current and past investments. That’s the sunk cost fallacy.

Even now other research directions, such as those being proposed by Gary Marcus and others, are not being pursued. Basic research cannot deliver results on a timetable. Some directions will pan out, others will not. There is no way to determine which is which beforehand. Without other viable avenues for exploration, the prospect of throwing good money after bad may seem even more appealing and urgent. What at the moment looks like a race for future riches, intellectual and material, may turn into a death spiral race to the bottom.

Through that prospect I see a deeper reason to fear for the future. In a very recent podcast, Kevin Klein, Kevin Roose, and Casey Newton were discussing the recent shakeup in OpenAI:

Kevin Klein: Yeah, I first agree that clearly A.I. safety was not behind whatever disagreements Altman and the board had. I heard that from both sides of this. And I didn’t believe it, and I didn’t believe it, and I finally was convinced of it. I was like, you guys had to have had some disagreement here? It seems so fundamental.

But this is what I mean the governance is going worse. All the OpenAI people thought it was very important, and Sam Altman himself talked about its importance all the time, that they had this nonprofit board connected to this nonfinancial mission. […]

He goes on to remark that the new and stronger board may be more capable of standing up to Altman. But, while they know how to run profit-making companies, they may not be so committed to AI safety as the departing board members were.

Kevin Roose: No, I think that’s right. And it speaks to one of the most interesting and strangest things about this whole industry is that the people who started these companies were weird. And I say that with no normative judgment. But they made very weird decisions.

They thought A.I. was exciting and amazing. They wanted to build A.G.I. But they were also terrified of it, to the point that they developed these elaborate safeguards. […] And so I think what we’re seeing is that that kind of structure is bowing to the requirements of shareholder capitalism which says that if you do need all this money to run these companies, to train these models, you are going to have to make some concessions to the powers of the shareholder and of the money.

Casey Newton responds:

And that is just a sad story. I truly wish that it had not worked out that way. I think one of the reasons why these companies were built in this way was because it just helped them attract better talent. I think that so many people working in A.I. are idealistic and civic-minded and do not want to create harmful things. […] And I want them to be empowered. I want them to be on company boards. And those folks have just lost so much ground over the past couple of weeks. And it is a truly tragic development, I think, in the development of this industry.

Is this technology so new, so powerful, and so utterly different, that our existing institutional arrangements are not well-suited to fostering it?

I have written a number of posts at my home blog, New Savanna, intended to support, illuminate, and extent the arguments I’ve made here. Some are short and some are longer. If you’re curious about the whaling metaphor, I’ve taken it from Marc Andreesen, who sees the financing of whaling voyages as a precursor to contemporary venture capital financing. Here is a relatively short post about the profitability of whaling: Whaling, Andreesen, Investment, and the Risk Premium. Here’s a longer post, a piece of fiction which I wrote with the help of Herbert Melville and Biff Roddenberry, both played by ChatGPT: The OpenWHALE Rounds the Horn and Vanishes, An Allegory about the Age of Intelligent Machines. Finally, there is a long post, A dialectical view of the history of AI, Part 1: We’re only in the antithesis phase. [A synthesis is in the future.].

I’ve written a number of articles for 3 Quarks that are germane to my views on this matter:

- A New Counter Culture: From the Reification of IQ to the AI Apocalypse

- Beyond artificial intelligence?

- ChatGPT is a miracle of rare device. Here’s how I’m thinking about it.

- On the Cult of AI Doom

- From “Forbidden Planet” to “The Terminator”: 1950s techno-utopia and the dystopian future

On the hazards of AI, Ali Minai has provided a very useful classification here in 3 Quarks: The Hazards of AI: Operational Risks.

You might also want to consult an interesting online seminar held down the hall at Crooked Timber: The Political Ideologies of Silicon Valley. I feel a special affection for Crooked Timber. Aside from the fact that I occasionally post comments there, as I have for this seminar, it is one of the last academic blogs remaining from the days when blogs were hot. It dates all the way back to July of 2003.

Finally, about those two whales’ teeth. Yes, they’re from sperm whales. They’ve been scrimshawed by my late Uncle, Rune Ronnberg, who came to America from Sweden on a square-rigger, the Abraham Rydberg. It was serving as a training ship for the Swedish merchant marine. He arrived just as World War II broke out. Consequently, he was stuck here. He married one of my father’s sisters, Karen, and went into business, first as rigger in Gloucester, Massachusetts, then as a shop owner in Rockport. At the same time he practiced traditional sailor’s crafts, such as knotting, scrimshaw, and, above all, model ship building (pp. 28-29).