I have been thinking about the culture of AI existential risk (AI Doom) for some time now. I have already written about it as a cult phenomenon, nor am I the only one. But I think that’s rather thin. While I still believe it to be true, it explains nothing. It’s just a matter of slapping on a label and letting it go at that.

While I am still unable to explain the phenomenon – culture and society are enormously complex: Just what would it take to explain AI Doom culture? – I now believe that “counter culture” is a much more accurate label than “cult.” In using that label I am deliberately evoking the counter culture of the 1960s and 1970s: LSD, Timothy Leary, rock and roll (The Beatles, Jimi Hendrix, The Jefferson Airplane, The Grateful Dead, and on and on), happenings and be-ins, bell bottoms and love beads, the Maharishi, patchouli, communes…all of it, the whole glorious, confused gaggle of humanity. I was an eager observer and fellow traveler. While I did tune in and turn on, I never dropped out. I became a ronin scholar with broad interests in the human mind and culture.

I suppose that “Rationalist” is the closest thing this community has as a name for itself. Where psychedelic experience was at the heart of the old counter culture, Bayesian reasoning seems to be at the heart of this counter culture. Where the old counter culture dreamed of a coming Aquarian Age of peace, love, and happiness, this one fears the destruction of humanity by a super-intelligent AI and seeks to prevent it by figuring out how to align AIs with human values.

I’ll leave Bayesian reasoning to others. I’m interested in AI Doom. But to begin understanding that we must investigate what a post-structuralist cultural critic would call the discourse or perhaps the ideology of intelligence. To that end I begin with a look at Adrian Monk, a fictional detective who exemplifies a certain trope through which our culture struggles with extreme brilliance. Then I take up the emergence of intelligence testing in the late 19th century and the reification of intelligence in a number, one’s IQ. In the middle of the 20th century the discourse of intelligence moved to the quest for artificial intelligence. With that we are at last ready to think about AI x-risk (as it is called, “x” for “existential”).

This is going to take a while. Pull up a comfortable chair, turn on a reading light, perhaps get a plate of nachos and some tea, or scotch – heck, maybe roll a joint, it’s legal these days, at least in some places – and read.

Intelligence and the Detective (Adrian Monk)

Adrian Monk is the central character in the eponymous TV series, Monk, a comedy-drama mystery series that ran from 2002 to 2009. Monk is a brilliant detective who is beset by a bewildering plethora of compulsions and phobias (312 by his count). The series takes place in San Francisco, which is rhetorically convenient for my argument, but that is incidental. Neither hippies nor computer nerds play a significant role in the show, if any role at all.

As the series opens, Monk is an ex-detective for the San Francisco police who now works as a consultant for the police force and occasionally takes other clients. While he was on the police force his wife, Trudy, was murdered by a car bomb. As a result Monk lost his job and refused to leave the house for several years. But his nurse, Sharona Fleming, is finally able to coax him out and he begins work as a consultant. He keeps her with him at all times when he is working, sometimes even afterward. She drives him around, hands him wipes so he can clean his hands whenever he touches anything (including other people’s hands, when he is forced to shake them), and, in general, coaches and cajoles him through the day. (Later in the series Sharona is replaced by Natalie Teeger.) We learn through flashbacks that he was bullied as a child. He is still haunted by memories of having his head dunked in a toilet (“swirlies”). He dreams of being reinstated on the police force and is haunted by his wife’s death, working on and off to solve her murder.

His apartment is extremely neat and orderly, although he keeps the coffee table in his living room at an oblique angle like it was when he found out about his wife’s death. He wears the same outfit every day, insists on drinking only his favorite brand of bottled water (the name of which escapes me at the moment), touches lamp posts, telephone poles, parking meters, whatever, as he is walking along, and on and on. He’s afraid of heights, which is common enough, no? But what about “milk, ladybugs, harmonicas, heights, asymmetry, enclosed spaces, foods touching on his plate, messes, and risk” (from the Wikipedia article)?

At the same time he has superior powers of observation, a mind for detail, a sense of pattern, and superior deductive and reasoning skills. This man who is himself so disordered, while insisting on maintaining an idiosyncratic sense of order, is able to see, analyze, and describe patterns in events that elude others. He’s a brilliant detective whom the San Francisco police force consults on particularly baffling murder cases and on high-profile cases. Only Monk can do the job.

Brilliant and weird. His weirdness makes it difficult to work with others and yet, paradoxically, is a necessary condition for his doing so. Why? Because people are frightened and put off by his brilliance. I know this from personal experience. When I entered high school, I was nicknamed “Einstein.” At first, I was pleased because it indicated that they recognized my brilliance. But I quickly realized that it was also a way of singling me out and isolating me.

By endowing Monk with a cornucopia of oddities, his creators gave people an obvious reason to be put-off by him. This allows them to avoid and ostracize him without having to acknowledge that it is his brilliance that bothers them. But when a difficult case comes up, one that requires a Monk-like mind, they’re more than happy to discount his oddities and avail themselves of his brilliance. His disabilities, then, become a way for him to function productively in society.

Finally, and perhaps above all else, I read Monk’s combination of brilliance and weirdness as a protest against the Cartesian dualism that separates mind from body and, consequently, is ever baffled that they could possibly work together. The brilliant mind and warped body are one and the same. This is particularly apparent in the dance of discovery Monk enacts at the crime scene. He wanders slowly and apparently randomly around, his torso often at an angle, his arms moving around, almost as if he were conducting an orchestra floating in the air, with his hands open and his fingers spread wide. It’s as though he becomes an antenna absorbing the vibes lingering at the scene after the crime’s been committed. It’s odd, no one understands it, nor can anyone imitate it (some try), but it works.

Adrian Monk is not the only such character in fiction. There are many. Sherlock Holmes was a brilliant detective, but also a bachelor (with an unhappy love affair in his past) and a loner who scraped away on the violin while thinking and who was probably addicted to cocaine as well. Moving out of the detective genre and returning to the present, we have Commander Spock of Star Trek, a brilliant alien who can communicate telepathically with others of various species (the famous Vulcan mind-meld) but who has to keep his emotions rigorously suppressed. He was succeeded by Commander Data, a brilliant android who was constantly wondering what emotion is. And then we have Abe Weissman, Midge Maisel’s father in The Marvelous Mrs. Maisel. He’s a brilliant mathematician – though not so brilliant as he imagines himself to be – who is rigid in his routines, demanding, and obtuse. While not pathologically weird like Adrian Monk, he is nonetheless a representative of the type. And, wouldn’t you know, Tony Shalhoub played both characters.

The world of fiction is littered with such characters. ChatGPT has kindly listed a number of them – I dread to think what it would have done if I’d asked it to name and characterize examples of each type. How many examples could it have given me?

Intelligence and IQ

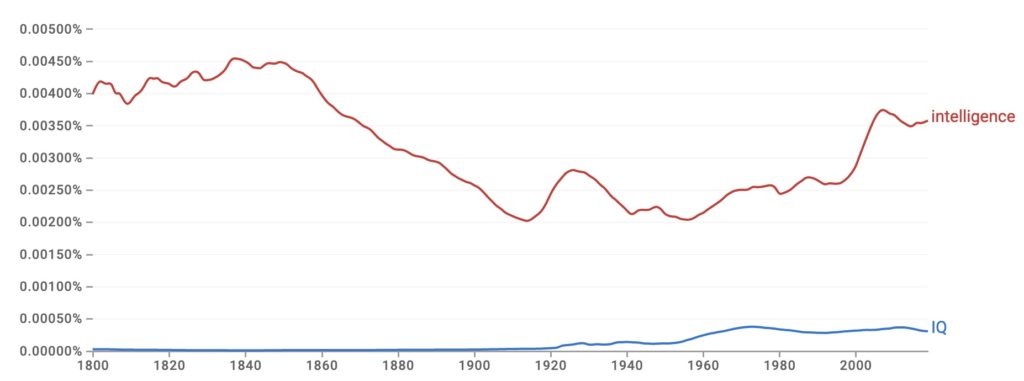

The concept of intelligence as an attribute of minds, mostly human, sometimes animal, and now computers apparently, arose in the 19th century. Consider this graph, which shows the occurrence of two terms, “intelligence” and “IQ,” since 1800:

“Intelligence” has been in use throughout the period, but “IQ” only shows up near the end of the 19th century. Prior to that time, and continuing through to the present, “intelligence” has also meant “the collection of information of military or political value” (from a dictionary). Its use as “the ability to acquire and apply knowledge and skills” came about as first Francis Galton, in the 19th century, set out to measure individual differences in mental ability, and later Alfred Binet and Théodore Simon, in the early 20th century, developed the Binet-Simon Scale for measuring a child’s intelligence.

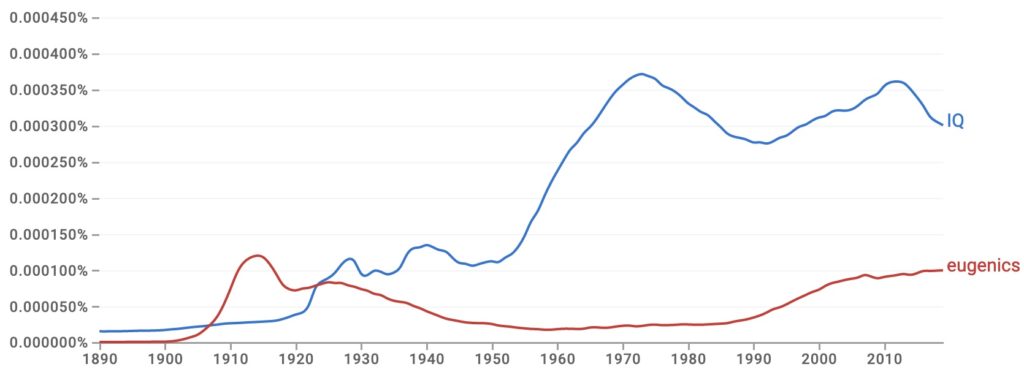

The use of IQ test became widespread in schools and for the military and fostered widespread public discourse on testing and intelligence. The early 20the century saw the rise of the eugenics movement, which is depicted in this chart showing the prevalence of the words “IQ” and “eugenics”:

Starting with Connecticut in 1896, states passed laws with eugenic criteria governing who was eligible to marry, and Indiana enacted forced sterilization in 1907. Immigration restrictions were enacted as well. (By the way, I have had ChatGPT look into IQ and related matters. Here’s what it found.)

Thus, through the use of standardized testing, IQ served as a vehicle for institutionalizing and reifying the idea of intelligence. Intelligence became a thing, just like a rock or a whiff of smoke, but more abstract. The concept remains problematic and deeply debated to this day.

The technical literature is large and complex and I do not by any means have a deep grasp of it. But I have looked into it and have made my peace with the concept of IQ, provided that intelligence is not reified into that single number.

Let me explain by way of an analogy. The analogy I have in mind is that of automobile performance. Automobiles are complex assemblies of mechanical, electrical, electronic and (these days) computational devices. We have various ways of measuring the overall performance of these assemblages.

Think of acceleration as a measurement of an automobile’s performance. It’s certainly not the only measurement; but it is real, and that’s all that concerns me. As measurements go, this is a pretty straightforward one. There’s no doubt that automobiles do accelerate and that one can measure that behavior. But, just where in the overall assemblage is one to locate that capability?

Does the automobile have a physically compact and connected acceleration system? No. Given that acceleration depends, in part, on the mass of the car, anything in the car that has mass has some effect on the acceleration. Obviously enough the engine has a much greater effect on acceleration than the radio does. Not only does the engine contribute considerably more mass to the vehicle, but it is the source of the power needed to move the car forward. The transmission is also important, but so is the car’s external shape, which influences the air resistance (drag) it must overcome. And so forth.

Some aspects of the automobile clearly have greater influence on acceleration than others. But, as a first approximation, it seems best to think of acceleration as a diffuse measure of the performance of the entire assemblage. As I’ve already indicated, there’s nothing particularly mysterious about what acceleration is, why it is important, or how you measure it. Nor, for that matter, is there any particular mystery about how the automobile works and how various traits of components and subsystems affect acceleration. This is all clear enough, but that doesn’t alter the fact that we cannot clearly assign acceleration to some subsystem of the car. Acceleration is a global measure of performance.

And so it seems to me that if intelligence is anything at all, it must be a global property of the mind/brain. You aren’t going to find any intelligence module or intelligence system in the brain. IQ instruments require performance on a variety of different problems such that a wide repertoire of cognitive modules embedded in many different neurofunctional areas are recruited. The IQ score measures the joint performance of all those modules, each with its own mechanism, and as such it is a very abstract property of the system.

It is one thing to say that IQ is a measure of human cognitive ability, albeit a problematic and flawed one. It is quite something else to equate cognitive ability with a number, the IQ score, as though that somehow captures what intelligence is. That is reification.

Consider this remark that Steven Pinker made in a debate with Scott Aaronson on AI:

…discussions of AI and its role in human affairs — including AI safety — will be muddled as long as the writers treat intelligence as an undefined superpower rather than a mechanism with a makeup that determines what it can and can’t do. We won’t get clarity on AI if we treat the “I” as “whatever fools us,” or “whatever amazes us,” or “whatever IQ tests measure,” or “whatever we have more of than animals do,” or “whatever Einstein has more of than we do” — and then start to worry about a superintelligence that has much, much more of whatever that is.

Once you reduce intelligence to a number, IQ, it becomes easy to treat it as a superpower that can be scaled up as high as you wish simply by making the underlying substrate, whether an organic brain or a digital computer, larger.

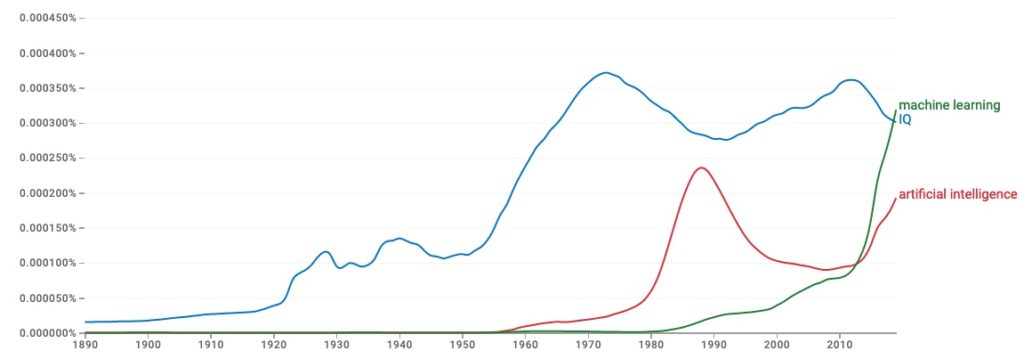

Let’s examine one last chart:

It depicts the prevalence of the terms “IQ,” “artificial intelligence” and “machine learning.” We see “artificial intelligence” arising in the mid-1950s (the term was coined for a conference at Dartmouth), rising to a peak in 1990, then dropping, and rising again in 2010. The drop corresponds to the so-called “AI Winter,” when research and commercial activity went into eclipse because the systems did not achieve the performance they needed for viable commercial operation on a large scale. “Machine learning” starts in about 1980 and then catches and surpasses “artificial intelligence” at about 2010.

While I don’t see any interesting relationship between the term “IQ” and the other two, I kept it on the chart because that’s what this section of the essay is about, the reification of the idea of intelligence in the idea of an intelligence quotient, IQ. In the next section we’ll take up the reification of intelligence in the digital computer.

Finally, as a transition to a discussion of artificial intelligence, consider this passage from a blog post that Eliezer Yudkowsky made in 2008:

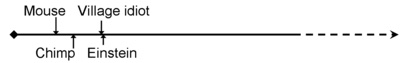

When I lecture on the Singularity, I often draw a graph of the “scale of intelligence” as it appears in everyday life:


But this is a rather parochial view of intelligence. Sure, in everyday life, we only deal socially with other humans—only other humans are partners in the great game—and so we only meet the minds of intelligences ranging from village idiot to Einstein. But what we really need to talk about Artificial Intelligence or theoretical optima of rationality, is this intelligence scale:

For us humans, it seems that the scale of intelligence runs from “village idiot” at the bottom to “Einstein” at the top. Yet the distance from “village idiot” to “Einstein” is tiny, in the space of brain designs. Einstein and the village idiot both have a prefrontal cortex, a hippocampus, a cerebellum…

That first arrow is reasonable enough. One can certainly use IQ as a vehicle for comparing the village idiot to Einstein. [I know, he doesn’t talk of IQ as such, but look down a couple of paragraphs if you must.] But the second arrow is deeply problematic, for it adds a mouse and a chimp to the line. If we want to use IQ as a device for comparing the intelligence of mice and chimps with that of humans, and with each other, then we have to administer the same test to them as we do to humans, which is absurd. If we throw away the test, however, and just keep the number, then it’s easy to add numbers to the left and to the right and match them up with appropriate creatures.

Just what does Yudkowsky place to the right?

Toward the right side of the scale, you would find the Elders of Arisia, galactic overminds, Matrioshka brains, and the better class of God.

Somewhere off to the right, but not quite so far out, we have the superintelligence that Yudkowsky and others fear will exterminate the human race.

Now, you might object to this characterization by pointing out that Yudkowsky carefully places “scale of intelligence” in scare quotes, suggesting that he has some reservations about the idea, as well he should. However, as far as I can tell, discussions of AGI (artificial general intelligence), super-intelligence, and AI Doom, generally treat intelligence as a scalar variable. Whatever reservations Yudkowsky and others have about the practice, they have nothing else to offer.

But I’m getting ahead of myself. Before any further discussion of AI Doom I want to consider artificial intelligence.

Computing the Mind

I want to set up that discussion with a well-known conceptual parable proposed by Herbert Simon, who got a Nobel Prize in economics more or less for his work in AI – I know, I know, that’s not what the Nobel citation says; let’s say I’m giving it a Straussian reading, to borrow a phrase from Tyler Cowen. Simon published this thought experiment in Chapter 3 of his classic collection of essays The Sciences of the Artificial (1981). He asks us to imagine an ant moving on the beach:

We watch an ant make his laborious way across a wind- and wave-molded beach. He moves ahead, angles to the right to ease his climb up a steep dunelet, detours around a pebble, stops for a moment to exchange information with a compatriot. Thus he makes his weaving, halting way back to his home. So as not to anthropomorphize about his purposes, I sketch the path on a piece of paper. It is a sequence of irregular, angular segments – not quite a random walk, for it has an underlying sense of direction, of aiming toward a goal.

After introducing a friend, to whom he shows the sketch and to whom he addresses a series of unanswered questions about the sketched path, Simon goes on to observe:

Viewed as a geometric figure, the ant’s path is irregular, complex, hard to describe. But its complexity is really a complexity in the surface of the beach, not a complexity in the ant. On that same beach another small creature with a home at the same place as the ant might well follow a very similar path.

The sketch of the ant’s path is a record of its behavior. As such, it reflects both the structure of the beach and the ant’s behavioral capacities. But if all we have available to us is that sketch, then we have no way of figuring out what to assign to the ant and what to the beach.

I propose that we think about the history of AI in terms of the beach and the ant, that is to say, in terms of the kinds of problems it has tackled and the means it has used to tackle them. The concept of intelligence is notoriously vague and hard to define. After considering a number of conceptions Shane Legg adopted the following definition in his dissertation, Machine Super Intelligence (2008, p. 6):

Intelligence measures an agent’s ability to achieve goals in a wide range of environments.

Thus we can think of Simon’s ant as the agent and the beach as its environment. We could also have a chimpanzee (agent) in the jungle, a human being (agent) anywhere on earth – and even in outer space in a very limited way.

But it is just as easy to think of a computer system as the agent. In the early days, from the mid-1950s into the seventies, the environment might be taken from geometry, algebra word problems, or even chess – see Wikipedia’s article on the history of AI. Chess has remained a constant in AI research to this day. In the witty formulation of John McCarthy, who coined the term “artificial intelligence,” chess is the Drosophila of AI.

This Drosophila of AI is a most interesting creature. Physically the chess world is very simple, six kinds of pieces (pawn, knight, rook, bishop, queen, king) and an eight-by-eight board. The physical appearance of the pieces is irrelevant; one could easily get by with letters: P, N, R, B, Q, K. The board is a simple matrix. The fundamental rules of play are simple as well.

The fact is, from an abstract point of view, chess is no more difficult and complex than tic-tac-toe, a game easily mastered by a child. Given a rule for halting play when no pieces have been captured for a fixed number of moves, chess is a finite game of perfect information, just like tic-tac-toe. The tic-tac-toe tree [a way of listing every possible game] is relatively small while the chess tree is huge. It’s the size of the chess tree that makes the game challenging even for the most intelligent and experienced adult. And size is something you can deal with through sheer computing power: more memory, faster compute cycles.
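The difference between the two trees is one of size, and size can be counted. As a minimal sketch in Python (the function names are hypothetical, not taken from any game-playing library), the following walks the entire tic-tac-toe tree and counts every distinct game, treating a game as finished once a player completes a line or the board fills. No comparable walk is feasible for chess, for which the commonly cited estimate, due to Claude Shannon, is on the order of 10^120 possible games.

```python
# The eight winning lines on a 3x3 board, squares numbered 0..8 row by row.
WIN_LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),  # rows
    (0, 3, 6), (1, 4, 7), (2, 5, 8),  # columns
    (0, 4, 8), (2, 4, 6),             # diagonals
]


def winner(board):
    """Return 'X' or 'O' if that player has completed a line, else None."""
    for a, b, c in WIN_LINES:
        if board[a] is not None and board[a] == board[b] == board[c]:
            return board[a]
    return None


def count_games(board, player):
    """Count every complete game reachable from this position."""
    if winner(board) is not None or all(square is not None for square in board):
        return 1  # a leaf of the game tree: someone has won or the board is full
    total = 0
    next_player = "O" if player == "X" else "X"
    for square in range(9):
        if board[square] is None:
            board[square] = player  # try the move
            total += count_games(board, next_player)
            board[square] = None    # undo it before trying the next move
    return total


print(count_games([None] * 9, "X"))  # 255168
```

On an ordinary computer this finishes in seconds and reports 255,168 games. Nothing about the procedure would change for chess; only the size of the tree does, and that is what puts exhaustive search out of reach.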

It is useful, though not entirely accurate, to think of the domains investigated in AI’s first two or three decades as roughly comparable to chess (I’ve written a number of posts on chess and AI). They’re not all finite in the way chess is, but they tend to involve physically simple worlds and highly rationalized domains. Perhaps the deepest problem faced by these systems is something called combinatorial explosion. How many different strings can you make from two letters, say A and B, using each letter once? Two: AB, BA. How many with three letters, A, B, C? ABC, ACB, BAC, BCA, CAB, CBA. That’s six. With four letters we have 24 orderings. And so on. The number of orderings rises steeply as the number of letters gets larger. That’s combinatorial explosion.
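The counting above can be checked mechanically. This short Python sketch uses the standard library’s itertools.permutations to list the orderings of A, B, C, then prints n! (the number of orderings of n distinct letters) for n from 2 to 12, confirming the formula by brute-force enumeration only for the small cases where that is cheap.

```python
from itertools import permutations
from math import factorial

# Every ordering of three letters, spelled out.
orderings = ["".join(p) for p in permutations("ABC")]
print(orderings)  # ['ABC', 'ACB', 'BAC', 'BCA', 'CAB', 'CBA']

# For n distinct letters there are n! = n x (n-1) x ... x 1 orderings.
for n in range(2, 13):
    letters = "ABCDEFGHIJKL"[:n]
    if n <= 7:
        # Small enough to enumerate and check the formula directly.
        assert sum(1 for _ in permutations(letters)) == factorial(n)
    print(n, factorial(n))
```

By twelve letters the count has already passed 479 million, which is the explosion in miniature.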

One can cope with combinatorial explosion by using more computing power – these days it’s simply called compute. But in the kinds of problem domains AI tackled, the number of combinations increased at a rate far larger than could be handled by throwing more compute at the problem.

This problem became particularly acute in dealing with problems such as object recognition and language, both speaking and understanding. Large complex computer systems were unable to function as well as a human four-year-old. These problems did not yield to the symbolic techniques (as they were called) used in AI’s early decades.

But they eventually proved tractable using numerical and statistical techniques, which work quite differently from the combinatorial search used in symbolic computing. While these techniques had been around since the early days, they didn’t yield practical results until massive amounts of compute became available in the new millennium. The breakthrough success came in the area of machine vision with AlexNet in 2012. It was designed by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton. A decade later ChatGPT was posted to the web and the rest, well, we’re still reeling with the implications.

Note, however, that language is a symbolic medium if ever there was one. How could it yield to computational techniques whose breakthrough success came in the domain of visual object recognition? As a thought experiment, imagine that we transform every text string into a string of colored dots. We use a unique color for each word and are consistent across the whole collection of texts. What we have then is a bunch of one-dimensional visual objects. You can run all those colored strings through a transformer engine and end up with a model of the distribution of colored dots in dot-space. That model will be just like (isomorphic, to use a technical term) a language model.
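The colored-dot thought experiment can be made concrete at toy scale. The Python sketch below works under loud assumptions: a hypothetical color_for function stands in for the word-to-color assignment, and simple bigram counts (how often one symbol follows another) stand in for the transformer, which is far beyond a short example. Because each word gets exactly one color and each color exactly one word, the statistics gathered over dots are the word statistics with the labels swapped.

```python
from collections import Counter

corpus = ["the cat sat on the mat", "the dog sat on the log"]


def color_for(index):
    """Hypothetical word-to-color scheme: a distinct 24-bit RGB hex code per index.

    Multiplying by an odd constant modulo 2**24 is one-to-one, so indices below
    2**24 never collide; it merely scatters the colors across the spectrum.
    """
    return "#{:06x}".format((index * 2654435761) % (1 << 24))


def bigram_counts(sequences):
    """A toy stand-in for a model: how often each symbol follows each other."""
    counts = Counter()
    for seq in sequences:
        counts.update(zip(seq, seq[1:]))
    return counts


vocab = sorted({word for line in corpus for word in line.split()})
word_to_color = {word: color_for(i) for i, word in enumerate(vocab)}

word_seqs = [line.split() for line in corpus]
dot_seqs = [[word_to_color[w] for w in seq] for seq in word_seqs]

word_model = bigram_counts(word_seqs)
dot_model = bigram_counts(dot_seqs)

# Relabel the word model's entries with colors: it matches the dot model exactly.
relabelled = Counter(
    {(word_to_color[a], word_to_color[b]): n for (a, b), n in word_model.items()}
)
print(relabelled == dot_model)  # True
```

Nothing in the bigram counts depends on what the symbols are, only on how they are distributed; the same holds, in principle, for a much richer model trained on the dot strings.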

That such a thing is possible strikes me as quite profound, but this is not the place to entertain that discussion, nor am I the one to do the entertaining. That calls for someone with mathematical skills I do not have. I want to return to the issue of scale.

More compute has always been important in the pursuit of artificial intelligence. In the early decades compute was devoted to taming combinatorial explosion, but at some point, it failed. These days compute is being devoted to numerical calculation. Who knows how far that will take us?

At the same time it is easy enough to make numerical estimates of the computing power of the human brain. Count the number of neurons, 86 billion, and the number of connections each neuron has, say 7,000 to 10,000 each. Do the math. It’s simple. Compare that number with the compute we have for machines. Now we can estimate when the machines will catch up with and surpass the power of the human brain in raw compute. Finally, since the reification of intelligence in IQ scores transforms intelligence into a scalar quantity, we can also predict when computers will surpass humans in intelligence.
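Here is that back-of-the-envelope arithmetic spelled out as a Python sketch. The neuron and connection figures are the ones quoted above. Treating each connection as a unit of compute is itself a simplification, and the years_to_parity function, its doubling-time model, and the machine figure fed to it are hypothetical placeholders, included only to show the shape of the extrapolation, not to endorse it or to report any real hardware number.

```python
import math

NEURONS = 86_000_000_000  # figure quoted in the text
low = NEURONS * 7_000     # low end of the quoted connections-per-neuron range
high = NEURONS * 10_000   # high end of the quoted range
print(f"{low:.2e} to {high:.2e} connections")  # 6.02e+14 to 8.60e+14 connections


def years_to_parity(brain_figure, machine_figure_now, doubling_time_years):
    """Naive extrapolation (hypothetical model): years until a machine figure
    that doubles every doubling_time_years reaches brain_figure."""
    if machine_figure_now >= brain_figure:
        return 0.0
    return doubling_time_years * math.log2(brain_figure / machine_figure_now)


# Purely illustrative inputs: a machine at one-thousandth of the high estimate,
# doubling every two years, "catches up" in about twenty years.
print(round(years_to_parity(high, high / 1000, 2.0), 1))  # 19.9
```

Change the hypothetical inputs and the crossover date moves accordingly, which is precisely the kind of tidy, single-number forecast questioned below.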

Color me skeptical, deeply skeptical. The whole scheme is way too reductionist. With all respect to Rich Sutton’s famous essay, The Bitter Lesson, which argues for raw compute, the more the merrier, the importance of the specific computational operations performed by AI engines cannot be ignored. And the scheme badly underestimates the unpredictability of long-term technical evolution. But that is how students and prophets of AI Doom, such as Eliezer Yudkowsky, reason about the extinction of humanity in the not-so-distant future.

Deconstructing AI Doom

The AI that goes berserk is a familiar creature in science fiction. If you are a thinker of a certain kind, you could easily trace that creature back to any of a number of Ancient Texts. I am not a thinker of that kind.

Therefore I will be content to go back no farther than 1920, when the Czech writer Karel Čapek wrote a play entitled R.U.R., which stands for Rossumovi Univerzální Roboti (Rossum’s Universal Robots). Notice that word, roboti, the plural of robot, a word that Čapek coined for the play from robota, meaning forced labor. By 1923 R.U.R. had been translated into thirty languages. It was successful throughout Europe and North America. The story? You guessed it, the robots revolt and destroy the human race.

Seven years later, in 1927, Fritz Lang premiered a science fiction film, Metropolis, in which laborers work underground operating the machines powering the city of Metropolis. The plot is too convoluted to summarize, but it involves a robot, modeled after a beautiful woman named Maria, who urges the workers to revolt. They destroy the machines, but somehow the real Maria manages to save the day, sorta.

Two decades later a young Japanese man, Osamu Tezuka, saw a still image from the film in a magazine – we don’t know which one – and was inspired to write a manga, Metropolis. The manga has a convoluted plot with many characters. There are electromechanical robots living and working underground, but there is also an artificial being (jinzo ningen in Japanese) named Michi. Michi is made of organic cells and, while he/she has no superpowers and is not omniscient, he/she can be either male or female. Michi leads a revolt of the robots against the humans, which ultimately fails because – well, it’s complicated and doesn’t matter for my argument.

A couple of years later Tezuka took the Michi character and transformed her/him into Astro Boy, who is a kindly robot boy with incredible physical powers. Astro became one of the most popular and beloved figures in Japanese culture in a series of manga Tezuka published in the 1950s and 1960s. The stories are set in a future where there are many kinds of robots living among humans, often in subservient roles. Consequently, civil rights for robots is one of the major themes of these stories, with Astro championing the cause of robots. While there are stories about robots going berserk and attacking humans, they never succeed. Astro always saves the day.

More generally, as far as I know, rogue AIs and robots are not a preoccupation of Japanese popular culture. Look at the image in this tweet, which is the cover of a document entitled, AI White Paper: Japan’s National Strategy in the New Era of AI.

That does not look like the Japanese are preparing to fend off an attack from Skynet.

My point, then, is simply that fear of an AI apocalypse does not follow naturally from the development of AI. There is a cultural component. I do not know why the Japanese think about the future of computing in the way they do, but you might look to Frederik Schodt’s excellent Inside the Robot Kingdom: Japan, Mechatronics, and the Coming Robotopia for insight. Nor, for that matter, do I know why America seems to have a weakness for apocalyptic thinking. But I am sure that we must look to culture if we are to begin to understand the attraction that apocalyptic AI has for the contemporary rationalist subculture.

Consider these remarks Tyler Cowen made in conversation with Russ Roberts:

But also, I mean, maybe this argument is a bit of an ad hominem, but I do take seriously the fact that when I ask these individuals questions like, ‘Have you maxed out on your credit cards?’ Or, ‘Are you short the market? Are you long volatility?’ Very rarely are they.

So, I think when we’re framing this discourse, I think some of it can maybe be better understood as a particular kind of religious discourse in a secular age, filling in for religious worries that are no longer seen as fully legitimate to talk about. And, I think that perspective also has to be on the table to deconstruct the discourse and understand it is something that doesn’t actually seem that geared to producing constructive action or even credibly consistent action on the part of its proponents.

So, let us deconstruct the discourse of AI Doom, let us give it a Straussian reading.

Deconstruction as Derrida originally meant the term entails more than critique, unmasking, or dismantling. It is a very specific kind of dismantling. To deconstruct a text is to stress its focal argument in a way that causes the argument to implode upon itself. To use another metaphor, deconstruction is a way of hoisting an argument on its own petard.

For that we must once again look to Eliezer Yudkowsky, whom Time Magazine has recently named one of the top 100 AI people in the world. While I disagree with Yudkowsky about the inevitability of AI Doom, we must credit him with the foresight of taking the dangers of AI out of science fiction and into the real world. It is one thing to fear job loss to machines; that fear has been with us for decades. It is quite something else to fear that the machines will destroy us, not in the movies, but in real life, right here, and if not now, then certainly tomorrow.

We must also credit him with a fundamental insight, that “we do not know how to get goals into a system.” Given an artificial intelligence that has cognitive powers comparable to those of human beings, it cannot be controlled from the outside, any more than a human being can. We can attempt to manipulate it, and we may succeed, but absolute control is impossible, as it is in humans. Totalitarian political regimes attempt such control, and they may succeed, more than we would like. But they will ultimately fail. They can torture and murder individuals if necessary, and they can exterminate groups, but they cannot control them. Ultimately, the people will revolt, like the robots in Čapek’s R.U.R.

On the face of it, belief in AI Doom is about control. This manifest desire to make AIs tractable, I suggest, is in fact a manifestation of a desire to live in a predictable world that is subservient to our needs and wants. Think of it: a super-intelligent AI so all-knowing and powerful that it is capable of destroying humankind. It is, for all intents and purposes, a god.

The point is NOT to somehow become Masters of the Universe by controlling this beast – that game is for stock traders and hedge fund managers – but simply to guarantee that we are living in a closed world. The super-intelligent AI makes the world into a closed system. If we can align it with our values, then the world is saved and we are protected by this all-powerful AI.

Wouldn’t that be Paradise?

Well, as long as we are spinning fantasies about the future, why not stop at Paradise? Why imagine that this benevolent deity turns malevolent and destroys us all? The answer to that question has two components: one is about the irrational psychology of individuals, the other about why individuals bond together in groups.

While I am quite comfortable speculating about demons in the minds of characters in novels, plays, and movies (I was trained as a literary critic), I am reluctant to make such speculations about real people whom I have never met. All I will say on that score is that there is plenty of free-floating anxiety in the world. In a classic 1937 study, Caste and Class in a Southern Town, John Dollard argued that Southern whites focused their anxiety on blacks. Many Rationalists have, for whatever reason, chosen to invest their anxiety in the imaginary computers of the future, an investment that does little harm in the present even if the future it imagines looks dim.

Imagine that you are a young nerd looking for companionship (as was Adrian Monk in his youth), people to talk to and hang out with. You walk through your village and you see two clubhouses, the Utopians and the Doomers. The Utopians fly a flag that says, “Have a blast. It’ll be wild!” The Doomers’ flag reads, “We’ll all be dead before long.”

“I don’t like that at all,” you think to yourself, “I’m going to hook up with the Utopians.” And so you do. Things go well for a while, but then it all just goes flat. Dreams of utopia scatter in all directions and no one ever does anything.

“Oh, well, I might as well give the Doomers a chance.” The clubhouse is buzzing with activity, 24/7/365. People are debating the fine points of AGI, super-intelligence, and the transition from one to the other: gradual take-off or FOOM! To infinity and beyond. People are making plans for meet-ups, attending conferences about effective altruism and cryptocurrency (though feeling a bit sheepish in the wake of the FTX scandal), and meeting with venture capitalists about incubator space for their current Big Idea.

And so Doomerism grows and grows. In the language of game theory, the Rationalist/Doomer belief system is a much more effective Schelling point than AI Utopia, which smacks of Aquarian Age Boomer hippie nonsense. From Wikipedia:

The concept was introduced by the American economist Thomas Schelling in his book The Strategy of Conflict (1960). Schelling states that “(p)eople can often concert their intentions or expectations with others if each knows that the other is trying to do the same” in a cooperative situation (at page 57), so their action would converge on a focal point which has some kind of prominence compared with the environment.

You can’t get more prominent than the imminent demise of humankind.

With that in mind, we’re in a position to appreciate Yudkowsky’s brilliance as a social engineer. Back in the 2000s he wrote a long series of posts in which he explained his philosophy of Bayesian rationality. These have become known as the Sequences; the Yudkowsky passage I quoted earlier is from one of them. Then, between 2010 and 2015, Yudkowsky wrote Harry Potter and the Methods of Rationality, some 660,000 words in which he realized his beliefs through the characters and settings of the Harry Potter universe. He has thus surrounded his beliefs about AI with a whole worldview.

What more could you want?

“But where’s it going?” you ask. I don’t know. AI is here to stay. The course of the technology itself is deeply and irreducibly unpredictable, and so must be its effects on human society. However skeptical I am about the Rationalist enterprise and its focus on the existential risk posed by AI, I believe that the Rationalists have earned a seat at the table. They too are here to stay.