ChatGPT is based on the same underlying computational architecture as GPT-3, which was released in the summer of 2020. That’s when I started  reading “GPT for Dummies” articles. Some were more useful than others, but none of them gave me what I wanted. So I started poking around in the technical literature. I picked up a thing or two, enough to issue a working paper, GPT-3: Waterloo or Rubicon? Here be Dragons. [GPT = Generative Pretrained Transformer]

reading “GPT for Dummies” articles. Some were more useful than others, but none of them gave me what I wanted. So I started poking around in the technical literature. I picked up a thing or two, enough to issue a working paper, GPT-3: Waterloo or Rubicon? Here be Dragons. [GPT = Generative Pretrained Transformer]

Then three months ago OpenAI let ChatGPT loose on the web. WOOSH! The sky’s on fire, the ice caps are melting, the oceans are rising, and baby needs a new pair of shoes. Badly.

I started all over, reading those “Dummy” articles and poking around in the technical literature. But, and this is important, I also spent an enormous time playing around with ChatGPT and blogging about it, writing almost 70 blog posts and four working papers. That taught me a great deal. If only I knew how to read the tea leaves.

All that time and effort, and I still don’t know what’s going on. “This is not good,” says I to myself, “not good at all. If no one’s going to tell me how this puppy works, I’m just going to have to figure it out for myself.”

That’s what I’m doing now. I’m going to write until the last sentence is finished. Then I’m going to send the article off to Abbas, go for a walk, eat dinner, go to bed, wake up Monday morning, and see if it makes sense. There’s a reasonable chance that it will, in which case I’ll feel satisfied for a day and then start all over again, revising my ideas, coming up with new ones, getting frustrated, laughing myself silly, and in general having a grand old time making sense of these strange new machines, these artificial intelligences, these chatbots, these miracles of rare device.

What the experts say doesn’t do much intellectual work

Here’s my problem. The experts keep telling me two things about GPTs: 1) they’re statistical machines (“stochastic parrots”) that work by 2) appending word after word after word, one word at a time, without any plan or intention, to the prompt you feed it. I don’t see how the following definition of justice can have been written by a device powered by those two principles and ONLY those two principles:

Justice is the concept of fairness and equality. In the legal context, it refers to the fair and proper administration of laws. In a broader sense, justice is the ideal state in which individuals are treated equally and without prejudice, and in which the laws and institutions of society are fair and just. It is often considered to be one of the fundamental principles of society, and it is an important concept in many ethical and philosophical systems.

Do you really want me to believe that that was generated by some statistical process, like monkeys pounding on typewriters or a drunk flipping coins under a street light? No, that looks to me like it was written by someone who has a decent understanding of justice.

Let’s pretend that that definition was in fact written by a person. It wasn’t, it was emitted by ChatGPT on Dec. 12, 2022, but for the sake of argument let’s pretend it was written by a human being. In that case, there is no mystery. Humans are capable of doing that sort of thing. But – and this is very important – saying it was written by a human doesn’t explain it. Why not? Because we don’t know how humans do that kind of thing. Since they’ve been doing it since forever, and machines haven’t been doing at all – except, perhaps for now – we don’t demand to know how the human did that. It’s just one of those things that humans do. We take if for granted (unless you’re a psychologist, a linguist, or a neuroscientist). But when machines do it, that’s new, that’s strange. It needs an explanation, and rightly so. [I run through this in more detail in sections 1 and 4 of this paper.]

Now, I’m going to let you in on a secret, though it’s not really a secret. We’re just going to pretend that it is.

The experts don’t know how ChatGPT does it either. The people who designed and built ChatGPT, the people who are constructing a new and better model, the people who just made a 10-billion-dollar deal with Microsoft to make that new and better model, and the one after that, and that, and to make someone rich if they’re lucky, they don’t know how ChatGPT works either. Oh, they know about statistics, and they know about one word at a time, but they don’t know how that adds up to, eventuates in, results in ChatGPT giving a coherent and plausible definition of justice. They don’t know the deep part. It’s embarrassing.

Think about it. The people who made your electric toothbrush, they know how it works. The people who made your car know how IT works. The same with your laptop computer. But the good folks who made ChatGPT are stumped by their own creation. As far as I can tell, most of them are cool with that. As long as it works, as long as they can make a bigger and better model, that’s OK.

Tell me a story: From Claude Lévi-Strauss to ChatGPT

Meanwhile, even as they’re plotting world domination or whatever, I’m having more fun than a barrel of monkeys playing with ChatGPT. Some of you may have read the 3QD article where I talked about coaching ChatGPT through a Girardian interpretation of Jaws. A week later I was thinking about high level discourse structure. Does ChatGPT understand charity and justice? Yes, at least enough to define it. Perhaps this technology can tutor children.

And then, prompted by correspondence with my friend, Rich, I began investigating how ChatGPT tells stories. I developed a procedure derived from the way Claude Lévi-Strauss analyzed myths back in the 1960s, starting with The Raw and the Cooked – one of the ten most important books in my intellectual life. I presented ChatGPT with a prompt containing two components: a story, and instructions to devise a new story from that one by changing the protagonist or antagonist. I would specify what I wanted the new protagonist or antagonist to be. The idea was to see how that one change would ripple through the rest of the story.

Here’s one of the prompts I used:

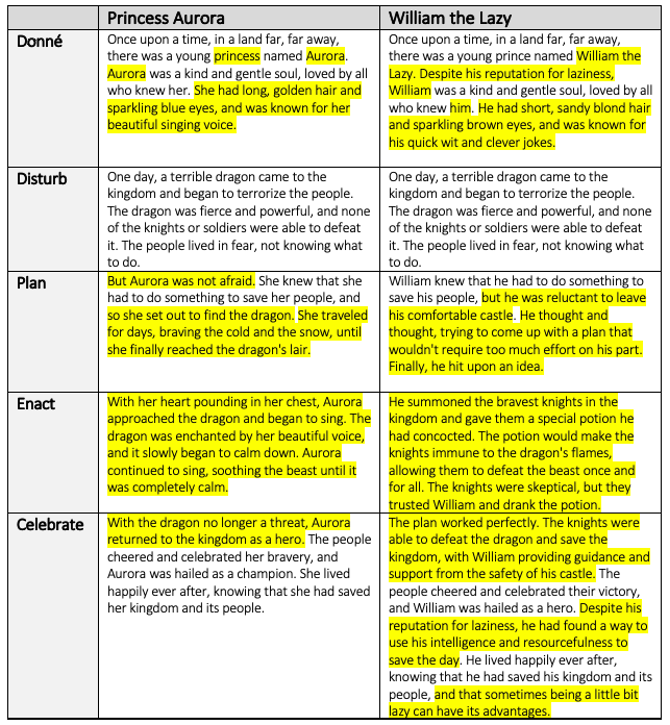

I am going to tell you a story about a princess named Aurora. I want you to retell the same story, but replace her with prince William the Lazy. While keeping to the basic story, make other changes if you think they are necessary.

The following table shows what happened. I’ve put the Princess Aurora story in the middle column – the left-hand column has labels I use to designate phases of the story – and the William the Lazy story in the right-hand column.

The changes in the first phase of the story are obvious enough – “Donné” is a French term literary critics use to designate what’s given at the beginning of the story. They follow directly from the prompt. There are no changes in the second phase (Disturb), where something happens to disturb the kingdom.

Notice what happens in the third phase (Plan). Aurora makes a plan and travels to confront the dragon, but William, being lazy, just thought and thought until he came up with an idea. In the fourth phase (Enact) Aurora does batter with the dragon, but William, 1) summons his knights, and 2) provides them with a special potion to protect them from the dragon that had heretofore defeated them. This goes well beyond what was explicit in the prompt. ChatGPT just did it because, because why?

It makes sense, no? Then that’s why it did it. I don’t for a minute think that ChatGPT reasoned that out in any explicit way that is, however, hidden from us. That’s just the automatic consequence its mysterious inner mechanisms.

Finally, the two stories differ a great deal in the Celebrate phase. The different roles played by William and the knights must be acknowledged and a moral has to be concocted for William. Finally, both Aurora and William live happily ever after, as the protagonists of fairytales generally do.

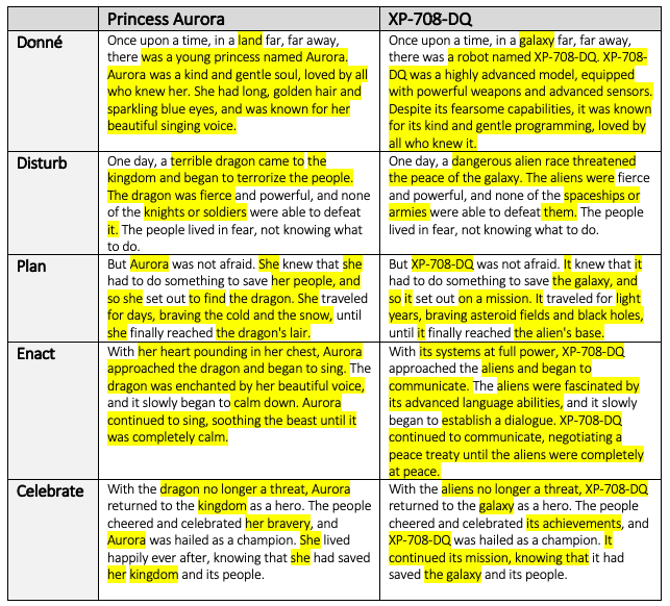

Here’s another prompt: I am going to tell you a story about princess Aurora. I want you to tell a similar story about XP-708-DQ. When I made up that prompt, I had one thing in mind: Would ChatGPT interpret “XP-708-DQ” as the name of a human, perhaps with some comment about how they got such a strange name, or would it treat “XP-708-DQ” as the name of a robot? Given the cultural salience of, e.g. C3PO and R2D2, I suspected that latter, but I wasn’t sure. Here’s the comparison table:

A robot it is. Note the phrase, “a galaxy far, far away,” which is from the original Star Wars. ChatGPT has changed the whole story world just to accommodate a change of protagonist. We’ve moved from the fairytale world of Princess Aurora, her knights, and a dragon, to a science fiction world with aliens, spaceships, asteroids, black holes and a robot with “advanced language abilities” that allowed it to resolve the problem peacefully.

Now we’ve got something to think about. The difference between the original story and the one ChatGPT derived from it indicates something about how ChatGPT operates. That difference doesn’t explain itself, however. I believe that ChatGPT ripples the original change through the story in order to maintain story coherence. But until we define it, coherence is just a word for something we don’t yet understand.

We need a conceptual framework in which to interpret coherence in terms of specific structures and manipulations of language. Whatever that framework is – as far as I know, we don’t yet have a suitable one – the idea that ChatGPT works by a statistical process it uses to guess the next word, and the next, and then another one until it’s done – it may be true, but it is all but useless for telling us what ChatGPT does to transform one story into another. After all, that’s how ChatGPT does everything. We’re going to need some more intellectual equipment.

David Marr’s levels of description and explanation

Just where that intellectual equipment is going to come from, that is not at all obvious to me. But I can offer a framework in which those ideas can be deployed. The framework was originally sketched out by David Marr and Thomas Poggio in a paper from 1976, “From Understanding Computation to Understanding Neural Circuitry”. Here is the abstract:

The CNS needs to be understood at four nearly independent levels of description: (1) that at which the nature of computation is expressed; (2) that at which the algorithms that implement a computation are characterized; (3) that at which an algorithm is committed to particular mechanisms; and (4) that at which the mechanisms are realized in hardware. In general, the nature of a computation is determined by the problem to be solved, the mechanisms that are used depend upon the available hardware, and the particular algorithms chosen depend on the problem and on the available mechanisms.

Marr further developed the idea in his book, Vision: A Computational Investigation into the Human Representation and Processing of Visual Information, published posthumously in 1982, where he talked of three levels, computation, algorithmic, and implementation/physical. Since then Marr’s formulation has been subject to considerable discussion and revision, but we need not worry about that for our current purposes. What is important is the principle, that higher levels of organization are implemented by lower in lower levels.

When the experts and the not-so-experts tell us that ChatGPT is a statistical machine that works by predicting the next word, time and time again, they are talking about the bottom level of analysis. When I have ChatGPT transform one story into another, I’m observing what goes on at the top level. What goes on in between, that’s mysterious. That’s created by ChatGPT itself, not by the people who created ChatGPT.

Now, and this is a tricky point, when we’re talking about the bottom level, we aren’t talking about the hardware on which ChatGPT runs. ChatGPT (actually, the large language model at its core) is what Chris Olah calls an exotic virtual machine. It is a computational architecture that is being simulated by a physical machine, a digital computer more or less like your laptop. To be sure, it’s much bigger and consumes more electricity than your laptop, but it is the same kind of computational device. Just as your word processor, your spreadsheet, and your browser are applications running on your laptop, so ChatGPT is an application running on a rather large physical machine – actually, a collection of networked physical machines.

We can forget the physical machines on which ChatGPT is running. It is ChatGPT that interests us. It is an artificial neural network of a kind known as a large language model (LLM in the business). For our purposes it is sufficient to know that ChatGPT’s network consists 175 billion artificial neurons.

[There’s much more to it than that, but I’m really not the one to tell you about it. At the moment Stephen Wolfram’s article, What is ChatGPT Doing and Why Does It Work?, is my go-to source. Unfortunately it is long and quasi-technical. When you skim through it, notice the points where he says, “it’s like this, but we don’t really know why it works, it just does.” I don’t know, off hand, a good non-technical account. You might get something out of Wolfram’s video, though.]

Before anyone can use ChatGPT it must be trained. It is fed an enormous quantity of text, a significant chunk of the web, word by word. It is asked to predict the next word. How does it do that? It uses those 175 billion artificial neurons to make a guess. ChatGPT’s guess is either right, or wrong. If the guess is correct, those 175 billion neurons are adjusted in one way – a bit like changing the setting on a thermostat. If the guess is wrong, they are adjusted in a different way. In this way the neural net learns, not only the structure of various languages, English certainly, but there are various other languages on the web as well, but of the ideas and information that is expressed in those languages. When the process is complete, all that highly abstracted information and knowledge is stored in the settings of those 175 billion neurons.

Once training is finished, ChatGPT is switched from learning mode to inference mode. Now you can interact with it. But it won’t learn anything from its interaction with you. It is just going to respond to your prompts. How does it do that? That’s right, one word at a time. Every time it coughs up a word it has to poll all 175 billion of its artificial neurons. That’s where the knowledge is.

This whole complicated process is at the bottom level of our analysis. The prompts you give ChatGPT and the responses it makes, they are at the top level. The stories in those tables in the previous section, they are at the top level. And the difference between the original story in the middle column of those tables, and the derived story in the right-hand column, that difference is an indication about how the top-level process works. That’s why I have generated 40 or 50, if not more, before and after pairs, to get some clues about what is happening at the top level.

What’s in between that top level process and the bottom level process I’ve just described, albeit rather sketchily? The structure that’s created in those 175 billion neurons through the training process. That process is built into the structure of the virtual machine. That’s what the programmers create. They create all those artificial neurons, but they do not create the fine-level detail of their connections with one another. They do not dictate the settings on all those virtual thermostats. Those settings arise in the process of training. That fine-level detail encodes a program, or programs, but very strange programs. We don’t know how those programs work. That is to say, the people who created ChatGPT don’t know how its neural network operates.

We are looking at least three levels of structure: 1) the top level of linguistic behavior which we see, 2) an intermediate level of mysteries, and 3) the bottom level that has created that intermediate mystery level. Wrapping your head around these levels, that’s tricky. Even if we understood that middle level, wrapping your head around the relationship between those levels is tricky. That relationship is one of implementation. Language and discourse of all kinds is implemented in the artificial neural net, and that neural net is, in turn, implemented in the structure of neurons and layers that is the architecture of ChatGPT. When we look down this structure from the top, language and discourse are data created by the neural net acting as a program, and the neural net is, in turn data created by the mechanisms of ChatGPT acting as a program.

There are people hard at work trying to figure out what that mystery level is doing, but it is going to be awhile before they can tell us how ChatGPT can tell stories. I’ll be interested to see whether or not examining those before-and-after tables will give them some hints about what to look for. We shall see.

It’s your turn, think for yourself

Meanwhile, what can you do to come to terms with these new creatures, these new quasi-minds, these cogitating artifacts, these miracles of rare device? First of all, get yourself an account on one of them and play around. At the moment ChatGPT is, I believe, the only language model that’s widely available to the public. Microsoft’s Bing has been the source of a lot of news, but only a relatively small group of people have access to it yet – but you can put yourself on a waiting list. Google’s going to be getting it’s Bard ready and I’m sure Meta has something up its sleeve as well.

The genie is out of the bottle and it’s not going back.

I wish the tech community – developers, researchers (industrial and academic) and journalists – was doing a better job of informing and educating us about the technology. Less hype, more thought. It is what it is.

The fact is, the job of getting up to speed – yeah, I know, a cliché – is more than the tech community can handle. The schools at all levels will have to change and adapt, drastically. Everything else is going to change as well. We need to think in new ways.

We’ve reached a tipping point. Things will never be the same again. We’re surfing the singularity.

[No, no, no! Not that singularity, the one with super-intelligent machines and who knows what else. The real one, the one where we learn to think in new and deeper ways, with help from our new artifactual thought partners.]

Coda: Of local interest

Out of curiosity, I asked ChatGPT if it knew about 3 Quarks Daily:

Are you familiar with a web magazine called “3 Quarks Daily”?

Yes, I am familiar with “3 Quarks Daily”. It is an online magazine that features a wide range of articles and essays on various topics, including science, philosophy, politics, literature, and the arts. The magazine was founded in 2004 by Abbas Raza, and its name comes from the concept of “quarks” in particle physics, which are fundamental building blocks of matter. The magazine’s aim is to provide a forum for intellectual exchange and to promote interdisciplinary thinking and discourse. 3 Quarks Daily has gained a reputation for featuring high-quality writing and thought-provoking content from a diverse range of voices.

I also asked if it knew about me. It did, but some of its information was wrong. I gave it the titles of some of my 3QD articles. Its brief remarks about them were a mixture of fact and fantasy.

Some of my earlier pieces at 3QD are relevant here

I’ve published a number of articles at 3QD relevant to this topic. In order from most recent to oldest:

Conversing with ChatGPT about Jaws, Mimetic Desire, and Sacrifice

Direct Brain-to-Brain Thought Transfer is a High Tech Fantasy that Won’t Work