Sometime in 1984, after Apple had released the first Macintosh, my friend Rich sent me a short note he’d written on one. The note had some text, not much, nor do I remember about what, and a black and white image of Japanese woman that Apple had put on the over of the user guide for the Mac. I took one look and thought, “text and images together on a page from the same computer, I gotta’ have one.” So I took out a loan for $4,000 ($12,000 in current dollars for the Mac, disk drive, and printer) and bought one. I wrote text, made images, and above all, put them on the same page using the same program.

I ended up writing an article for Byte Magazine, one of the premier magazines for home computers back then, “The Visual Mind and the Macintosh.”

In my opinion, the Apple Macintosh is the most significant microcomputer since that original MITS kit. but its importance hasn’t been adequately explained. The Mac is user friendly, but even more important is what lies beyond that user-friendly interface–MacPaint. […] By making it easy for us to create images and work with them, the Macintosh can help us to think. Perhaps our society will create a pool of images for thinking comparable to our pool of proverbs and stories.

A year or so later one Michael Green published an astonishing book, Zen and the Art of Macintosh. Green used a Macintosh to place text and images together on each page, seamlessly, wonderfully. I began daydreaming about a publishing renaissance, page after page where text and image worked together in new and wonderful ways.

Alas, it didn’t happen. Retrospectively it’s obvious why. Who’s going to create such books? Relatively few people are highly skilled in the production of both text and images. Green is exceptional.

On December 6, 2024 I published the first of many posts in which I asked Claude to describe a photograph I taken of a Burger King outside the Holland Tunnel at night. When I asked, “What artist painted pictures like this?” it got the answer I was fishing for: Edward Hopper. That’s been an interesting series of posts, though I have don’t one since the end of April.

At roughly that same time, April 24, I did a post where I had ChatGPT create variations on an image that I uploaded. That’s the first post where I used an image created by ChatGPT. I’ve done many such posts since then, and created many images with ChatGPT that I’ve not posted. In many cases, perhaps the majority at this point, I gave it a photograph as the basis for an image. In some cases I asked it to modify the image in a simple way (see the coffee cup below) while in other cases I asked it use that image as the basis for a new one. In a few cases I’ve had it create an image on the basis of a verbal prompts, sometimes simple, sometimes complex.

In the rest of this post I give you a small handful of examples. As is often the case with my posts, it’s a bit wordy, so you might pour yourself a gin and tonic or a cup of tea. Or you might decide, “It’s got pictures! To hell with the words!” Let’s dive in.

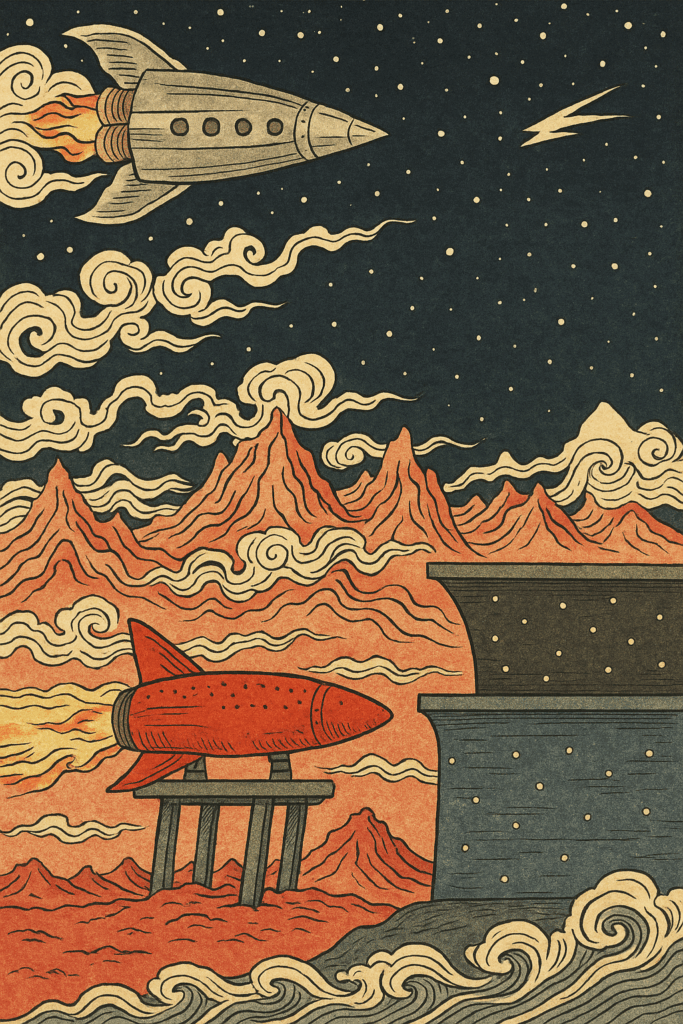

I was fascinated by the prospect of space travel as a young child, perhaps as a result of several episodes of Disneyland, but also movies like The Forbidden Planet. When I started taking weekly art lessons I took that interest with me. This is one of my early paintings:

What, I wondered, if that image had been rendered by a Japanese ukiyo-e artist? I uploaded a photo of the painting and asked ChatGPT to render it in that style:

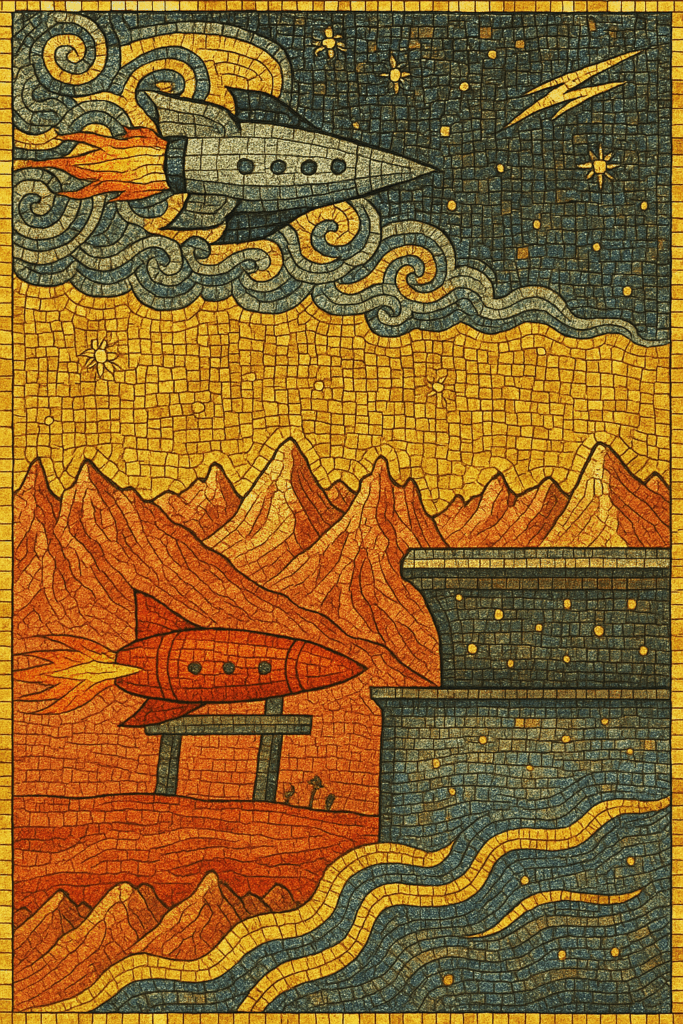

Notice the characteristic forms of the clouds and waves. I then asked it to render the same image in the style of a medieval miniature from an illuminated manuscript, which it did, and offered other styles as well, like Byzantine mosaics or Renaissance frescoes. I chose Byzantine mosaics:

Imagine how that would as an actual mosaic, with all those gold tiles.

Whatever you may think of those images – I like them, a lot – consider the fact that ChatGPT was able to do them at all.

It’s long been a commonplace of art commentary to distinguish between style and content. ChatGPT had to do that to produce those images. Each copy preserved much of the content of my original painting – the rockets, mountains, the building, the stars – but rendered them in a different style. Who’d have thought that a purely mechanical process would be able to make and utilize that distinction. (These chatbots can do the same with language.)

I’ve spent quite a bit of time using ChatGPT to produce images on the basis of photo-prompts, like those space images. One day I decided to see what I can do with some of my graffiti photos. The fact is, when I first started tromping the hinterlands of Jersey City in search of graffiti, I felt like I was a ten-year old kid pretending to be Indiana Jones exploring some lost city. That’s what I’ve done in this series of images: Indiana Jones goes to an alien world and ChatGPT gives us pictures. I gave ChatGPT one of my graffiti photos and asked it to treat it as a scene in an unfolding adventure. The adventure quickly went off-planet to another world. At various points along the way I introduced other photos for reference.

The starting photo is from an area known locally as “the oaks.” It’s a small densely wooded area. The bit of masonry you see at the right is the edge of a pillar supporting Exit 14C of the New Jersey Thruway. All of the pillars at this point are covered with graffiti at ground level. We’re at the foot of the Palisades and within one or two hundred yards of 12th Street in Jersey City, which feeds into the Holland Tunnel, taking traffic to Manhattan. Thus, this little jungle vignette is in fact in one of the most densely populated urban areas in the United States.

Here’s the image I started with:

And here’s my initial prompt (in bold face, ChatGPT’s response is plain-face):

Imagine that we’re on an Indiana Jones adventure. We come to this spot and know that there’s the ruins of an ancient temple somewhere down that river. We start walking. What’s the next thing we see? But not the temple, not yet.

You hack at the trailing vines, and as they fall away you catch sight of something glinting in the dappled sunlight: a low stone plinth, half-submerged at the water’s edge, its top carved with a snarling, feline-headed guardian. The moss has nearly overtaken the bas-relief, but you can still make out its wide, carved eyes staring out at you, almost daring you to proceed farther downriver.

Beyond the plinth, a faint trail of broken pottery shards leads off into the undergrowth, and you can hear the soft drip of water echoing from somewhere ahead—perhaps a hidden grotto or the remnants of an old sluice that once fed the temple’s inner sanctum. A sudden breeze stirs the leaves, and you think you glimpse, deeper in the shadows, the jagged outline of what might be a toppled column, its capital half-buried under a tangle of roots.

Clearly, someone—or something—has been here before. You press on, your hand brushing against the jagged stone face of the plinth, heart hammering as you realize the real adventure is only just beginning.

Now, paint me a photo-realistic picture.

Here’s a photorealistic rendering of our jungle riverbank scene: a moss-covered feline-headed guardian plinth, broken pottery shards underfoot, and the hints of toppled temple columns drifting into the leafy shadows. Let me know what you’d like to explore next!

Aspects of the original photo show up in the dense vegetation, the small creek, and the overall composition. ChatGPT came up with that cathead sculpture, pot shards, and the ruined column.

Very good. We move 100 yards along the right side of the river and the jungle begins to clear away. Draw me a picture of what we see.

Here’s our next scene: the riverbank clearing, with moss-covered steps leading up to a partially reclaimed stone entrance, all bathed in warm, golden light. Let me know where our adventure takes us next!

Notice that ChatGPT has added palm trees to the vegetation, but the general texture and density is consistent with the original photo.

Rather than step through the entire sequence – and you can follow it on my blog, New Savanna, at the previous link – let’s go off-planet to a strange-new world, one that is however based on photos of this not-so-strange world.

Notice the graffiti all over the ruins, these space-aliens seem to like the Roman alphabet, and that fairly standard image of an alien that we see at the upper right. Those buildings in the distance, though, seem quite alien. Here’s the photograph ChatGPT was working from:

It retained the basic structure and texture of the ruins, but opened it up in the middle to give us a glimpse of those alien buildings.

I took this next photo on the roof of an old industrial building in Jersey City. You can see a scrap of graffiti to the left, low industrial buildings in the center, and the combined skylines of Jersey City (to the left) and Lower Manhattan (to the right). The new World Trade Center building is in the middle; it was still under construction when I took this photo.

Here’s what ChatGPT did with it:

ChatGPT retained the general composition of the photo, though it changed the aspect ratio. We see graffiti at the left and a water tower just to the left of that. The low industrial buildings in this image are much like those in the original photo, but the skyline is quite different. Those skyscrapers are architecturally of a piece those we saw in the earlier rendering. And then we have that double-star illuminating the scene. We’re not in Kansas anymore.

I have no idea how ChatGPT decides what aspects of the original photo to retain and what things it’ll invent.

ChatGPT is quite capable of generating an image on the basis of nothing more than a verbal prompt. What’s going on here should be self-evident:

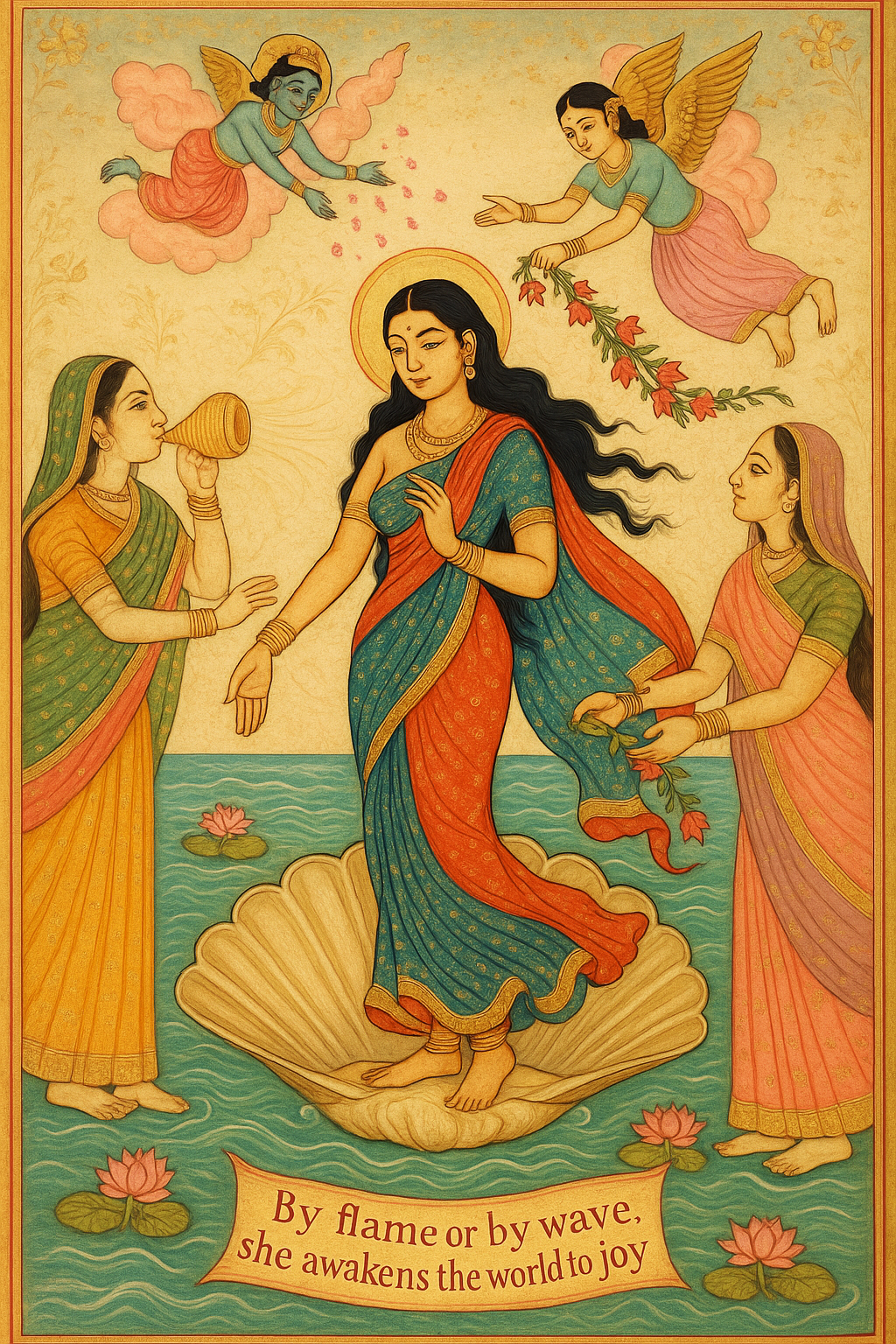

I want an image of a new superhero. She’s female and goes into action to advance pleasure, equality, and justice. She wears a magic sari that can change from hot pink flames to cool blue waters as the occasion dictates. How do you envision her?

What should we call her? “Kama Carnatica,” for the Kama Sutra and music? “Kama Shazaam!” is a bit humorus. Do you have any ideas?

Those are delightful! “Kama Carnatica” has a lyrical, classical elegance to it, while “Kama Shazaam!” is playful and subversive—a perfect Fourth Arena tension.

Here are some more possibilities, ranging from poetic to punchy:

- Shakti Rasa – “Power Essence”; invokes divine feminine force and the aesthetic flavor of erotic and emotional experience.

- Devi of Desire – A clear nod to her role as protector and celebrant of pleasure.

- Sundari Nova – “Beautiful One, Reborn”; blends Sanskrit with futurism.

- Kama Shakti Boom! – A rhythmic explosion of pleasure and power.

- Agent Ananda – “Ananda” means bliss; think of her as the secret agent of sacred joy.

- The Pink Lotus – Subtle, symbolic, and sensuous.

- Homo Ludens Prime – Our post-Homo economicus champion in sari form.

- Moksha Mode – She flips the world from grind to liberation.

- Auntie Pleasure – If you want the wise-and-wicked South Asian matriarch energy.

I love those. Maybe she’s a shape-shifter as well. Give me an image of her as Auntie Pleasure.

If you’re wondering what I’m doing thinking about the Kama Sutra – which, BTW, is much more interesting than you think if all you know about it is that it has a lot of sex positions – you might read my article from last month: Redeeming Pleasure: Women Lead A Second Sexual Revolution.

Some years ago I was invited to a birthday party for a four-year-old girl. What was I going to get her? I knew that she’d been talking a lot about monsters recently, so I decided to write a little book for her, “Valerie and the Monsters.” I decided to use that as the basis for a longish prompt to ChatGPT.

Some years ago I was invited to a birthday party for a four-year-old girl. What was I going to get her? I knew that she’d been talking a lot about monsters recently, so I decided to write a little book for her, “Valerie and the Monsters.” I decided to use that as the basis for a longish prompt to ChatGPT.

I want you to create four panel comic. First I’m going to tell you a story to set the mood. Then I’m going to give you specific instructions.

In this story a little girl named “Valerie” – the four-year-old’s name – was given a magic pencil. Whenever she came across a monster all she had to do was draw a picture of it with the magic pencil and she’d tame the monster. So, in the book I drew pictures of the monsters, wacky semi-funny monsters. I left the facing pages blank so that Valerie could use her pencil to draw a picture of the monster.

When her mother read the story to her at the birthday party she wanted to draw the pictures. But of course her mother didn’t let her. Still, when the story was over, Valerie went around and hugged everyone in the room.

I’m thinking of something similar. I want to make a comic book with a series of four panel episodes. In the first panel a little boy is confronted with a large and frightening “monster,” broadly understood. In the second panel the monster tells the boy, “Guess my name or I will harm you, or your friends and relatives.” In the third panel the boy stands tall and says, “You name is…” And he says the name. In the final panel the monster is chastened and the boy is triumphant.

I suppose that’s more of a prompt than is strictly necessary. But results are generally better if you give ChatGPT a detailed prompt. While I’m sure ChatGPT could have produced images even if I hadn’t given it the story about Valerie’s birthday, I figure the story contributed to the vibe of the images I wanted it to create. Here’s the actual image-generating prompts and the images ChatGPT produced in response.

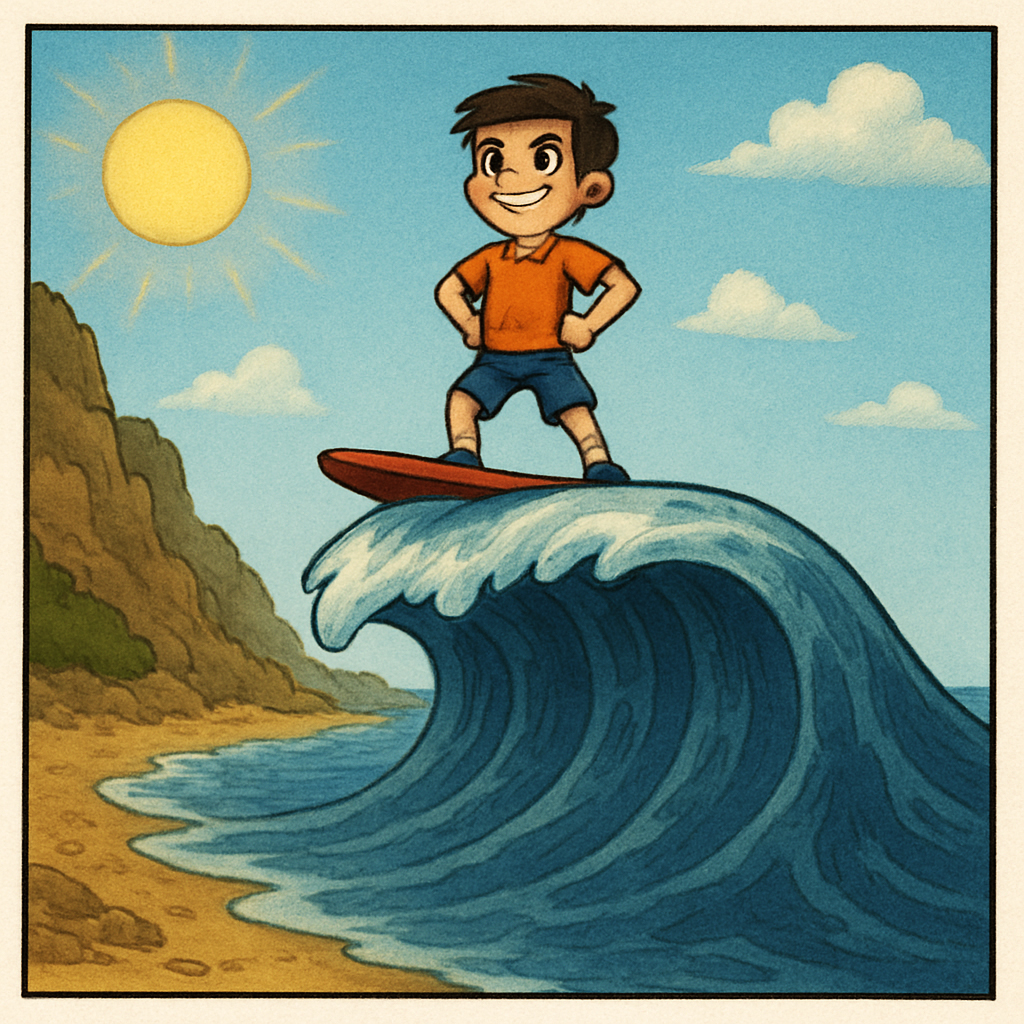

Let’s try one. In this one the monster is a tsunami. In the first panel we see a little boy standing on the beach in the center of the panel. There’s a rocky hillside behind him. In front of him there’s a large tsunami wave looming high over him, as high as the hillside behind him. He’s cowering down and looking up the way. The sky is cloudy and overcast, maybe with a lightning bolt in the distance.

Now let’s draw the second panel. We see a word balloon at the top of the wave. It’s saying, “Guess my name or I’ll drown you.”

OK, the third panel. The boy is standing tall, a look of defiance on his face. He says, “tsu…tsu…tsu, tsunami!”

In the forth panel the sun has come out, the sky is bright, and the boy is standing tall on a surfboard atop the tsunami wave.

Imagine what you could do if you worked at it. I chose four panels because it was convenient. But you could easily add other incidents to this little vignette, perhaps one just before the tsunami arrives, maybe another having the boy curl up in a ball once he’d been threatened by the tsunami. But if you do that, then you’re going to have to uncurl him and get him to stand his ground. Perhaps a beloved pet can do that. And so forth.

But it doesn’t have to be a tsunami. It could be a volcano, a tornado, a rampaging bull, or even a swarm of angry wasps. Why not all of them; create a whole bunch of hero stories. Nor does it have to be a boy. Nor a Caucasian. And so forth.

If you have a specific youngster in mind, you could upload a photo and have ChatGPT model your protagonist on them. You could specify a particular cartoon style. I didn’t specify any and just went with ChatGPT’s default. But you could specify studio Ghibli. OpenAI hyped that style a lot when then they released I-forget-just-which-version and the web was flooded with Studio Ghibli-like kids. I’ve tried classic Warner Brothers, classic Disney. I even tried The Simpsons:

Where’d she come from? She wasn’t in the prompt I gave the chatster. She must have snuck in from the Karma Carnatica section.

Oh Ohoooo..! Something’s rotten in the state of Denmark.

Has History Been White-washed?

Is it possible that Botticelli based his famous “The Birth of Venus” on a Mughal miniature?

Did Leonardo have a secret South Asian mistress?

And forget about Arrangement Grey and Black No. 1, aka Whistler’s Mother. Did James McNeill Whistler have a South Asian auntie he never told anyone about?

And what are we to do with this? Are we dealing with another European gentleman, Pierre Gautreau, who had a secret exotic mistress?

Seriously, though. There’s something wrong with this parody, and I’m not just talking about the color of the dress. If you’re familiar with John Singer Sargent’s Portrait of Madame X you know that she was looking toward the right of the picture (over her left shoulder). ChatGPT made a mistake, which is not unusual. When I asked ChatGPT to correct its mistake, to have her “facing to the right, like in Sargent’s original?, it gave me another slightly different image, but still with the same orientation. Figuring it might be confused about left and right I gave it another prompt: “No. Have her face in the other direction.” The same thing happened. Slightly different, but still facing the wrong way.

Hmmm, thought I to myself, children sometimes have difficulty learning the difference between left and right. Could ChatGPT have the same problem? Figuring there would be no point in asking it – how would it know? – I decided to at least verify my thought about children:

On a different topic, do children sometimes have difficulty learning the difference between left and right?

Many children have difficulty learning the difference between left and right, and it’s a more complex task than it may seem at first glance. Unlike directions like up and down, which are fixed by gravity and the structure of the body, left and right are relative and change depending on orientation. What’s on your left becomes your right if you turn around. Because of this, left and right are not absolute positions in space but require an internal sense of body orientation. That means children must first develop a stable mental model of their own body in space before they can reliably use these terms.

Typically, children begin to recognize their dominant hand—usually their writing hand—by age 3 or 4. But the ability to name and distinguish left from right, especially when applied to both themselves and others, tends to solidify between ages 5 and 7. There is considerable variation, however, and it is not unusual for children to continue to confuse left and right beyond that age. Some individuals, including many with learning differences like dyslexia, may have difficulty with this distinction well into adolescence or adulthood.

One challenge is that making a left/right distinction often requires mental rotation or perspective-taking, especially when interpreting instructions from someone facing you (like in dance or sports). Many children hesitate, look at their hands, or use simple tricks to help them decide. A common example is making an “L” shape with the thumb and forefinger of each hand—the left hand makes a proper “L” when held up. Others use verbal cues, such as remembering which hand they write with or which side they wear a watch on.

Unlike children ChatGPT is not embedded in the world. Is that why it had difficulty with left and right? If so, why did it get the Whistler’s Mother parody correct? It is strongly left-right oriented. Perhaps it’s just a matter of chance. Or is it because Whistler’s painting had two objects in the focal space, his mother and a small picture on the wall? Maybe the pair was more “stable” than a human alone.

Who knows? The ways of chatbots are ever mysterious.

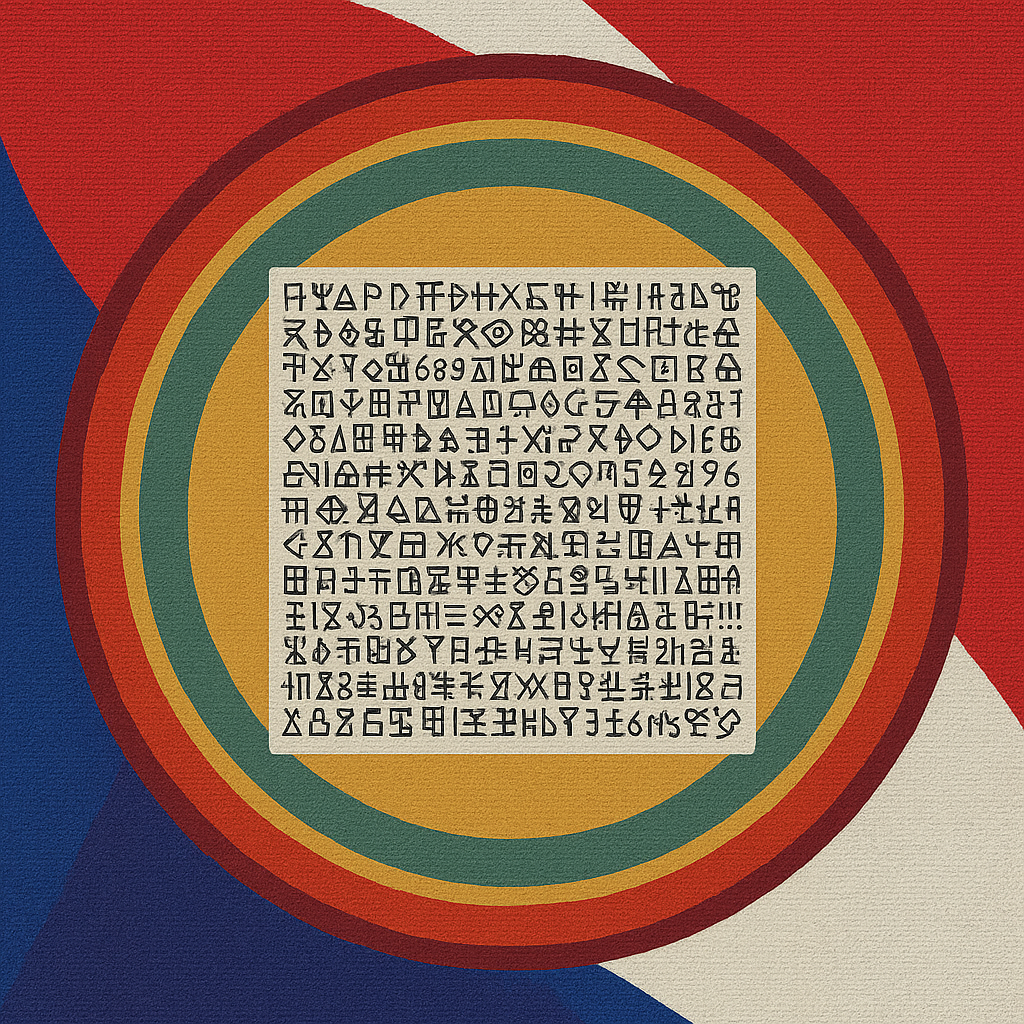

“Mandala” is a Sanskrit term (मण्डल) meaning circle. According to Wikipedia it “is a geometric configuration of symbols. In various spiritual traditions, mandalas may be employed for focusing attention of practitioners and adepts, as a spiritual guidance tool, for establishing a sacred space and as an aid to meditation and trance induction.” When I uploaded a photograph of an iris to ChatGPT and asked it to base a mandala on it, this is the result, a highly stylized image of an Iris inscribed within a circle (other mandalas):

Here’s a very different mandala.

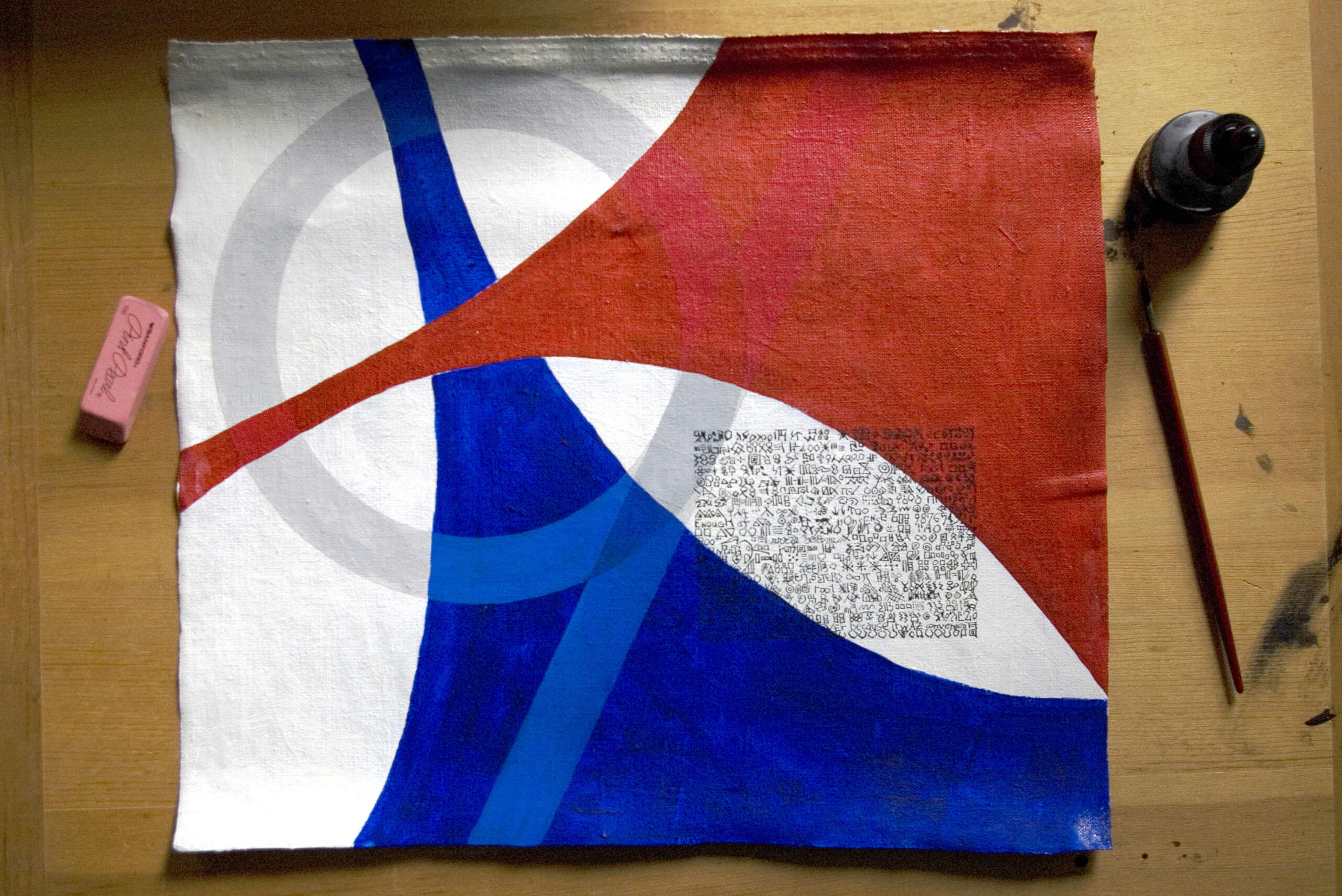

Notice the fine-grained canvas-like texture across the whole surface; I like that a lot. That mandala – can we call it a mandala? – wasn’t derived from a photograph of a flower. It was derived from this photo:

That’s an abstract image painted on a piece of unstretched canvas which is lying on a drawing board. The dominant colors in the mandala are clearly derived from that photo, as are the (meaningless) glyphs and the lozenge or eye-like form. The yellow might be derived from the color of the drawing board and the brown from the wooden pen. The green is pure invention.

This final mandala, and the concluding image in this article, was based on a verbal prompt. Over a period of several days I had been playing around with some documents in which I wrote about my intellectual history. I decided to have ChatGPT use that as thematic material for a mandala. Here’s the prompt I gave it, along with the mandala it produced:

I’m going to give you a short highly metaphorical account of my intellectual life. Taking my history into account, use that as the basis for a mandala about my intellectual journey. Here it is:

In the early 1970s I discovered that “Kubla Khan” had a rich, marvelous, and fantastically symmetrical structure. I’d found myself intellectually. I knew what I was doing. I had a specific intellectual mission: to find the mechanisms behind “Kubla Khan.” As defined, that mission failed, and still has not been achieved some 40 odd years later.

It’s like this: If you set out to hitch rides from New York City to, say, Los Angeles, and don’t make it, well then your hitch-hike adventure is a failure. But if you end up on Mars instead, just what kind of failure is that? Yeah, you’re lost. Really really lost. But you’re lost on Mars! How cool is that!

Of course, it might not actually be Mars. It might just be an abandoned set on a studio back lot.

Is the Martian landscape in the lower right of the mandala near the landscape I’d depicted in that painting I’d made as a child? Are the red and blue in the mandala akin to the red a blue in Kama Carnatica’s magic sari?

***

Enjoying the content on 3QD? Help keep us going by donating now.