by Jochen Szangolies

On May 11, 1997, chess computer Deep Blue dealt then-world chess champion Garry Kasparov a decisive defeat, marking the first time a computer system was able to defeat the top human chess player in a tournament setting. Shortly afterwards, AI chess superiority firmly established, humanity abandoned the game of chess as having now become pointless. Nowadays, with chess engines on regular home PCs easily outsmarting the best humans to ever play the game, chess has become relegated to a mere historical curiosity and obscure benchmark for computational supremacy over feeble human minds.

Except, of course, that’s not what happened. Human interest in chess has not appreciably waned, despite having had to cede the top spot to silicon-based number-crunchers (and the alleged introduction of novel backdoors to cheating). This echoes a pattern well visible throughout the history of technological development: faster modes of transportation—by car, or even on horseback—have not eliminated human competitive racing; great cranes effortlessly raising tonnes of weight have not kept us from competitively lifting mere hundreds of kilos; the invention of photography has not kept humans from drawing realistic likenesses.

Why, then, worry about AI art? What we value, it seems, is not performance as such, but specifically human performance. We are interested in humans racing or playing each other, even in the face of superior non-human agencies. Should we not expect the same pattern to continue: AI creates art equal to or exceeding that of its human progenitors, to nobody’s great interest?

Perhaps, but the story here is more complicated. First of all, art isn’t generally considered explicitly competitive. While we may identify the ‘best’ artists as those whose works command the greatest value, say, at auction, that’s not why we make art (ostensibly, at least). Chess, on the other hand, is played to win—so while in the past, we could elide the distinction between an abstract concept of ‘mastery’ and the more concrete ‘able to beat other humans’, having it made explicit by the introduction of machines that can take part in mastery, but not in the human game of chess, comes at no great cost. With art, this is different, if we don’t want to reduce it to just another way of ranking humans.

Furthermore, the occasional chimp notwithstanding, art may be conceptualized as a quintessentially human endeavor, more so than the act of finding an optimal move among a large, but still finite number of options. Art comes from ‘the soul’, whatever that may mean—so where does the production of art by advanced AI systems leave that notion?

All of these, it seems, are just different ways of interrogating a deeper question: what is it that we value in art in the first place? And whatever that is, does—or could, conceptually speaking—AI art encompass it?

I won’t pretend, or even really try, to make any headway on these questions in the present essay. Rather, I take them as an opportunity to investigate a comparatively less-trodden sidetrack, namely, the ostensible dichotomy between ‘AI’ and ‘us’. We tend to think of AI as a potentially rival, independent wellspring of intelligence: some intelligent ‘other’, perhaps something akin to an alien species, arisen in potential competition to our way of thinking, feeling, and creating art, and threatening its continued existence, whether through explicit doomsday scenarios, by rendering it irrelevant, or simply by subsuming it.

In contrast, I submit that intelligence—all intelligence, not just the explicit reasoning facilities of individual humans, but also cogitation on a social level, the embodied intelligence of animals and plants, even the continuous, evolutionary adaptation of life itself—is part of the same movement of ‘hyperintelligence’ that relates to individual appearances of intelligence in the same way the hyperobject relates to its constituent, localizable parts, or that hyperbeing relates to individual life-forms. Just as the story of life, then, is not the story of individual life-forms locked in a red-of-tooth-and-claw struggle for survival, the story of intelligence is not that of any particular manifestation out-smarting another.

AI Will Not Out-Compete Us

There’s a common argument about the potential superiority of AIs as compared to human intelligence that’s often phrased in the form of the following argumentum ad canem (see, e.g., David Wolpert’s take): there are plenty of things—quantum mechanics, powered flight, taxes—that we understand (to some degree, at least), but that one could never explain to a dog. Hence, should we not expect that there are likewise things that can be understood by a superior intelligence, but that it could not explain to us any more than we could explain Bernoulli’s principle to a canine?

Add to this then the idea that artificial intelligence, once things get going, may rapidly evolve to a level of such superiority, and human intelligence—like human chess-playing capacity—will become relegated to at best a second-tier sideshow, of interest only to those who, by misfortune of their human birth, find themselves unable to transcend it. As AI snatches mastery in the domain of general intelligence from our grasping human hands (and minds), the mere ability to out-think fellow humans ceases to be a driver of progress, instead becoming a spectator sport at best.

But this gets something essential wrong about intelligence—in a way related to how worries about AI art get something wrong about art in general. Consider the following, structurally equivalent argument: there are functions your personal computer can calculate, that the calculator on your desk is strictly unable to. Hence, one should imagine a device along the same continuum capable of computing functions incalculable by your personal computer—right?

But no: there are no such devices. The reason for this is computational universality: while there are many devices capable of computing only a certain subset of functions, surprisingly, there are also universal devices capable of computing all of them—or all that are in-principle computable, at any rate. Thus, the greatest supercomputer in the world right now, the Frontier system at the Oak Ridge National Laboratory, can’t compute anything your personal computer can’t also compute—the latter would just take much, much longer, and probably run out of working memory (or break down due to material fatigue). This is because your computer can simulate every part of Frontier, and thus, can simulate its action during any given computation—time and memory permitting.
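To make the idea of one device simulating another a little more concrete, here is a minimal sketch in Python. It is only an illustration, not a claim about how any real system works: the names `run_machine` and `INCREMENT` are made up for this example, and the simulated device is a toy one-tape Turing machine that adds one to a binary number. The point is that the simulator itself knows nothing about arithmetic; it just follows whatever rule table it is handed, which is the sense in which a general-purpose device can stand in for any special-purpose one, given enough steps and tape.

```python
from collections import defaultdict


def run_machine(rules, tape, state="start", halt="halt", max_steps=10_000):
    """Simulate a one-tape Turing machine described by a rule table.

    `rules` maps (state, symbol) -> (new_symbol, move, new_state), where
    `move` is -1 (left), +1 (right) or 0 (stay). "_" is the blank symbol.
    The head starts on the leftmost cell of `tape`.
    """
    # Unwritten cells read as blank, so the tape is unbounded in both directions.
    cells = defaultdict(lambda: "_", enumerate(tape))
    head = 0
    steps = 0
    while state != halt:
        if steps >= max_steps:
            # "Time and memory permitting": a finite budget stands in for both.
            raise RuntimeError("step budget exhausted")
        new_symbol, move, state = rules[(state, cells[head])]
        cells[head] = new_symbol
        head += move
        steps += 1
    # Return the non-blank stretch of the tape as a string.
    used = [i for i, s in cells.items() if s != "_"]
    if not used:
        return ""
    return "".join(cells[i] for i in range(min(used), max(used) + 1))


# A hypothetical special-purpose "device": binary increment.
INCREMENT = {
    # Walk right to the end of the number.
    ("start", "0"): ("0", +1, "start"),
    ("start", "1"): ("1", +1, "start"),
    ("start", "_"): ("_", -1, "carry"),
    # Walk back left, turning trailing 1s into 0s until the carry is absorbed.
    ("carry", "1"): ("0", -1, "carry"),
    ("carry", "0"): ("1", 0, "halt"),
    ("carry", "_"): ("1", 0, "halt"),
}

print(run_machine(INCREMENT, "1011"))
```

Swapping in a different rule table changes what gets computed without touching `run_machine` at all; the only limits the simulator imposes are the step budget and the memory available for the tape.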

The argumentum ad canem makes use of a hidden premise: that there is no qualitative distinction between the intelligence of a dog and a human. This assumption fails in the case of the calculator: its special-purpose logic cannot capture certain functions, while the general-purpose design of a modern-day computer can. Hence, we cannot generalize from the limitations of the calculator to those of the computer. Should we expect it to hold in the case of human intelligence?

First of all, let us note that by intelligence, we mean for the moment the rather narrow domain of symbolic intelligence—the step-by-step, formal reasoning process that takes assumptions to conclusions, derives consequences from posits, and so on: the ‘System 2’ style of conscious reasoning (the character of the lobster in my own ensemble). This is widely believed to be a uniquely human capacity, other animals lacking the explicit symbolic means of expression (i.e., language) it seems to depend on. So there seems to be a genuinely novel quality emergent somewhere along the scale between the dog and the human, calling the generalization into question.

Moreover, we have reason to believe that these System 2-capacities are as universal as those of the general-purpose computer. For one, we can emulate different symbolic systems: we can translate, formulate the same thoughts in different languages. While each language shapes thoughts formulated in it, stronger forms of the Sapir-Whorf hypothesis that allege that linguistic categories limit or determine cognitive categories are generally dismissed by modern-day scholarship.

Additionally, the same universal rules of reasoning programmed into general-purpose computers can be emulated within human-level symbolic reasoning—we can compute anything a general-purpose computer can, if only with effort, and subject to time- and memory-constraints. So in particular, nothing an AI could do is in principle off-limits to us: just as there are no distinct orders of computation, once a certain threshold has been surmounted, there is ultimately only one intelligence, manifesting itself in different localized forms.

Of course, an AI powered by sufficiently beefy hardware may still leave the wetware of the human brain far behind in terms of simple speed. Thus, any individual human, like any individual chess-player or artist, may find themselves outmatched by a given AI agent. It’s here that we must confront the second fallacy of AI doomism: not only can intelligence not simply be ordered along a scale, with AI coming out above humans, it can also not be localized solely in the individual, but is, rather, a collaborative social phenomenon that, once it gets going, rapidly encompasses and envelops a widely diverse range of beings and processes into an overarching, historical movement of hyperintelligence.

Sticky Intelligence

As we have seen, artificial intelligence, no matter how advanced, will not exceed human intelligence on the basis of thoughts that can be thought—anything that can be thought by an AI, can also be thought by a human intelligence (in principle). Thus, there is no insurmountable barrier between human and artificial intelligence, the way there is one between you and your dog (on the level of explicit, symbolic reasoning, at least): both can be integrated into the same discourse.

However, this integration necessitates leaving behind the narrow definition of intelligence as ‘System 2’-style explicit reasoning. Intelligence is, in fact, to be found in a wide range of natural and artificial processes, from the way slime molds find optimal transport routes between distinct locations, to the immune system tailoring its response to a near-limitless variety of potential invaders, to plant roots following foraging paths, to evolution itself finding optimal adaptations of life to the affordances of a changing environment. The ‘System 2’-style reasoning we generally associate with ‘intelligence’ simpliciter is simply that ubiquitous undercurrent of intelligence for a brief moment breaking the waterline to come into conscious access—the proverbial tip of the iceberg hiding the bulk of complex processing carried out unconsciously beneath (at the level of ‘System 1’, where the octopuses live).

Perhaps an analogy can make this relationship more immediate. Suppose you’re playing a video game. When you press one key, the character you control turns right; another, they turn left; another, they start to run; and so on. Some simple key presses may prompt the execution of complex actions, like blocking an enemy’s sword and executing a finely-choreographed counter, or jumping to a ledge, grabbing on and climbing up.

The relationship between you and your avatar then seems to be a tenuous one: whereas in real life, you seem to take action, in the game, you merely issue commands, which are then translated into appropriate actions by the game’s own logic. But on closer inspection, the difference turns out to be almost negligible: when you take any action—say, tying your shoes—you don’t micro-manage every movement, telling muscles to flex, fingers to bend in a complicated dance. All you do is issue the intention, formulating the desire of having your shoes tied—and your fingers do the rest.

That is, much of the intelligence in tying your shoes is offloaded onto unconscious processes, just as much of the intelligence in your character’s actions on-screen is offloaded to your computer’s processing. In both cases, only fleeting threads of causality briefly enter into ‘System 2’s purview for inspection, before being handed back to the unconscious machinery largely responsible for forming the world according to your intentions. To limit the concept of intelligence only to this administrative oversight would be to disregard its largest and most effective part.

This insight can be extended to the creation of works of art. In the Star Trek: The Next Generation episode ‘When the Bough Breaks’, a little boy is introduced to a sculpting-tool that, aimed at a block of wood, instantly shapes it into the desired form. Startled, the boy wonders whether it was really he who did this—who created the sculpture. But then, is the relationship between our intentions and fingers skilled by many years of training so different? Do we not sometimes marvel at what they create, apparently without ‘our’ guidance?

On this view, such a tool would just be an extension of human creativity—a part playing its role in the way the overall movement of intelligence, of creativity, manifests itself in the world. Intelligence is sticky: it is transferred from the agency formulating an original intention to and through their tools, the processes they put into motion, thus creating an overall movement not limited to the spatiotemporal confines of any single being carrying out a specific ‘intelligent’ act. Does AI art, created by nothing but a thin prompt posed to a sophisticated algorithm, not occupy a similar place?

The Aura of Intelligence

The relationship between art and technology has always been a fraught one. Developments that at first seem devastating may turn out to be a blessing in disguise: the invention of photography might have seemed threatening to art as representation (who would want to sit for hours or days on end, when you can have your likeness preserved in a literal flash?), but it instead widened the scope of art, enabling an exploration of abstract spaces without strict correspondence to the world around us.

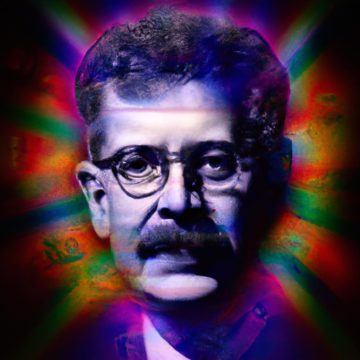

The classical investigation into the relation between art and technology is Walter Benjamin’s seminal text ‘The Work of Art in the Age of Mechanical Reproduction’. It has been much appealed to during the days of the NFT-hype (remember those?), but it is even more à propos now that we are entering the age of mechanical production of art. A central concept of Benjamin’s theory of art is that of the aura: the particular quality of the here-and-now of a work of art that is absent in its mere reproduction.

With the possibility of technological reproduction, the aura is diminished: the particular history of a work of art is replaced by the generic mass-produced circumstances of its copies. That is why people pay more for the original than for a copy, no matter how faithfully similar it might be: the context of the creation of the original, its historical nature, can never be reproduced. The creation of the NFT can then be seen as an attempt at creating an artificial aura, a post-hoc mark of originality, that fails to fulfill its role by its own arbitrary nature (it is not the fact that there is some context attached to a work of art that lends it its aura, but that it is that particular context specific to its creation).

However, to Benjamin, the aura is not a positive notion: rather, it serves an exclusionary function, allowing an elitist governance of the true artwork. From this point of view, the aura is a kind of manufactured exclusivity, prohibiting a democratization of art (and the NFT its hollowed-out shell in that regard).

Benjamin’s criticism of the aura in its function as a means to deny equal access to art is well taken. Still, there is something about the work of art that goes beyond its mere physical embodiment—the artifact as such: a random congealment of atoms in the exact likeness of the Mona Lisa in a chaotic universe void of life and creativity might be physically indistinguishable from it, yet could not constitute a work of art proper.

In contrast, then, the AI-produced work of art might be thought of as inherently without aura, and thus, reducible to its mere physical (or informational) artifact. But this is only right if AI is conceived of as essentially other, as artifice itself, separated from the historical movement of creativity we, by contrast, partake in. If, instead, what I have said is right—if intelligence, like breath, flows through us into our actions, into our works, and if we are just one particular local realization of that flow, a tangled vortex in its currents—then AI art partakes of just as much uniqueness and originality as human art does. Indeed, we might ultimately localize the aura within nothing else but that overall unfolding of intelligence and creativity, wherever it takes a particular form.

If the removal of the aura to enable the democratization of art then threatens the confusion of the work of art with the artifact, perhaps one might hope that the rise of AI art might instead remove the aura’s exclusionary aspect without simultaneously losing the dimension added to the merely physical by the overarching movement of unfolding creativity. There are obvious caveats to add to this conclusion, regarding the drive to turn a profit, or humanity’s questionable track record in engaging with non-human creativity. But for the sake of optimism, for now, at least, I will pass them over silently.