David Shields is a professor of English at the University of Washington and author of fiction, nonfiction and various hybrids thereof about sports, autobiography, celebrity and death. His new book, Reality Hunger: A Manifesto, uses collage writing to challenge preconceived ideas about form and genre in art, especially as they pertain to literature. Shields advocates disregarding these hardened constraints, a move which will allow art to use more of and become more like life itself. Colin Marshall originally conducted this conversation on the public radio program and podcast The Marketplace of Ideas.

In reading the book, which I really enjoyed, I try to picture what it would be like if I was reading in a complete vacuum, absent all the talk that's been going on around it — and there's been a whole lot of it, as you know. This is probably the 1,000th interview you've done about the book.

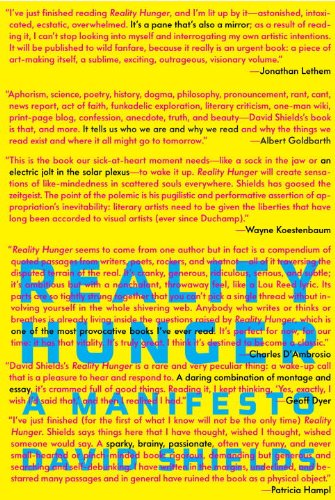

I think, okay, here's a book: 618 numbered sections, a lot of collage writing, a lot of remixing of writing from other sources, it advocates the spaces between genres, the spaces between forms, between truth and falsity. All this cool stuff that I like and think is important, but it would seem to me that it's going to be a niche book, it's going to be an academic book, maybe. Maybe it's not going to get a wide readership. But now, in the real world, this book is one of the most talked-about in recent memory. What do you think is going on here?

That's a good question. I totally agree with you! I was shocked that it was published by a commercial publisher. I got a tiny advance for it; I blush to mention how low it was, a virtually nominal advance. I think the publisher never expected it to have a great amount of attention either. I was, of course, hoping for it; every writer does with every book.

But what happened? I'm not sure. I couldn't point to a single review. Obviously it's gotten hundreds, even thousands, of reviews and blog mentions and things like that, but I can't point to a single review that was the catalyzing review. The only thing I would say is, I think to a certain degree, the book's arguments got cartoonized as two points: one, the novel is dead, and two, it's okay to steal stuff. Those are part of what I'm arguing — not even what I'm arguing in either case — but I think what happened is, those hot-button topics got grooved into the cultural discussion.

It's not like I don't partially agree with those statements, but those are far more nuanced in my book. That's not even the ultimate target of the book. Frankly, the book just came at a time when it is talking about stuff that people are concerned about. The book probably came along at a time when these topics were really crucial. What's my point? My point is that the book got cartoonized and the book came along at a time that these things had to be talked about: what is the fate of writing now? How do we want to think about copyright in a digital age? How do we want to think about the blurring of genres? Do people still read conventional novels? The book articulates all that.

I do think the killer app of the book was the disclaimer and the citations in the back, in which I refuse to provide citations, but then, with a gun to my head, I provide citations. Somehow that became, without any planning on my part, the book's killer app.

You mean the pages at the end where you mark down, if you can remember, who the writing that you remix, revise and put together from other sources came from?

Yeah, and I also preface it with a disclaimer. That was the book's killer app, almost, where I say, “I didn't want to provide these citations. The citations are in microscopic type. Many of the citations are misleading. The publisher made me do it. Please, for the love of god, don't read the citations. Stop, read no farther.”

I think that somehow became a door that a lot of readers and critics and bloggers could enter, like, “Oh, okay. I can talk about all these issues of copyright which have been swirling around us for the last ten years, the last five years especially.” We're very confused about appropriation and copyright in literature, and that one-page disclaimer of mine became almost a Trojan Horse for people to enter the gates of the city.

The reason that's there, of course, is the writing you used from other sources, used for your own arguments. I like how that was done, and I like the arguments you use those in service of making. But here's the reaction I have, and maybe you, as the author of the book, think the same thing. What you say about how the novel as we know it isn't so relevant, about how genres aren't so relevant, about how they might hinder art, about how plots and stories may be hindering art — and the type of collage writing you use: should any of this stuff be controversial in 2010?

I agree with you. The book has received a lot of reactions, somewhat contradictory. Some say, “My god, this is the most radical thing I've ever read. I can't believe you're talking about this stuff.” On the other hand, for people like you, who are more forward-thinking, it's almost like, yawn, it's all self-evident. If the book hadn't had all those citation issues, the book might not have entered the jetstream the way it did.
