by Monte Davis

What’s a blind spot? Physically, it might be an area obscured behind a clump of trees in an otherwise open landscape. Or a gap between the views in the side and rear-view mirrors. Lines of sight cut short, or nonexistent.

Metaphorically, it gains a new connotation: a blind spot is something you don’t perceive or understand, and you are unaware of not perceiving or grasping it (while the one who points that out presumably does perceive it). So: no there there.

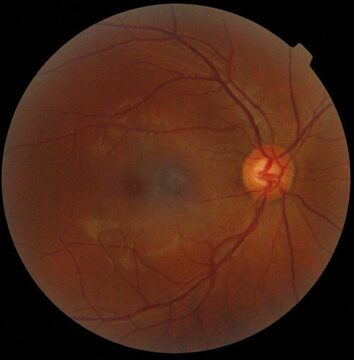

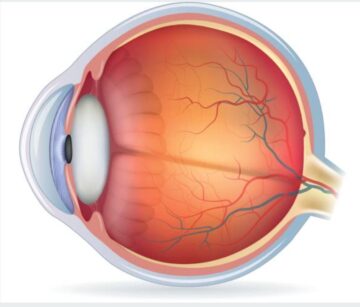

Anatomically, it’s the optic disc – the spot on each retina where neurons with news from all the light-sensitive rods and cones of the retina converge into the optic nerves. The optic disc itself, first described in 1666, has no sensors. So perceptually, your blind spots are two portions of the visual field that are inaccessible to vision. Even if you learned about them long ago, try this simple, clever demo. (The link is a PDF file, and works poorly on a smartphone screen: view it on a desktop or laptop screen, or print it out.)


Are the blind spots big? SWAG it: your field of vision is roughly 210 degrees left to right, 150 degrees top to bottom. The blind spots are ovals 7-8 degrees wide and 5-6 degrees high, making their area less than one percent of the field’s total area. On the other hand, the full moon is just one-half degree across. So those two no-go zones, the blind spots you’re unaware of very nearly all your life, are each about a hundred times the area of the full moon. Are the blind spots small?
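For anyone who wants to check the SWAG, here is a minimal Python sketch of the arithmetic. It assumes that the visual field, a blind spot, and the full moon can all be treated as flat ellipses measured in square degrees (a crude approximation for a field 210 degrees wide), and it takes the midpoints 7.5 and 5.5 degrees for the blind spot. The function and variable names are illustrative only.

```python
import math

def ellipse_area(width_deg, height_deg):
    """Area of an ellipse in square degrees (flat, small-angle approximation)."""
    return math.pi * (width_deg / 2) * (height_deg / 2)

# Figures from the paragraph above; midpoints chosen for the blind spot (assumption).
field = ellipse_area(210, 150)      # whole visual field
blind_spot = ellipse_area(7.5, 5.5) # one blind spot
moon = ellipse_area(0.5, 0.5)       # full moon, half a degree across

both_share = 2 * blind_spot / field  # fraction of the field lost to both spots
moon_ratio = blind_spot / moon       # one blind spot measured in full moons

print(f"Both blind spots: {both_share:.2%} of the visual field")
print(f"One blind spot: about {moon_ratio:.0f} full moons")
```

Under these assumptions the two blind spots together cover roughly a quarter of one percent of the field, and each spans well over a hundred full moons: small and large at once, as the paragraph says.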

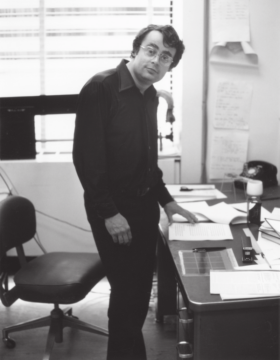

As a magazine science writer in 1978, I had an inch-deep acquaintance with neuroscience when I attended a talk by David Marr at MIT. At 32, Marr had been a rising star since his Cambridge doctoral thesis, A General Theory for Cerebral Cortex. (Yes, it was just as ambitious as that sounds.) Trained in mathematics as well as neurophysiology, Marr developed a schema for questions to be answered at different levels in any theory of an information-processing task. At MIT he concentrated on vision, and his talk was electric with ideas.

In an interview afterward, I asked Marr what he’d like to know about our non-perception of the blind spots. He replied that he hadn’t given it much thought, but the answer would probably involve interpolation from the surroundings of the spots, and integration over time as our gaze moves. Then he brightened:

“But that would be relatively simple sleight of hand. The real trick is keeping you unaware of just how narrow your macular and foveal vision is, and how sketchy all the rest is. I’d love to understand that!”

Look: directly behind the pupil and lens is the macula, an oval yellowish patch about 5 millimeters (less than a quarter-inch) across. Its visual angle is about 5 degrees. Centered in the macula and just visible at center right is a concave dip, the fovea, about 1.5 millimeters (6/100ths of an inch) across. Its visual angle is about 1 degree.

Cones – the photoreceptor cells responsible for color vision, quick to respond to changes – are packed 200 times more densely in the fovea. Rods, more useful in dimmer light but insensitive to color, are concentrated in a ring around the macula. The density of both rods and cones falls off with increasing distance from the macula. The results are dramatic. Let’s say you bring 20/20 vision to bear on a typical printed page at normal reading distance. Fix your eyes. The 1-degree visual angle of the fovea takes in a single 7-letter word. At 2 degrees out from the center, visual acuity is about 20/40. At the edge of the printed page, it’s about 20/140.

Marr’s point was that the area of detailed vision is stunningly small – a few times the area of the full moon. We do not experience how rapidly, going outward from that small area, our eyes deliver only blurs, blobs, and “nothing out there has changed in a way I need to attend to since I last looked that way.”

Well, of course, you may be thinking, but we do “look that way” often. Fair enough: attention redirects the gaze quickly. There are saccades – quick, frequent, automatic eye movements in identifying a face or reading a line of text. There are the “smooth pursuit” movements that follow a moving target, and the vestibulo-ocular firmware that compensates for movements of the head or body so that the visual world remains steady. Still, it’s a long way from the actual keyhole of sharp vision to our conscious impression of a fully detailed, panoramic visual field.

What I remember is the excitement in Marr’s eyes in thinking about tracing that “real trick.” But he died of leukemia just two years later, after finishing Vision: A Computational Investigation into the Human Representation and Processing of Visual Information. His short career was one of the most influential in the history of neuroscience.

*

Twenty years later, I read with fascination science writer Tor Norretranders’ The User Illusion, a translation of his 1991 Danish Mærk verden. The English title echoed a phrase originally used by Alan Kay and his colleagues at Xerox PARC in the 1970s as they developed software and user interfaces for microcomputers – the nascent PCs and workstations. They created the “desktop” interface of files and folders that could be moved and stacked like paper, wastebaskets for deletion, calculator apps with a “key” layout like those of a handheld digital device (itself mimicking the layouts of hand-cranked desk calculators).


These visual and procedural metaphors have very little to do with the computers’ internal states and processes – hence “illusion.” But as Apple and Windows would demonstrate, they have worked for millions, then billions. Norretranders’ book argued that our sensory and cognitive experience, their integration into consciousness, provides the same kind of useful, usable illusion. As he reported based on 1980s research, the senses generate on the order of 12 million bits per second of raw neural data – 10 million bits from vision, 1 million from external touch and proprioception (your body’s position and muscle activity), and the remainder for all the other senses. But the brain activity associated with our stream of conscious thought seems to process fewer than 100 bits per second. That implies a wholesale filtering and discarding of detail.
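To get a feel for the scale of that filtering, here is a minimal Python sketch using only the figures quoted above. It treats "fewer than 100 bits per second" as an upper bound of exactly 100, so the reduction it computes is a lower bound; the variable names are illustrative.

```python
# Figures as quoted in the paragraph above (bits per second).
total_bps = 12_000_000      # all senses combined
vision_bps = 10_000_000     # vision
touch_bps = 1_000_000       # external touch and proprioception
conscious_bps = 100         # upper bound for conscious thought (assumption: exactly 100)

other_bps = total_bps - vision_bps - touch_bps  # remainder for all other senses
reduction = total_bps / conscious_bps           # how many raw bits per conscious bit
kept_fraction = conscious_bps / total_bps       # share of raw data that survives

print(f"Other senses: {other_bps:,} bits per second")
print(f"Reduction: at least {reduction:,.0f} to 1")
print(f"Kept: at most {kept_fraction:.4%} of the raw stream")
```

On these numbers, at least 120,000 bits of raw sensory data are discarded or compressed for every bit that reaches conscious thought.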

Get used to it: it’s bullet points and executive summaries all the way up. Consciousness evolved not to represent the environment as accurately as possible, but to help hominids live long and prosper by quick and dirty modeling of the environment and changes in it in three pounds of cellular jelly — followed by quick, appropriate action in response. Often that’s habitual, even unconscious and automatic action.

Only after The User Illusion did I understand why I’d recalled the exchange with Marr so often. He had happily turned inside out my curiosity about the blind spot, finding more wonder and challenge in how our flitting keyhole view comes to feel so broad and continuous. “Cutting consciousness down to size” is not a deprecation of consciousness, or advocacy of some other, better mode of apprehending the world. It’s simply a recognition that while we take pride in consciousness as a vast collective library / museum / laboratory / playground of thought, at any given moment any given one of us wields a rather small flashlight in that vastness.

*

In 2011, Daniel Kahneman’s Thinking, Fast and Slow offered another encounter with the relations of conscious and not-conscious, how we think the mind works (or should) and how accumulating evidence tries to tell us it does.


Kahneman, who died earlier this year, was trained as a psychologist, then did research on the cognitive mechanisms of attention. The best-known of his many collaborations was with Amos Tversky in the 1970s, when they studied the psychology of judgment and decision-making, designing simple, ingenious experiments to tease out how subjects made choices with incomplete or uncertain information.

Many economists had been pursuing mathematically rigorous theories based on assumptions of ideally rational actors with complete information. They began to take notice of the anomalous choices and systematic errors that turned up in these experiments. By the 1980s, “behavioral economics” was emerging as a more evidence-driven and experimental counterweight. Kahneman would go on to important contributions in other areas of cognitive psychology, but it was this work that was cited in his 2002 Nobel Prize in economics, and is the main background for Thinking, Fast and Slow.

In the book he posited System 1 thinking – fast, often unconscious, heavily influenced by habit, stereotypes, and bias – and System 2 thinking: slower, deliberate, guided by evidence and more or less articulated logic. His research on decision-making had shown that System 1 drives decisions more often than we like to acknowledge. System 2 is a relatively recent overlay, more cognitively costly and thus rarer, but it can be fostered by education and the use of formal analysis and decision-making procedures.

A striking feature of the public response to Thinking, Fast and Slow was how readily it was assimilated to self-help marketing. The cognitive biases (originally a neutral term of art) that Kahneman and Tversky had studied were reformatted in clickbait listicles: “7 Logical Traps Even Smart People Fall Into” and “12 Ways Successful Leaders Make Better Decisions.” Bias is a bad thing to be done away with, right? If we sign up for the right training, subscribe to the right podcasts, couldn’t we become full-time System 2 superthinkers?

I doubt it very much. Kahneman cited experimental demonstrations that System 2 “depletes” concentration and emotional energy as well as being too slow for full-time use. Like foveal vision, it’s a limited resource. We couldn’t get through an ordinary day without hundreds of System 1 snap judgements that don’t rise to the level of deliberate choice. That’s why System 1 exists: it’s an evolutionary and cultural legacy, a toolkit of quick responses that are certainly fallible but have worked well enough, often enough, to be built in. We can and should educate and train for System 2 thought, use information technology to gather and sort more relevant information, design protocols and checklists to be methodical for us. Still, we should also be realistic – even humble? – about how far and how fast its scope can be extended.

Philosopher and educator Alfred North Whitehead saw the wry paradox more than a century ago:

“It is a profoundly erroneous truism, repeated by all copy-books and by eminent people when they are making speeches, that we should cultivate the habit of thinking about what we are doing. The precise opposite is the case. Civilization advances by extending the numbers of important operations which we can perform without thinking about them. Operations of thought are like cavalry charges in battle — they are strictly limited in number, they require fresh horses, and must only be made at decisive moments.”

***
