by Jonathan Kujawa

Recently some colleagues and I were out to lunch. It was our University’s “Dead Week.” This is the week before finals when students are in a last-minute rush to finish projects and study for exams, and faculty are planning how to wind up their courses and beginning to draft their final exams.

My colleagues and I joked that writing a final exam is basically an analog version of the Turing Test: You try to craft questions that can distinguish between the students who actually understand the material and those who “solve” problems by being skilled at pattern recognition and applying rote algorithms.

Coincidentally, around the time of this lunch, OpenAI released ChatGPT. As 3QD readers no doubt have heard, ChatGPT is a large language model that was trained on a significant chunk of the text available as of 2021. Using that text, it developed a model of which words and phrases most often follow one another. Using that model, ChatGPT then writes replies to prompts submitted by the user. The results are equally amazing and banal.

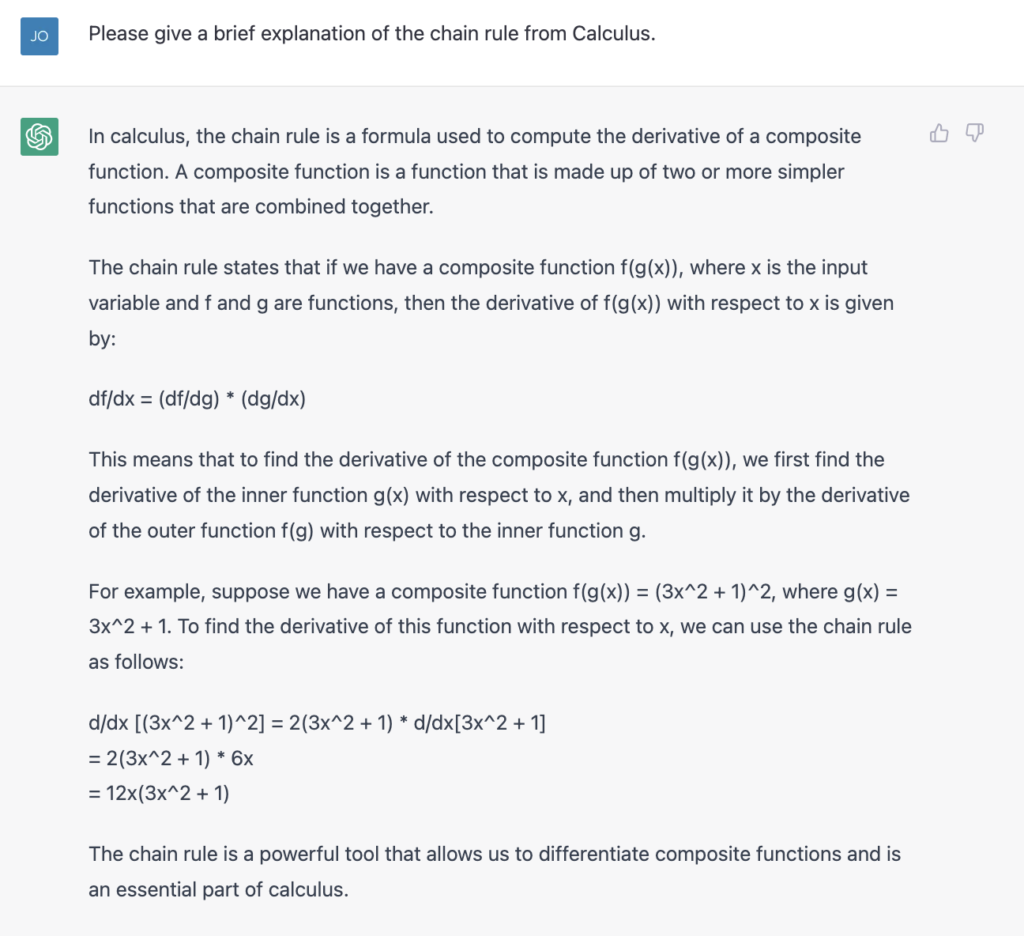

Amazing because it can write computer code, pretend to be a long-lost friend, write a dialogue between Stephen Colbert and John Oliver about UFOs, learn and use a made-up language, and answer an essay question about the chain rule from Calculus.

The last is the sort of question an instructor might ask a first-year student to tease out whether the student’s understanding of the chain rule goes beyond being able to turn the crank to get the correct answer.

Honestly, I’d be pretty happy to get ChatGPT’s answer from most of my students. I even asked a follow-up question about why the chain rule works, and the answer was not bad — I’d probably give it a B. It’s all the more impressive when you consider where such models were a few years ago and that ChatGPT is deliberately handicapped in various ways.

On the other hand, ChatGPT is ultimately banal. Obviously, it doesn’t actually understand what it writes. Based on a large body of text, mostly from the internet, ChatGPT does a sophisticated version of auto-completion. It “writes” by predicting the words that should follow from the submitted prompt [1]. It’s like finding a path through the forest by looking at your feet and, step by step, choosing the most deeply worn footprint. You’ll often find your way through the forest, but you don’t discover anything new and sometimes get caught in a cul-de-sac. ChatGPT’s responses read like a college freshman who only knows the world through reading about it on the internet. Worse, it replies with unflinching certainty, even when writing absolute nonsense [2].
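For readers who like to see the footprint analogy in code, here is a toy sketch in Python. It is far simpler than anything ChatGPT actually does: it only counts which word follows which in a tiny sample text and then always takes the most common next word. The function names `train_bigrams` and `complete`, and the sample text, are made up for illustration and are not taken from any real system.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(follows, prompt, max_words=5):
    """Extend the prompt by repeatedly taking the most worn 'footprint'."""
    out = prompt.lower().split()
    if not out:
        return ""
    for _ in range(max_words):
        candidates = follows.get(out[-1])
        if not candidates:
            # Dead end: this word was never followed by anything.
            break
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical sample text, standing in for "a large body of text".
corpus = "the cat sat on the mat the cat sat down"
model = train_bigrams(corpus)
print(complete(model, "the", max_words=2))
```

The sketch never produces a word pair it has not already seen, which is the sense in which this kind of path-following discovers nothing new, and a word with no recorded follower stops it cold, a small-scale cul-de-sac.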

But this raises some interesting questions. For one, if a computer system has no intelligence, creativity, or understanding but can mimic these qualities, when does it become a distinction without a difference? ChatGPT still falls short, but ChatGPT 2031 may be a different story. What is our place in such a world?

Thinking about ChatGPT and my students made me wonder what it means to educate someone in the modern world. A century ago, education was all about reading, writing, and arithmetic. It was mostly about the rote memorization of facts. When I was a student at the end of the last century, there was recognition that computers and calculators had changed things. Teachers placed a greater emphasis on synthesizing and applying knowledge and less on the mindless computations and regurgitation of facts.

Nowadays, we have Wikipedia, WolframAlpha, ChatGPT, and whatever comes next. I wish I could say our educational system turns out sophisticated, creative, robust thinkers. Unfortunately, we seem stuck in an Industrial Age, assembly-line version of education.

At most universities, Calculus is taught virtually the same way as when I was a student in the pre-internet era. Students learn the chain rule, trig substitution, integration by parts, and various other tricks taught as much for their testability as their educational value. This is partly because this is how it was always done. But it is also due to the practical problem of educating and evaluating dozens or hundreds of students at a time. Many students get through these classes using an ad hoc model that predicts how to solve a problem based on key phrases and symbols they recognize in the question asked.

However, once you get beyond the computational cookbook classes like Calculus, math requires you to be both rule-bound and creative, to generalize and specialize, to know things with certainty and to acknowledge the limits of knowledge, to see both the forest and the trees, and both to grok the ineffable and to communicate your understanding to other humans.

The human mind is still the only computing device capable of managing these contradictory goals. In previous 3QD essays, I’ve talked about ways that computers could revolutionize how we do mathematics. Nevertheless, I believe there is something deeply human about mathematics. As striking as ChatGPT is, it still struggles with what most mathematicians would consider “real” mathematics. I don’t see that fundamentally changing. Let me recommend again Francis Su’s book Mathematics for Human Flourishing. He does a superb job of capturing the many ways learning, teaching, and doing mathematics make us more human.

Perhaps it is my own bias, but I am hopeful that mathematics can help lead the way to a new kind of education [3]. My colleagues and I spend our careers trying to find ways to transcend the robotic and mindless preconceptions of our students. Like Lucy and Ethel at the assembly line, keeping up production means that sometimes corners are cut. But at our best, we teach students to deal with ambiguity, to know when to be careful and when to be reckless, to know what they know and what they don’t know, and to transcend their current limits.

In an era when computers can mimic shallow thinking, our educational system needs to find ways for everyone to think deeply as only humans can.

[1] If you’d like to know how ChatGPT and similar systems came to be, Jill Walker Rettberg, a professor at the University of Bergen, has a very interesting essay about the data and training most likely used for ChatGPT.

[2] Like some college freshmen!

[3] Not to say other disciplines don’t have their own virtues, but I can’t speak to those.