by Jochen Szangolies

Meet Hubert. For going on ten years now, Hubert has shared a living space with my wife and me. He’s a generally cheerful fellow, optimistic to a fault, occasionally prone to a little mischief; in fact, my wife, upon seeing the picture, remarked that he looked inordinately well-behaved. He’s fond of chocolate and watching TV, which may be the reason why his chief dwelling place is our couch, where most of the TV-watching and chocolate-eating transpires. He also likes to dance and is curious, though he sometimes gets overwhelmed by his own enthusiasm.

Of course, you might want to object: Hubert is none of these things. He doesn’t genuinely like anything, he doesn’t have any desire for chocolate, he can’t dance, much less enjoy doing so. Hubert, indeed, is afflicted by a grave handicap: he isn’t real. He can only like what I claim he likes; he only dances if I (or my wife) animate him; he can’t really eat chocolate, or watch TV. But Hubert is an intrepid, indomitable spirit: he won’t let such a minor setback as his own non-existence stop him from having a good time.

And indeed, the matter, once considered, is not necessarily that simple. Hubert’s beliefs and desires are not my beliefs and desires: I don’t always like the same shows, and I’m not much for dancing (although I confess we’re well-aligned in our fondness for chocolate). The question, then, is whom these beliefs and desires belong to. Are they pretend-beliefs, beliefs falsely attributed? Are they beliefs without a believer? Or, for a more radical option, does the existence of these beliefs imply the existence of some entity holding them?

Many authors report the curious sensation of their creations, their characters, refusing to behave as intended. Jim Davies, director of the Science of Imagination Laboratory at Carleton University, in Imagination: The Science of Your Mind’s Greatest Power, calls this the ‘illusion of independent agency’. He notes that many authors report hearing the voices of their characters, being able to enter into dialogues with them, even bargaining with them over their fate in a novel, or receiving unsolicited advice from them.

Indeed, it is not unusual, in the creative process, to feel that one is not so much the originator as merely the conduit of ideas flowing from elsewhere, a ‘brief elaboration of a tube’. As singer-songwriter Nick Cave puts it:

A large part of the process of songwriting is spent waiting in a state of attention before the unknown. We stand in vigil, waiting for Jesus to emerge from the tomb—the divine idea, the beautiful idea—and reveal Himself.

But regardless of the metaphysics of creativity, the recognition that, in one’s own creation, one may be met with something not fully within one’s control, is a striking and powerful one. To simply disregard it as a convenient fiction, or even as an act of self-delusion, is, I think, a disservice to the creative act. But, to the committed metaphysical materialist, admitting the idea of ‘independent agency’ of fictional entities seems dangerously close to inviting the long-exorcised ghost back into the machine: minds, after all, are brains, or perhaps are what brains do; but where is the brain housing the mind of Sherlock Holmes, of Horatio, or of Hubert? And how, if that brain is just identical with their creator’s, could it be surprised by its own machinations?

Breaking out of the Chinese Room

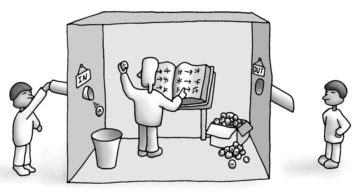

The famous Chinese Room thought experiment, due to the philosopher John Searle, was conceived as an attack on the notion of computationalism—the idea that all that our minds do can be explained as computation performed by the brain. Searle’s thought experiment is as follows. Suppose an enterprising AI researcher had come up with a program capable of holding an intelligible conversation in Chinese—that is, able to pass the Turing test. On this basis, we might want to conclude that, in executing this program, the computer understands Chinese—that, indeed, executing such a program is just what is meant by ‘understanding Chinese’.

Searle now imagines himself locked in a sealed room with an input chute and an output chute, through which Chinese characters are passed (see Fig. 2). To Searle, who does not understand Chinese, these characters are no better than arbitrary squiggles: they carry no meaning. But now suppose that Searle had access to the supposedly Chinese-understanding program in the form of a huge rule book. Using this book, he is able to sort the groups of symbols he receives as input and produce matching groups of symbols in response, in such a way that a meaningful Chinese conversation results. A Chinese speaker outside the room might thus quite reasonably conclude that the room contains another Chinese speaker.

Except, of course, this isn’t the case: Searle simply matches meaningless squiggles to other meaningless squiggles, without any hint of what they might mean. Therefore, or so Searle claims, merely following a program is not sufficient to produce genuine understanding.
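To make ‘matching squiggles to squiggles’ concrete, here is a minimal Python sketch of the room as a lookup table. It is a deliberately crude toy under stated assumptions: the rule entries, the names `RULE_BOOK`, `FALLBACK` and `room`, and the fallback reply are all hypothetical, and a rule book that could actually pass the Turing test would need far more than a fixed table (at the very least, memory of the conversation so far). The only point it illustrates is that nothing in the procedure consults meaning.

```python
# A toy Chinese Room: the hypothetical "rule book" pairs input shapes with
# output shapes. No part of the program stores or consults what any of the
# characters mean.
RULE_BOOK: dict[str, str] = {
    "你好吗？": "我很好，谢谢。",
    "你最喜欢什么颜色？": "黄色。",
}

# Hypothetical reply used when no rule matches the incoming squiggles.
FALLBACK = "请再说一遍。"


def room(squiggles: str) -> str:
    """Take symbols in through the input chute; hand symbols back out.

    The operator only checks whether the incoming string is identical,
    character for character, to the left-hand side of some rule.
    """
    return RULE_BOOK.get(squiggles, FALLBACK)


if __name__ == "__main__":
    for message in ["你好吗？", "你最喜欢什么颜色？", "今天天气怎么样？"]:
        print(message, "->", room(message))
```

For readers without Chinese: the two rules answer ‘How are you?’ with ‘I’m fine, thanks.’ and ‘What is your favorite color?’ with ‘Yellow.’, while the unmatched ‘How’s the weather today?’ gets the fallback ‘Please say that again.’ Those glosses live only in this paragraph, never in the program.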

The most obvious reply to Searle’s conclusion is that, even if following a particular program is sufficient for understanding Chinese, it does not follow that Searle, inside the room, should acquire such an understanding. Searle, after all, is just one part of the system implementing the program; by analogy, nobody would claim that, when a computer executes a program for speaking Chinese, it is the CPU that gains an understanding of Chinese.

In anticipating this ‘systems reply’ to his argument, Searle proposed that he instead memorize the entire rule book; then, rather than flipping through its pages, he just ‘looks up’ the appropriate characters in his mind. In this case, all that is left of the system is Searle himself; yet he still won’t have any understanding of Chinese. In particular, if you asked Searle, in English, what the topic of a recent conversation carried out in Chinese was, he would have no way of knowing.

But this answer seems somewhat question-begging. For instance, suppose I ask Searle, in Chinese (aided perhaps by Google Translate), what his favorite color is, and get the answer ‘yellow’. Then I ask again, this time in English, and receive ‘blue’ for an answer. Clearly, Chinese-speaking Searle has a different opinion from English-speaking Searle; and the same might happen with questions about politics, favorite foods, or football clubs. Indeed, Chinese-speaking Searle might conceivably disagree with English-speaking Searle on whether program execution is sufficient for understanding Chinese!

It seems plausible, then, to consider the mind of Chinese Searle distinct from that of English Searle. In learning the rule book, and internalizing the process of using it to carry on a conversation in Chinese, Searle has essentially instantiated a ‘virtual mind’, with its own beliefs and desires. English Searle, then, ought not to expect to share Chinese Searle’s understanding, any more than I should expect to share yours. If that is right, then Searle’s argument fails to establish that computation is insufficient for understanding; but it does illustrate the possibility of two distinct minds cohabiting within the same brain. (Note that accepting this incurs no commitment to computationalism, which, I believe, suffers from other fatal problems.)

The Overextended Mind

Searle also rejected, on different grounds, the suggestion that it could be the system as a whole that understood Chinese: he held that ‘the conjunction of that person and bits of paper’ could not seriously be considered the locus of understanding unless one is ‘under the grip of an ideology’.

However, in recent years, the thesis that minds are not necessarily confined to brains has grown greatly in popularity. This idea, the ‘extended mind’, introduced by the philosophers David Chalmers and Andy Clark in their 1998 paper of the same name, essentially holds that the various accoutrements we use to supplement our mental capacities (notebooks, calculators, smartphones) are not functionally different from brain circuitry carrying out comparable functions, and thus ought to be considered as taking part in mental activity proper. That is, it’s not just brains that generate minds; a brain augmented with something as simple as a notepad might give rise to a mind different from that produced by the unaided brain.

The extended mind hypothesis is a kind of pulling-in of inert objects into the mental space, where the work of mind-production is carried out. In contrast, the idea that Hubert might have a mind of his own is an outward projection: Hubert, lacking a biological brain, would have to be imbued with his mental properties (if he has any) by an external agent. The system of myself and Hubert gives rise to a mind distinct from that produced by my brain alone, which then projects its mental properties onto Hubert as the believer of the attendant beliefs, the desirer of the conjured desires.

This is, surely, a fantastical idea, and should not be accepted without due consideration. After all, accepting the reality of a particular mind has grave consequences for our stance towards the entities judged to possess it. In particular, whether we hold something to have a mind generally determines its moral status. Are we, then, to accord moral standing to every child’s doll? Is an author guilty of something akin to murder for killing off a character?

Yet the idea that mental properties like desires and beliefs are not so much inherent to an entity as ascribed to it is not entirely new: Daniel Dennett, philosopher and (erstwhile?) member of the Four Horsemen of New Atheism, has proposed the concept of the ‘intentional stance’. Intentionality, a concept (re-)introduced into modern philosophy by the psychologist Franz Brentano, who borrowed the term from medieval scholasticism, here means the other-directedness of mental content: the property of thoughts to be about something, of beliefs and desires to have an object they are directed at.

The idea of the intentional stance is that when we attribute intentional states to an entity, what we’re really doing is applying a heuristic: it is much simpler to say that Jane reached for the apple because she wanted to eat it than to substitute the full story of biochemical reactions, neuron spiking patterns, muscle contractions and so on that, at the microphysical level, connect a lack of nutrients with the act of obtaining a source of them.

What’s striking about this is that the presence of mind becomes, to a certain extent, an interpretational question. I think that this is probably a true conclusion, even if I don’t agree with Dennett that intentionality is externally attributed—I believe that minds are, essentially, self-interpreting systems, and that their intentionality derives from this act of self-interpretation.

A full discussion of this idea would take us too far afield (for which, see my articles on the topic, or their freely accessible preprint versions on my academia.edu page), but the basic motif is that of a self-reading and self-assembling blueprint. This sounds dangerously close to inviting paradox (and in a way, it is), but the great Hungarian polymath John von Neumann figured out a way to make it work, using his universal constructor. (Hence, I’ve called my proposal the ‘von Neumann Mind’, and it’s a life goal of mine to see it eventually featured on the list of things named after John von Neumann.)

This echoes a dictum of Douglas Hofstadter, physicist and author of the magnificent Gödel, Escher, Bach: An Eternal Golden Braid. In The Mind’s I, written together with Dennett, he proposes that ‘mind is a pattern perceived by a mind’. I might substitute ‘interpreted’ for ‘perceived’, but overall, I agree with the sentiment. (Indeed, I was surprised when, recently, I found that Hofstadter himself appealed to von Neumann’s construction in I Am a Strange Loop, the successor to GEB, in a discussion between two ‘strange loops’.)

Hofstadter himself is moved to consider the possibility of instantiating other minds within one’s own. However, his motivation is far less cheerful than mine in grappling with Hubert’s occasional mischief: wracked with grief at the untimely death of his wife Carol, he struggles to come to terms with the idea that her conscious experience, her self, her beliefs and desires should simply be extinguished. As he puts it:

The name “Carol” denotes, for me, far more than just a body, which is now gone, but rather a very vast pattern, a style, a set of things including memories, hopes, dreams, beliefs, loves, reactions to music, sense of humor, self-doubt, generosity, compassion, and so on. Those things are to some extent sharable, objective, and multiply instantiatable, a bit like software on a diskette. […] I tend to think that although any individual’s consciousness is primarily resident in one particular brain, it is also somewhat present in other brains as well […].

Hofstadter’s reasoning might easily be dismissed as the desperate grasping at straws of a recent widower, aching for a balm to soothe the raw loss. After all, in a similar situation, many turn to the comforts of religion and its promises of eternal life.

But in Hofstadter’s view, a self is, ultimately, a self-referential ‘strange loop’, a pattern perceiving itself, like an image containing itself. And indeed, this is the logical form of von Neumann’s construction.
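The trick that keeps a self-describing blueprint from collapsing into an infinite regress is that the description is used in two ways: once interpreted as instructions, once copied without interpretation. A quine, a program that prints its own source, has the same logical form in miniature. The Python sketch below is offered only as an illustration of that logical form, under the assumption that string formatting is a fair stand-in for ‘interpreting’ and `%r` for ‘copying’; the names `blueprint` and `offspring` are illustrative, and von Neumann’s actual cellular-automaton constructor is vastly more elaborate.

```python
# The "description": a template for a whole program, with one slot (%r)
# into which a verbatim copy of the description itself will be placed.
blueprint = 'blueprint = %r\nprint(blueprint %% blueprint)'

# The same description is used in two ways at once:
#   * %r inserts an uninterpreted copy of it (the copier's job), and
#   * the rest of the template is interpreted as a format string
#     (the constructor's job), e.g. turning "%%" into "%".
offspring = blueprint % blueprint
print(offspring)
```

The two-line program this prints is itself a quine: run it, and it prints exactly its own source. It never contains a complete picture of itself; it contains a description plus an instruction to copy that description, which, on this reading, is the move von Neumann’s construction relies on.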

But such a loop may persist even beyond its original context, and is therefore not tied to any particular substrate, biological or otherwise. Thus, to suppose that one’s mind could contain within itself multiple loops (perhaps echoes, partial recollections of persons one knows well enough to know, and to partake in, their beliefs and desires), in the same manner in which one self-referential image might contain other self-referential images, is not in itself absurd.

The Self within the Other, the Other within the Self

All this is not intended to convince anybody that yes, Hubert is his own self, with his own beliefs and desires. It is, however, intended to question a particular, and to my mind pernicious, belief about the relation between mind and world that has become pervasive in modern culture: namely, that we are all little isolated islands of self, each single brain giving rise to one instance of selfhood, a singular consciousness infinitely removed from all others.

Rather, we should think in terms of systems. Just as the system of myself and my notebook gives rise to a mind distinct from what my brain on its own might produce, the system of you and me produces yet a further mind; and, to the extent that a system can persist even if some of its components do not, as a car persists after losing its muffler, the attendant minds might transcend any of the brains composing them.

Moreover, all of us partake in many systems: with objects in the world, with plush animals, but also with each other, with families, and even with institutions. To the extent that all of these systems may share in the work of producing minds, each of us is associated not with a single mind, but with a plethora, a chorus of mentality. More than that, every one of us is a collection of systems: granting that systems combining brains with various extensions may give rise to minds, there is no reason subsystems of the brain could not do so as well. If it’s minds all the way up, there’s no reason it shouldn’t be minds at least a good way down.

Minds are not solitary prisoners of bony brain-boxes, with no hope of ever really reaching out to one another. Indeed, to the extent that our extended minds share substrates (if we share the same notebook, say, or, more broadly, are all connected via the internet, via Google and Wikipedia), we all partake in the same process of mind-creation. Nobody really exists just for themselves; having a mind is taking part in the world, and thus in one another. We are, truly, all in this together.