Ingrid Fadelli in Phys.Org:

Emerging technologies such as artificial intelligence (AI) algorithms, mobile robots and unmanned aerial vehicles (UAVs) could enhance practices in a variety of fields, including cinematography. In recent years, many cinematographers and entertainment companies have begun exploring the use of UAVs to capture high-quality aerial video footage (i.e., videos of specific locations taken from above). Researchers at the University of Zaragoza and Stanford University recently created CineMPC, a computational tool that can be used for autonomously controlling a drone’s on-board video cameras. This technique, introduced in a paper pre-published on arXiv, could significantly enhance current cinematography practices based on the use of UAVs.

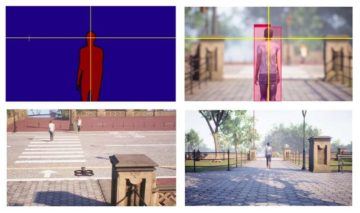

“When reading existing literature about autonomous cinematography and, in particular, autonomous filming drones, we noticed that existing solutions focus on controlling the extrinsics of the camera (e.g., the position and rotations of the camera),” Pablo Pueyo, one of the researchers who carried out the study, told TechXplore. “According to cinematography literature, however, one of the most decisive factors that determine a good or a bad footage is controlling the intrinsic parameters of the lens of the camera, such as focus distance, focal length and focus aperture.”

A camera’s intrinsic parameters (e.g., focus distance, focal length and aperture) are those that determine which parts of an image are in focus or blurred, which can ultimately change a viewer’s perception of a given scene. Being able to change these parameters allows cinematographers to create specific effects, for instance, producing footage with varying depth of field or effectively zooming into specific parts of an image. The overall objective of the recent work by Pueyo and his colleagues was to achieve optimal control of a drone’s movements in ways that would automatically produce these types of effects.
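
To make the link between these lens parameters and what appears sharp more concrete, here is a minimal Python sketch based on the standard thin-lens depth-of-field approximation. It is an illustration only and is not taken from CineMPC or the researchers' paper; the function name `depth_of_field` and the default circle of confusion of 0.03 mm (a common full-frame convention) are assumptions made for this example.

```python
import math


def depth_of_field(focal_length_mm, f_number, focus_distance_mm, coc_mm=0.03):
    """Return (near_limit_mm, far_limit_mm) of acceptable sharpness.

    Uses the thin-lens approximation. coc_mm is the circle of confusion,
    an assumed value that depends on sensor size and viewing conditions.
    """
    f = focal_length_mm
    s = focus_distance_mm
    # Hyperfocal distance: focusing here keeps everything out to infinity sharp.
    hyperfocal = f * f / (f_number * coc_mm) + f
    near = s * (hyperfocal - f) / (hyperfocal + s - 2 * f)
    # At or beyond the hyperfocal distance the far limit extends to infinity.
    far = math.inf if s >= hyperfocal else s * (hyperfocal - f) / (hyperfocal - s)
    return near, far


if __name__ == "__main__":
    # Subject 5 m away, 50 mm lens: compare a wide and a narrow aperture.
    for n in (2.8, 11):
        near, far = depth_of_field(50, n, 5000)
        print(f"f/{n}: sharp from {near / 1000:.2f} m to {far / 1000:.2f} m")
```

Under this approximation, opening the aperture (a smaller f-number) or using a longer focal length narrows the zone that appears sharp, which is the kind of effect a cinematographer uses to isolate a subject from its background.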

More here.