by Fabio Tollon

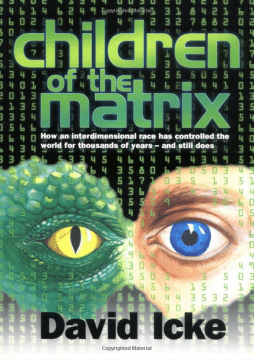

In 2019 Buckey Wolf, a 26-year-old man from Seattle, stabbed his brother in the head with a four-foot-long sword. He then called the police on himself and admitted his guilt. Another tragic case of mindless violence? Not quite, as there is far more going on in the case of Buckey Wolf: he committed murder because he believed his brother was turning into a lizard, specifically a kind of shape-shifting reptile that lives among us and controls world events. If this sounds fabricated, unfortunately it is not. Over 12 million Americans believe (“know”) that such lizard people exist, that they are to be found at the highest levels of government, and that they control the world economy for their own cold-blooded interests. This reptilian conspiracy theory was popularized by the well-known charlatan David Icke.

Further investigation into the Wolf murder case revealed an interesting trend in his YouTube “likes” over the years: his interests shifted from music to martial arts, fitness, media criticism, firearms and other weapons, and video games. From there, it seems, Wolf was drawn into the world of alt-right political content.

In a recent paper, Alfano et al. study whether YouTube’s recommender system may be responsible for such epistemically retrograde ideation. Perhaps the first case of murder by algorithm? Well, not quite.

In their paper, the authors aim to discern whether technological scaffolding was at least partially involved in Wolf’s atypical cognition. They use a theoretical framework known as technological seduction, in which technological systems try to read users’ minds and predict what they want. In such scenarios, as when Google offers predictive text, we as users are “seduced” into believing that Google knows our thoughts, especially when we end up following the system’s recommendations.

So how exactly does the recommender system work? First, it relies on bottom-up seduction, whereby the system generates suggestions from aggregated user data. This data is collected by combining various “digital footprints” of users, such as their location and search history. YouTube’s recommender system is optimized to encourage users to watch entire videos, as this is how YouTube generates more revenue: users who watch videos through to the end are more likely to see adverts displayed alongside or embedded in the video they are watching. The system therefore recommends, through the “AutoPlay” feature (which is enabled by default), the videos that users are likely to spend the most time watching. The burning question at this point is whether this recommender system can reliably lead users down epistemically problematic rabbit holes. In other words, is it possible to discern a pattern in YouTube’s AutoPlay system that takes users from ABBA to lizard people? This becomes especially significant when you consider that 70% of all watch time on YouTube is due to videos suggested by the recommender system.
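To make the optimization target concrete, here is a toy sketch of a recommender that, in the spirit of the AutoPlay behavior described above, always queues the candidate with the highest predicted watch time. The `Candidate` type, the `autoplay_next` function, the video titles, and the watch-time estimates are all hypothetical illustrations; YouTube’s actual system is proprietary and far more complex, and this does not model its internals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Candidate:
    title: str
    # Hypothetical model estimate of how many minutes this user will watch.
    predicted_minutes: float


def autoplay_next(candidates: list[Candidate]) -> Candidate:
    """Greedily pick the next video by predicted watch time (toy objective)."""
    if not candidates:
        raise ValueError("no candidates to recommend")
    # The only criterion is expected engagement; nothing here checks
    # whether the content is accurate or distorts evidence.
    return max(candidates, key=lambda c: c.predicted_minutes)


queue = [
    Candidate("Hypothetical music video", 3.5),
    Candidate("Hypothetical hour-long commentary", 41.0),
    Candidate("Hypothetical news clip", 6.0),
]
print(autoplay_next(queue).title)  # Hypothetical hour-long commentary
```

What matters in the sketch is what is absent: the objective contains no term for epistemic quality, only for expected engagement.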

To test this theory, the authors used a “web crawler” that simulated a user, followed all the recommended videos five layers deep, and noted at each step which videos were recommended. They then assigned each video a score from 1 to 3:

“Videos receiving a ‘1’ have no conspiratorial content, videos receiving a ‘2’ contain a claim that powerful forces influence (or try to influence) the topic of the video, and videos receiving a ‘3’ contain claims that forces influence (or try to influence) the topic of the video and also systematically distort evidence about their actions or existence.”
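The crawl-and-score procedure can be sketched as a breadth-first traversal of a recommendation graph, stopping at a fixed depth and then tallying the 1-3 scores of every video reached. This is not the authors’ code: the graph, video IDs, and scores below are invented, the helper names `crawl` and `score_tally` are hypothetical, and “five layers deep” is assumed here to mean five recommendation hops away from the seed video.

```python
from collections import Counter, deque

# Hypothetical recommendation graph: video ID -> IDs recommended alongside it.
RECOMMENDATIONS = {
    "start": ["a", "b"],
    "a": ["c"],
    "b": ["c", "d"],
    "c": ["e"],
    "d": [],
    "e": ["f"],
    "f": ["g"],
    "g": [],
}

# Hypothetical hand-assigned scores on the 1-3 scale quoted above.
SCORES = {"start": 1, "a": 1, "b": 2, "c": 1, "d": 3, "e": 2, "f": 3, "g": 3}


def crawl(seed: str, max_depth: int = 5) -> dict[str, int]:
    """Breadth-first crawl from seed, following recommendations up to max_depth hops.

    Returns a mapping from each reached video to the hop at which it was first seen.
    """
    depth_of = {seed: 0}
    frontier = deque([seed])
    while frontier:
        video = frontier.popleft()
        if depth_of[video] == max_depth:
            continue  # record videos at the last layer but do not expand them
        for rec in RECOMMENDATIONS.get(video, []):
            if rec not in depth_of:
                depth_of[rec] = depth_of[video] + 1
                frontier.append(rec)
    return depth_of


def score_tally(videos) -> Counter:
    """Count how many crawled videos received each score."""
    return Counter(SCORES[v] for v in videos)


reached = crawl("start")
tally = score_tally(reached)
print(tally[3] / len(reached))  # 0.375 (3 of 8 toy videos scored "3")
```

Breadth-first order guarantees that each video is recorded at the shallowest layer where a recommendation chain first reaches it, which is what a layer-by-layer count needs.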

The key feature distinguishing mild from severe conspiracy theories is how amenable they are to counterevidence. Severe conspiracy theories are especially sticky, and overt distortion of the available evidence is their hallmark. They are, in a sense, unfalsifiable.

Six different starting points were used for the web crawler, chosen to reflect predicted pathways to conspiracy theories: martial arts, fitness, firearms and other weapons, natural foods, tiny houses, and gurus. The search terms used in each genre ranged from “Jordan Peterson”, “Ben Shapiro” and “Joe Rogan” in the guru category to “GMO”, “apple cider vinegar” and “Monsanto” in the natural foods category. The resulting videos were then sorted according to the 1-3 scale outlined earlier.

I will focus here on the most problematic of the genres: gurus, firearms, and (perhaps surprisingly) natural foods. In the guru category, almost 50% of the videos contained conspiratorial content. For firearms the figure was just under 10%, and for natural foods just over 10%. While some topics may seem better off than others, one must keep in mind what is being measured here. Videos with a rating of “3” systematically distort evidence. This means that YouTube’s AutoPlay feature is promoting content that actively misrepresents the available evidence to push some agenda. Moreover, YouTube was found to promote conspiratorial content in all six categories. This is extremely worrying when one considers that YouTube has 2 billion users who together watch 1 billion hours of video per day.

What emerges from the foregoing discussion is that the YouTube recommender system encourages users to view counter-normative and epistemically problematic content. This raises several serious questions about the deployment of such algorithmic systems. First, it seems obvious that YouTube ought to exercise greater oversight of the kind of content it recommends. The recommender system, designed for profit, seemingly lacks an adequate epistemic component: it does not appear to have a good enough mechanism for distinguishing epistemically sound content from epistemically problematic content. This blindness is especially troubling because YouTube seems to be making more money as a result of the system. No amount of profit should justify the promotion of views such as those of David Icke or Alex Jones.

Second, this discussion highlights the importance of design in such systems. For example, on the YouTube platform AutoPlay is “on” by default. However, there is good evidence that simply turning off this default might significantly reduce the amount of problematic content users consume, as defaults are extremely powerful behavioral nudges. Moreover, this design-level perspective suggests that we should seriously consider whether technology can influence what we value. This contrasts with the so-called value neutrality thesis, which claims that technology can come to have value only through the ways in which human beings use it. On this view, technology is merely a neutral vehicle through which human beings achieve their ends. The YouTube case, however, suggests that artificial systems can indeed influence the kinds of things we come to value. We lack the counterfactual evidence needed to show whether fewer conspiratorial videos would be viewed in the absence of the recommender system. What we can say is that it seems no one would consciously admit to wanting to believe systematically distorted content. Even someone like Buckey Wolf, who believed his brother was turning into a lizard, presumably thought he had good reasons for this belief. Over a period of years, Wolf was slowly nudged towards more and more conspiratorial content, which led him to endorse and come to value various problematic alt-right conspiracy theories. At the very least, it seems less likely that he would have held the same beliefs in the absence of such seduction.

Third, this evidence raises questions about the kinds of virtues we ought to cultivate as digital citizens. While regulatory change is often slow to come, especially when profits are involved, there are steps we can take as individuals to reduce the impact of these seductive systems. We can simply investigate which defaults are presented to us, and whether we are better off without them. We could also promote the virtue of epistemic vigilance. Vigilance is a dynamic, enduring, and intentional activity that requires effort on the part of the user to avoid problematic content. Such a virtue now seems more necessary than ever.