Grigori Guitchounts in Nautilus:

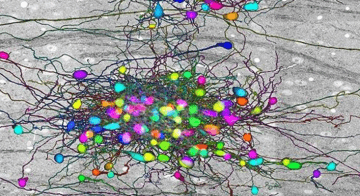

On a chilly evening last fall, I stared into nothingness out of the floor-to-ceiling windows in my office on the outskirts of Harvard’s campus. As a purplish-red sun set, I sat brooding over my dataset on rat brains. I thought of the cold windowless rooms in downtown Boston, home to Harvard’s high-performance computing center, where computer servers were holding on to a precious 48 terabytes of my data. I have recorded the 13 trillion numbers in this dataset as part of my Ph.D. experiments, asking how the visual parts of the rat brain respond to movement. Printed on paper, the dataset would fill 116 billion pages, double-spaced. When I recently finished writing the story of my data, the magnum opus fit on fewer than two dozen printed pages. Performing the experiments turned out to be the easy part. I had spent the last year agonizing over the data, observing and asking questions. The answers left out large chunks that did not pertain to the questions, like a map leaves out irrelevant details of a territory. But, as massive as my dataset sounds, it represents just a tiny chunk of a dataset taken from the whole brain. And the questions it asks—Do neurons in the visual cortex do anything when an animal can’t see? What happens when inputs to the visual cortex from other brain regions are shut off?—are small compared to the ultimate question in neuroscience: How does the brain work?

The nature of the scientific process is such that researchers have to pick small, pointed questions. Scientists are like diners at a restaurant: We’d love to try everything on the menu, but choices have to be made. And so we pick our field, and subfield, read up on the hundreds of previous experiments done on the subject, design and perform our own experiments, and hope the answers advance our understanding. But if we have to ask small questions, then how do we begin to understand the whole?

More here.