From Simons Foundation:

At each instant, our senses gather oodles of sensory information, yet somehow our brains reduce that fire hose of input to simple realities: A car is honking. A bluebird is flying.

How does this happen?

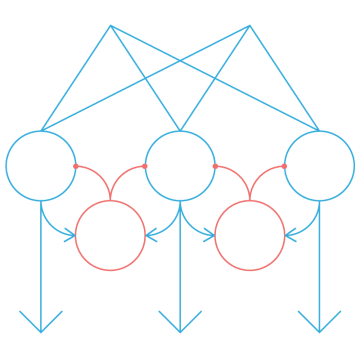

One part of simplifying visual information is ‘dimensionality reduction.’ The brain, for instance, takes in an image made up of thousands of pixels and labels it ‘teapot.’ One such simplification strategy shows up repeatedly in the brain, and recent work from a team led by Dmitri Chklovskii, group leader for neuroscience at the Center for Computational Biology, suggests the strategy may be no accident.

Consider color. In the brain, one neuron may fire when a person looks at a green teapot, whereas another fires at a blue teapot. Neuroscientists say that these cells have localized receptive fields: each neuron responds strongly to one hue, and together the neurons span the entire rainbow. Similar setups allow us to distinguish aural pitches.

Conventional artificial neural networks accomplish similar tasks, such as classifying images, but these algorithms work completely differently from those in the brain. Many artificial networks, for instance, tweak the connections between neurons by using information from distant neurons. In a real brain, the strength of a connection depends predominantly on the activity of nearby neurons. By extending a tradition of emulating biological learning, Chklovskii and his collaborators developed an approach that is not only biologically plausible but also powerful.
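To make the idea of a "local" learning rule concrete, here is a minimal sketch using Oja's rule, a classic textbook example and not the algorithm developed by Chklovskii's team. A single model neuron adjusts each connection using only the input arriving at that connection, its own output, and the connection's current strength, yet it ends up pointing along the direction of greatest variance in the data, which is a simple form of dimensionality reduction. The toy data, function names, learning rate, and seeds are all assumptions chosen for illustration.

```python
import math
import random


def oja_update(w, x, lr):
    """One step of Oja's rule. The change to each weight uses only that
    weight, its own input x[i], and the neuron's output y (all local)."""
    y = sum(wi * xi for wi, xi in zip(w, x))  # the neuron's output
    return [wi + lr * y * (xi - y * wi) for wi, xi in zip(w, x)]


def train(samples, lr=0.01, epochs=20, seed=0):
    """Start from small random weights and sweep over the data repeatedly."""
    rng = random.Random(seed)
    w = [rng.uniform(-0.5, 0.5) for _ in range(len(samples[0]))]
    for _ in range(epochs):
        for x in samples:
            w = oja_update(w, x, lr)
    return w


def make_data(n=500, seed=1):
    """Hypothetical toy data: 2-D points stretched along the (1, 1) diagonal."""
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        a = rng.gauss(0.0, 2.0)  # large spread along the diagonal
        b = rng.gauss(0.0, 0.3)  # small spread across it
        data.append([(a + b) / math.sqrt(2), (a - b) / math.sqrt(2)])
    return data


samples = make_data()
w = train(samples)
norm = math.sqrt(sum(wi * wi for wi in w))
# Absolute cosine between the learned weights and the (1, 1) direction;
# a value near 1 means the neuron found the axis of greatest variance.
alignment = abs(w[0] + w[1]) / (math.sqrt(2) * norm)
print("weights:", w, "alignment:", alignment)
```

Nothing in `oja_update` requires a signal from a distant part of the network, which is the contrast the passage draws with the way many artificial networks are trained.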

More here.