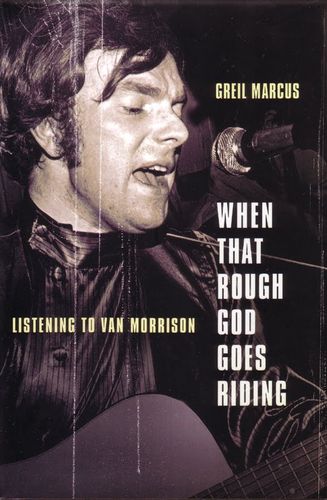

Greil Marcus is a music journalist, critic, and observer of America. Though they span countless subjects, Marcus’ past books have been rooted in examinations of icons like Bob Dylan, the Sex Pistols, Elvis Presley, and Bill Clinton. In his latest release, When that Rough God Goes Riding: Listening to Van Morrison, he takes on the Irish singer-songwriter’s vast, varied catalogue, documenting his own responses to Morrison’s music as well as the far-flung cultural and psychological resonances it sets off. Colin Marshall originally conducted this interview on the public radio program and podcast The Marketplace of Ideas.

How much should we read into the fact that this book is about not the work of an American, but the work of an Irishman? Of course you've written about U.K. artists before — say, the Sex Pistols. Should we consider this a departure from your normal thread?

I don't know if it's a departure, or, if it were, if that would be of any interest or significance. I spent nine years writing a book about the European avant-garde. America is a subject I will never leave behind, but Van Morrison is a voice, someone I've been listening to for 45 years. It's not really important where he comes from.

I want to get an idea of the very beginning of your own career listening to him. Where does it start?

I was 20 years old, living in the San Francisco Bay Area. Van Morrison, with his band Them or on his own, had always been extraordinarily popular in the Bay Area. His song “Gloria”, which everybody knows, was a national hit in 1965 for a Chicago band called the Shadows of Knight. It was only in California that Them had the hit, that their version got the most airplay. Now, of course, nobody remembers the Shadows of Knight, their version never gets played on the radio, and Van Morrison's still does. I was here. It was on the radio. Not just that song, but “Mystic Eyes” and “Here Comes the Night”. They were all glamorous and big, and they had a desperation I wasn't hearing anywhere else. I was captivated.

That desperation — I want to hear more. How distinct was that from the musical context you heard Van Morrison in?

He sounded like somebody pursued, as if there was something at his back he had to get away from. There was a sense of jeopardy in his music. Whether that came from something personal or something he heard in John Lee Hooker that he particularly liked and wanted to emulate, I don't know. It became, as any stylistic theme or element becomes after a while, a thing in itself. Its source, whatever might've sparked it in the first place, becomes irrelevant. It becomes part of your style, your personality. That happened very quickly with him.

This gets at what I so enjoy about the type of criticism you write and the way you approach Van Morrison's work. It doesn't matter whether Van Morrison, in his life, actually did feel pursued, or what events might have made him feel desperation. Is it safe to say it doesn't interest you whether the events of his life contributed to his music? It's about the music itself and nothing more, correct?

That's absolutely right. I don't have any interest in the private lives of the people I am intrigued by and that I might end up writing about. To trace anybody's work, what they produce, what they put into the world, what you or I respond to, to somebody's life, their biography, is utterly reductionist. It's simply a way of protecting ourselves from the imagination, from the threat of the imagination. Some people are very uncomfortable with the idea they can be moved, they can be threatened, they can be thrilled by something that is just made up.

John Irving, the novelist, once said to me, “You know why that is? It's because people who don't have an imagination are terrified of people who do.” I don't know if that's true, but we live in a culture of the memoir, where we're not supposed to believe anything unless it's documented that it actually happened. Never mind that most memoirs are more fictional than novels. We want that imprimatur: “This really happened. This is really true.” Then you can respond to it. You can feel “okay” about being moved by it. Whereas with art, whether music, movies, novels, or painting, to be moved by something somebody has made up is, from a certain perspective, to be tricked. To be fooled. You made me cry, and you did it as if you'd hypnotized me. I love that. Not everybody does.

This is intriguing when I think about what you quote Van Morrison as saying. You describe interviewers asking him, “Who's Madame George? What's this and that about? What's the real source?” One thing Morrison said that has stuck with me is that these songs are fictions, short stories in musical form. It seems like, when an artist says something is fiction, it validates being tricked, in a sense. But people don't tend to read it that way?

I think people don't believe it. I was very struck by that statement, which he made just a year or so ago on the radio. I was listening to this program on NPR, and that's what he said. I think, for a lot of people, that must have come off as defensive or evasive. How could anything like the song “Madame George” on the album Astral Weeks, an album that came out in 1968, a song people have been listening to, discovering, and passing on all that time, a song that has a life in the world, how can anything rendered with such passion and with such detail be made up? It's got to be true. There has to be a real Madame George in the life of the composer. That's one way of looking at things. It isn't mine. With very few exceptions, investigating work on those terms isn't anything I want to read about.

Look, there are all kinds of people who suffer great traumas, who have life-changing experiences that become touchstones for them. Maybe they lost a parent or were in a terrible accident, laid up for five years, unable to do anything but think. We say, “Well, that's what made this person who he or she is. That's what let him or her do the work.” But all sorts of people experience traumas, and few go on to produce something other people pay attention to. You can't trace any given work somebody produces to anything that happened in that person's life. It doesn't work.

Brownian motion at a macro-scale. That's what my working week feels like these days. On Monday I flew from Delhi to Ahmedabad, the next day to Bangalore, a couple of days later to Patna via Calcutta. And now, after a tense hour's delay in Patna, while this decrepit Air-India plane was late arriving into that one-room box of an airport, we're finally off and away. We'll land in Delhi with just the right sliver of time for me to catch the only direct flight to Goa today. We'll be going for a friend's 40th birthday bash on the beach this holiday weekend.