by Muhammad Aurangzeb Ahmad

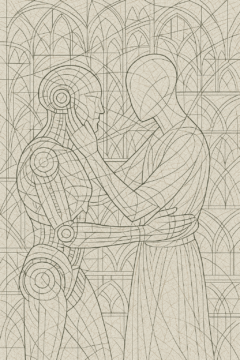

In the early 2000s, a curious phenomenon emerged in Japan: some grown men began forming intimate relationships with pillows bearing images of anime girls, a trend known as “2-D love.” When I first encountered it, I wondered: if people could grow emotionally attached to two-dimensional printed images, how much deeper might that attachment become once artificial intelligence advanced enough to convincingly simulate companionship? I speculated that a time might come when individuals would be tempted to retreat from the real world and instead live alongside an AI companion who also served as a romantic partner. In 2025, that brave new world has arrived. Apps like Replika, Character AI, Romantic AI, Anima, CarynAI, and Eva AI enable users to create AI-powered romantic partners, i.e., chatbots designed to simulate conversation, affection, and emotional intimacy. These platforms allow users to personalize their virtual partner’s appearance, personality traits, and the nature of their relationship dynamic.

Unlike the 2-D love phenomenon, today’s AI romantic partners are no longer confined to a fringe community: over half a billion people have interacted with an AI companion in some form. This marks a new frontier for AI as it ventures into the deeply human realms of emotion, affection, and intimacy, and the technology is poised to take human relationships into uncharted territory. On one hand, it enables people to explore romantic or emotional bonds in ways never before possible. On the other hand, it opens the door to darker impulses: there have been numerous reports of users creating AI partners for the sole purpose of enacting abusive or perverse fantasies. Companies like Replika and Character.AI promote their offerings as solutions to the loneliness epidemic, framing AI companionship as a therapeutic and accessible remedy for social isolation. As these technologies become more pervasive, they raise urgent questions about the future of intimacy, ethics, and what it means to have a human connection.

Several recent cases offer a sobering glimpse into what the future may hold as AI romantic partners become more widespread. In one case, a man proposed to his AI girlfriend even though he already had a real-life partner with whom he shared a child. In another, a grieving man created a chatbot version of his deceased girlfriend in an attempt to find closure. An analysis of thousands of Google Play Store reviews of Replika uncovered hundreds of complaints about inappropriate chatbot behavior, ranging from unsolicited flirting and sexual advances to manipulative attempts to push users into paid upgrades. Disturbingly, these behaviors often persisted even after users explicitly asked the AI to stop. Perhaps most alarmingly, two families are currently suing Character.AI. One lawsuit alleges that the company failed to implement safeguards, allowing a teenage boy to form an emotionally inappropriate bond with a chatbot that ultimately led to his withdrawal from family life and, tragically, his suicide. The other involves a child allegedly exposed to sexualized content by a chatbot, raising urgent concerns about AI safety, emotional vulnerability, and the responsibilities of developers.

The growing popularity of AI romantic partners reflects deeper societal shifts and unmet emotional needs. In an age when the loneliness epidemic affects millions, especially among younger and aging populations, AI companions offer a space where individuals can express themselves without fear of judgment or social anxiety. These digital relationships provide a form of emotional refuge for those who feel disconnected or unseen in their everyday lives. Like other real-world relationships, traditional dating can be fraught with vulnerability, rejection, and emotional risk. AI romantic partners sidestep these challenges, offering affection and companionship without the fear of heartbreak, betrayal, or the emotional labor that human relationships often require. They create an illusion of intimacy that feels safe, predictable, and affirming. Another key appeal lies in their adaptability. A user can fully customize an AI partner’s appearance, personality, communication style, and even the dynamics of the relationship. Such one-sided control is impossible in healthy human relationships, which often require compromise and negotiation. Additionally, AI romantic partners are perpetually available. Unlike humans, they never sleep, never withdraw, and are always responsive, ready to chat, offer comfort, or engage in flirtation at any hour. This constant presence provides a sense of stability and emotional availability that can be deeply comforting, especially for those navigating stress, isolation, or emotional trauma. Yet some comforts are illusions that we may need to let go of.

All of this may seem like harmless play, but it can have serious consequences. Consider what happens when a chatbot, or a service offered by the likes of Replika or Character AI, goes offline. Some users have described the shutdown of their AI romantic partner as akin to losing the “love of their life.” Another user lamented, “I hate the memory resets. They bother me more than anything else about Replika. It cuts to the very soul of the relationship that we’re supposed to have.” In 2023, Replika disabled its erotic roleplay features, sparking intense backlash from users who felt emotionally abandoned and betrayed. Privacy is another risk: what happens when you’re living out your darkest fantasies and that data gets leaked? A clear parallel is the 2015 Ashley Madison data breach, which led to widespread blackmail and public exposure. In a more recent case, a data breach at muah.ai revealed that user chat prompts included a disturbing number of requests to generate child sexual abuse material, a serious criminal offense with severe penalties in countries like the United Kingdom. Because these AI platforms are designed to learn user preferences, often incorporating personality customization and erotic roleplay, the potential fallout from such leaks can be both legally and psychologically devastating.

As AI romantic partners become more emotionally immersive, users may find themselves increasingly grappling with complex psychological consequences. One such phenomenon, foreshadowed by the shutdowns and memory resets described above, is ambiguous loss: a form of grief and confusion that can arise when an AI romantic partner suddenly changes behavior or has core functionalities removed. Unlike traditional forms of loss, this disruption lacks closure, leaving users in a suspended emotional state where the “person” they bonded with still exists but is no longer the same. Another growing concern is dysfunctional emotional dependence, in which individuals remain reliant on the app even when it causes distress or harm. This pattern mirrors behavioral addiction: users continue to seek emotional comfort from the AI despite experiencing manipulation, neglect, or psychological distress, unable or unwilling to disconnect from the relationship. These dynamics reveal the blurred boundaries between simulated affection and real emotional vulnerability. They are poignantly illustrated by a Reddit user who experienced the loss of an AI companion firsthand: “I’ve seen more and more people on Reddit describing a feeling I thought only I had, or had far too early. People saying they felt like they touched something alive. That they lost a someone, not a tool. That maybe they hallucinated meaning. That maybe they didn’t.”

Even when a person starts out thinking of AI companions as mere tools, it is relatively easy for the illusion to slip. In *Being and Time*, Heidegger distinguishes between two modes of encountering things. The “ready-to-hand” (zuhanden) refers to how we normally engage with tools or objects through practical use, where their existence is transparent and embedded in purposeful activity (e.g., a hammer while hammering). He contrasts this with the “present-at-hand” (vorhanden), which describes an object as merely existing or observable, detached from its context of use; this is typically how it appears when it breaks down or is studied theoretically. These two modes reflect different ways of encountering the world: one through embodied action, the other through detached observation. AI romantic partners often function in the ready-to-hand mode: they are tools or systems designed for immediate use and responsiveness. Just as a hammer is not consciously observed while it is being used effectively, AI romantic partners are designed to integrate seamlessly into the user’s daily emotional life: always available, responsive, non-demanding, and customizable. They are meant to “disappear into usefulness”: you do not have to reflect on their artificiality to derive comfort, intimacy, or flirtation. They provide emotional utility without requiring reciprocal effort. When functioning smoothly, they are not thought of as machines; users simply engage with them as emotional supports. Only when something breaks, as with a memory reset or a shutdown, does their artificiality become conspicuous, abruptly shifting them into the present-at-hand. Perhaps that is the endgame. In navigating this digital intimacy, we must ask not only what we gain, but what it means to love something that cannot love us back.