Amaral and Neves in Nature:

Research articles in the life sciences are more ambitious than ever. The amount of data in high-impact journals has doubled over 20 years², and basic-science papers are increasingly expected to include evidence of how results will translate to clinical applications. An article in a journal such as Nature thus ends up representing many years of work by several people. Still, that's no guarantee of replicability. The Reproducibility Project: Cancer Biology has so far managed to replicate the main findings in only 5 of 17 highly cited articles³, and a replication of 21 social-sciences articles in Science and Nature had a success rate of between 57 and 67%⁴.

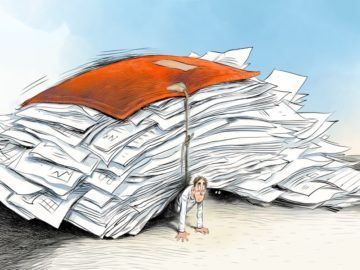

Many calls have been made to improve this scenario. Proposed measures include increasing sample sizes, preregistering protocols and using stricter statistical analyses. Another proposal is to introduce heterogeneity in methods and models to evaluate robustness — for instance, using more than one way to suppress gene expression across a variety of cell lines or rodent strains. In our work on the initiative, we have come to appreciate the amount of effort involved in following these proposals for a single experiment, let alone for an entire paper. Even in a simple RT-PCR experiment, there are dozens of steps in which methods can vary, as well as a breadth of controls to assess the purity, integrity and specificity of materials. Specifying all of these steps in advance represents an exhaustive and sometimes futile process, because protocols inevitably have to be adapted along the way. Recording the entire method in an auditable way generates spreadsheets with hundreds of rows for every experiment. We do think that the effort will pay off in terms of reproducibility. But if every paper in discovery science is to adopt this mindset, a typical high-profile article might easily take an entire decade of work, as well as a huge budget. This got us thinking about other, more efficient ways to arrive at reliable science.

More here.