by Ashutosh Jogalekar

How do we regulate a revolutionary new technology with great potential for harm and good? A 380-year-old polemic provides guidance.

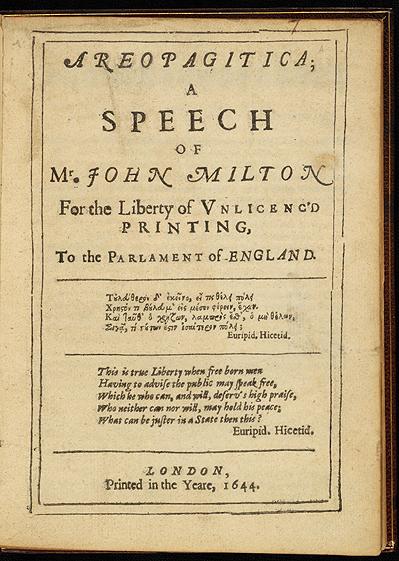

In 1644, John Milton sat down to give a speech to the English parliament arguing in favor of the unlicensed printing of books and against a proposed bill to restrict their contents. Published as “Areopagitica”, Milton’s speech became one of the most brilliant defenses of free expression.

Milton rightly recognized the great potential books had and the dangers of smothering that potential before they were published. He did not mince words:

“For books are not absolutely dead things, but …do preserve as in a vial the purest efficacy and extraction of that living intellect that bred them. I know they are as lively, and as vigorously productive, as those fabulous Dragon’s teeth; and being sown up and down, may chance to spring up armed men….Yet on the other hand unless wariness be used, as good almost kill a Man as kill a good Book; who kills a Man kills a reasonable creature, God’s Image; but he who destroys a good Book, kills reason itself, kills the Image of God, as it were in the eye. Many a man lives a burden to the Earth; but a good Book is the precious life-blood of a master-spirit, embalmed and treasured up on purpose to a life beyond life.”

Apart from stifling free expression, the fundamental problem of regulation as Milton presciently recognized is that the good effects of any technology cannot be cleanly separated from the bad effects; every technology is what we call dual-use. Referring back all the way to Genesis and original sin, Milton said:

“Good and evil we know in the field of this world grow up together almost inseparably; and the knowledge of good is so involved and interwoven with the knowledge of evil, and in so many cunning resemblances hardly to be discerned, that those confused seeds which were imposed upon Psyche as an incessant labour to cull out, and sort asunder, were not intermixed. It was from out the rind of one apple tasted, that the knowledge of good and evil, as two twins cleaving together, leaped forth into the world.”

In important ways, “Areopagitica” is a blueprint for controlling potentially destructive modern technologies. Freeman Dyson applied the argument to propose commonsense legislation in the field of recombinant DNA technology. And today, I think, the argument applies cogently to AI.

AI is such a new technology that its benefits and harms are largely unknown and hard to distinguish from each other. In some cases the distinction is clear. For instance, image recognition can be used for all kinds of useful applications ranging from weather assessment to cancer cell analysis, but it can be and is used for surveillance. In that case, it is not possible to separate out the good from the bad even when we know what they are. But more importantly, as the technology of image recognition AI demonstrates, it is impossible to know what exactly AI will be used for unless there’s an opportunity to see some real-world applications of it. Restricting AI before these applications are known will almost certainly ensure that the good applications are stamped out.

It is in the context of Areopagitica and the inherent difficulty of regulating a technology before its potential is known that I find myself concerned about some of the new government regulation being proposed for AI, especially California Bill SB-1047, which has already passed the state Senate and has made its way to the Assembly, with a proposed decision date at the end of this month.

The bill proposes commonsense measures for AI, such as more transparent cost-accounting and documentation. But it also imposes what seem like arbitrary restrictions on AI models. For instance, it would require regulation and paperwork for models which cost $100 million or more per training run. While this regulation will exempt companies which run cheaper models, the problem in fact runs the other way: nothing stops cheaper models from being used for nefarious purposes.

Let’s take a concrete example: in the field of chemical synthesis, AI models are increasingly used to do what is called retrosynthesis, which is to virtually break down a complex molecule into its constituent building blocks and raw materials (as a simple example, a breakdown of sodium chloride into sodium and chlorine would be retrosynthesis). One can use retrosynthesis algorithms to find out the cheapest or the most environmentally friendly route to a target molecule like a drug, a pesticide or an energy material. And run in reverse, you can use the algorithm for forward planning, predicting based on building blocks what the resulting target molecule would look like. But nothing stops the algorithm from doing the same analysis on a nerve gas or a paralytic or an explosive; it’s the same science and the same code. Importantly, much of this analysis is now available in the form of laptop computer software which enables the models to be trained on datasets of millions of data points: small potatoes in the world of AI. Almost none of these models cost anywhere close to $100 million, which puts their use in the hands of small businesses, graduate students and – if and when they choose to use them – malicious state and non-state actors.
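To make the idea of retrosynthesis concrete, here is a deliberately tiny sketch in Python. It is not how real retrosynthesis software works: the `DISCONNECTIONS` table and the `retrosynthesize` function are hypothetical names invented for illustration, and the table holds only a few textbook, benign examples. The sketch simply breaks a target down recursively until only starting materials remain.

```python
# Hypothetical lookup table: each product maps to the precursors it can be made from.
# Real tools learn or encode many thousands of such rules; this toy hard-codes three.
DISCONNECTIONS = {
    "sodium chloride": ["sodium", "chlorine"],
    "aspirin": ["salicylic acid", "acetic anhydride"],
    "salicylic acid": ["phenol", "carbon dioxide"],
}


def retrosynthesize(target, disconnections=DISCONNECTIONS):
    """Recursively break a target into building blocks that have no further disconnection."""
    if target not in disconnections:
        # Nothing simpler is known, so treat this as a raw material.
        return [target]
    blocks = []
    for precursor in disconnections[target]:
        blocks.extend(retrosynthesize(precursor, disconnections))
    return blocks


print(retrosynthesize("sodium chloride"))  # ['sodium', 'chlorine']
print(retrosynthesize("aspirin"))  # ['phenol', 'carbon dioxide', 'acetic anhydride']
```

Nothing in the function knows or cares what the target is; whatever is in the table gets decomposed by the same few lines. That indifference is the dual-use problem in miniature.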

Thus, restricting AI regulation to expensive models might exempt smaller actors, but it’s precisely that fact that would enable these small actors to use the technology to bad ends. On the other hand, critics are also right that it would effectively price out the good small actors since they would not be able to afford the legal paperwork that the bigger corporations can. The arbitrary cap of $100 million therefore does not seem to address the root of the problem. The same issue applies to another restriction, which is also part of the European AI regulation: a computing threshold of 10^26 FLOPs. Using the same example of the AI retrosynthesis models, it is easy to argue that such models can be run with far less computing power and would still produce useful results.
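A rough back-of-the-envelope calculation shows how far below such a threshold a small model can sit. The sketch below reads the threshold as 10^26 total floating-point operations and uses the common rule of thumb that training takes about 6 operations per parameter per training token. The model size and token count are assumptions chosen purely for illustration, not measurements of any real system.

```python
# Compute threshold discussed in the text, read here as total training operations.
THRESHOLD_FLOPS = 1e26


def training_flops(n_parameters, n_tokens):
    """Rule-of-thumb estimate: roughly 6 operations per parameter per training token."""
    return 6 * n_parameters * n_tokens


# Assumed small model: 100 million parameters, trained on 5 billion tokens in total
# (for example, millions of data points seen over several passes).
small_model = training_flops(n_parameters=1e8, n_tokens=5e9)
ratio = THRESHOLD_FLOPS / small_model

print(f"Estimated training compute: {small_model:.1e} operations")
print(f"Threshold is about {ratio:.1e} times larger")
```

Under these assumptions the small model needs on the order of 10^18 operations, tens of millions of times less than the threshold, so a compute cap of this size would never touch it.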

What then is the correct way to regulate AI technology? Quite apart from the details, one thing that is clear is that we should be able to experiment a bit, run laboratory-scale models and at least try to probe the boundaries of potential risks before we decide to stifle this or that model or rein in computing power. Once again Milton echoes such sentiments. As a 17th century intellectual it would have been a long shot for him to call for the completely free dissemination of knowledge; he must have been well aware of the blood that had been shed in religious conflicts in Europe during his time. Instead, he proposed that there could be some checks and restrictions on books, but only after they had been published:

“If then the Order shall not be vain and frustrate, behold a new labour, Lords and Commons, ye must repeal and proscribe all scandalous and unlicensed books already printed and divulged; after ye have drawn them up into a list, that all may know which are condemned, and which not.”

Thus, Milton was arguing that books should not be stifled at the time of their creation; instead, they should be stifled at the time of their use if the censors saw a need. The creation vs use distinction is a sensible one when thinking about regulating AI as well. But even that distinction doesn’t completely address the issue, since the uses of AI technology are myriad, and most of them are going to be beneficial and intrinsically dual-use. Even regulating the uses of AI thus would entail interfering in many aspects of AI development and deployment. And what about the legal and commercial paperwork, the extensive regulatory framework and the army of bureaucrats that would be needed to enforce this legislation? The problem with legislation is that it is easy for it to overstep boundaries, to be on a slippery slope and gradually elbow its way into all kinds of things for which it wasn’t originally intended, exceeding its original mandate. Milton shrewdly recognized this overreach when he asked what else besides printing might be up for regulation:

“If we think to regulate printing, thereby to rectify manners, we must regulate all recreations and pastimes, all that is delightful to man. No music must be heard, no song be set or sung, but what is grave and Doric. There must be licensing dancers, that no gesture, motion, or deportment be taught our youth but what by their allowance shall be thought honest; for such Plato was provided of; it will ask more than the work of twenty licensers to examine all the lutes, the violins, and the guitars in every house; they must not be suffered to prattle as they do, but must be licensed what they may say. And who shall silence all the airs and madrigals that whisper softness in chambers? The windows also, and the balconies must be thought on; there are shrewd books, with dangerous frontispieces, set to sale; who shall prohibit them, shall twenty licensers?”

This passage shows that not only was John Milton a great writer and polemicist, but he also had a fine sense of humor. Areopagitica shows us that if we are to confront the problem of AI legislation, we must do it not just with good sense but with a recognition of the absurdities which too much regulation may bring.

The proponents of AI regulation who fear the many problems AI might create are well-meaning, but they are unduly adhering to the Precautionary Principle. The Precautionary Principle says that it’s sensible to regulate something when its risks are not known. I would like to suggest that we replace the Precautionary Principle with a principle I call the Adventure Principle. The Adventure Principle says that we should embrace risks rather than run away from them because of the benefits which exploration brings. Without the Adventure Principle, Columbus, Cook, Heyerdahl and Armstrong would never have set sail into the great unknown and Edison, Jobs, Gates and Musk would never have embarked on big technological projects. Just as with AI, these explorers faced a significant risk of death and destruction, but they understood that with immense risks come immense benefits, and by the rational light of science and calculation, they thought there was a good chance that the risks could be managed. They were right.

Ultimately there is no foolproof “pre-release” legislation or restriction that would purely stop the bad use of models while still enabling their good use. Milton’s Areopagitica does not tell us what the right legislation for regulating AI would look like, although it provides hints based on regulation of use rather than creation. But it makes a resounding case for the problems that such legislation may create. Regulating AI before we have a chance to see what it can do would be like imprisoning a child before he grows up into a young man. Perhaps a better approach would be the one Faraday adopted when Gladstone purportedly asked him what the use of electricity was: “Someday you may tax it”, was Faraday’s response.

Some say that the potential risks from AI are too great to allow for such a liberal approach. But the potential risks from almost any groundbreaking technology developed in the last few hundred years – printing, electricity, fossil fuels, automobiles, nuclear energy, gene editing – are no different. The premature regulation of AI would prevent us from unleashing its potential to confront our most pressing challenges. When humanity is then grappling with its last-ditch efforts to prevent its own extinction because of known problems, a recognition of the irony of smothering AI because of a fear of unknown problems would come too late to save us.