Ewen Callaway in Nature:

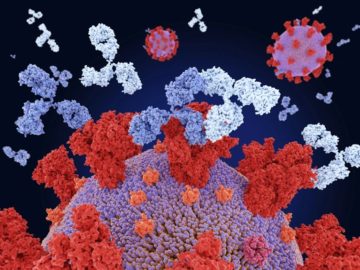

Antibodies are among the immune system’s key weapons against infection. The proteins have become a darling of the biotechnology industry, in part because they can be engineered to attach to almost any protein imaginable to manipulate its activity. But generating antibodies with useful properties and improving on these involves “a lot of brute-force screening”, says Brian Hie, a computational biologist at Stanford who also co-led the study.

To see whether generative AI tools could cut out some of the grunt work, Hie, Kim and their colleagues used neural networks called protein language models. These are similar to the ‘large language models’ that form the basis of tools such as ChatGPT. But instead of being fed vast volumes of text, protein language models are trained on tens of millions of protein sequences. Other researchers have used such models to design completely new proteins, and to help predict the structure of proteins with high accuracy. Hie’s team used a protein language model (developed by researchers at Meta AI, a part of tech giant Meta based in New York City) to suggest a small number of mutations for antibodies. The model was trained on only a few thousand antibody sequences, out of the nearly 100 million protein sequences it learned from. Despite this, a surprisingly high proportion of the models’ suggestions boosted the ability of antibodies against SARS-CoV-2, ebolavirus and influenza to bind to their targets.
