Erik Hoel in his Substack newsletter, The Intrinsic Perspective:

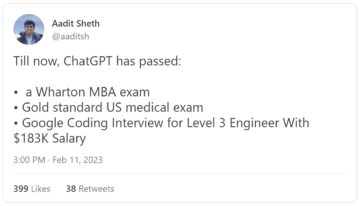

Now a third threat to humanity looms, one presciently predicted mostly by chain-smoking sci-fi writers: that of artificial general intelligence (AGI). AGI is only being worked on at a handful of companies, with the goal of creating digital agents capable of reasoning much like a human, and these models are already beating the average person on batteries of reasoning tests that include things like SAT questions, as well as regularly passing graduate exams.

It’s not a matter of debate anymore: AGI is here, even if it is in an extremely beta form with all sorts of caveats and limitations.

Due to the breakneck progress, the very people pushing it forward are no longer sanguine about the future of humanity as a species. Like Sam Altman, CEO of OpenAI (creators of ChatGPT), who said:

AI will probably most likely lead to the end of the world, but in the meantime, there’ll be great companies.

So it’ll kill us, but, you know, stock prices will soar. Which begs the question, how have tech companies handled this responsibility so far? Miserably. Because it’s obvious that recent AIs are not safe.
