Scott Alexander in Astral Codex Ten:

This is a point I keep seeing people miss in the debate about social media.

Moderation is the normal business activity of ensuring that your customers like using your product. If a customer doesn’t want to receive harassing messages, or to be exposed to disinformation, then a business can provide them the service of a harassment-and-disinformation-free platform.

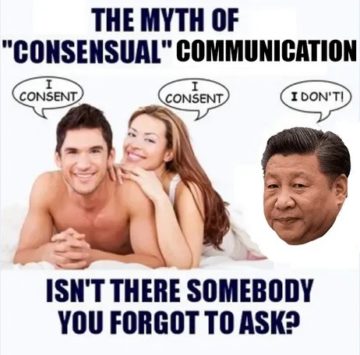

Censorship is the abnormal activity of ensuring that people in power approve of the information on your platform, regardless of what your customers want. If the sender wants to send a message and the receiver wants to receive it, but some third party bans the exchange of information, that’s censorship.

The racket works by pretending these are the same imperative. “Well, lots of people will be unhappy if they see offensive content, so in order to keep the platform safe for those people, we’ve got to remove it for everybody.”

This is not true at all. A minimum viable product for moderation without censorship is for a platform to do exactly the same thing they’re doing now – remove all the same posts, ban all the same accounts – but have an opt-in setting, “see banned posts”. If you personally choose to see harassing and offensive content, you can toggle that setting, and everything bad will reappear.

More here.