by David Kordahl

Every October, I try to carve out a little time to enjoy Nobel Season. This past week marked the climax of this year’s iteration, with the winners of the various Nobel Prizes announced on successive days of the week. I had fun following the picks, and learning a bit about new things in the fields I don’t follow closely, which, frankly, is most of them.

Physics, however, was a different story. The physics Nobelists this year were familiar to me and most other physicists, seemingly obvious choices, if not exactly household names. Alain Aspect, John Clauser, and Anton Zeilinger have become standard characters in the physics lore of the past few decades. Their stories have even bled out into the wider culture. In Les Particules élémentaires, the 1998 novel by Michel Houellebecq, Aspect’s experiments are positioned as part of a “metaphysical mutation” comparable only to the birth of Christianity and the rise of science itself.

Well, as one might say of many grand claims…sounds important, if true. Here, I’ll be expressing my doubts about a few articles of common faith.

A Brief History of Quantum Entanglement

The lineage of experiments involving quantum entanglement has been described in many popular science books. I’m fond of an old hippieish one, Quantum Reality: Beyond the New Physics, by Nick Herbert. Anton Zeilinger has himself written one, Dance of the Photons: From Einstein to Quantum Teleportation, which I suppose will now get a sales bump.

What entanglement experiments have in common is that they all follow up a question raised by Einstein, along with his colleagues Boris Podolsky and Nathan Rosen, regarding the completeness of quantum mechanics. The “EPR Paradox” (so named after the three men) proposed a thought experiment involving two particles in an entangled state, such that measurements on one help to predict measurements on the other.

According to quantum mechanics, the measurement on one particle casts the other into a determinate quantum state, even if the particles are spatially separated from each other. This seemed to imply a tension with the theory of relativity, with the possibility that signals were being shared faster than light. Even more importantly, it seemed to indicate that quantum mechanics was incomplete, and needed to be augmented.

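Nearly three decades later, in 1964, the Irish physicist John Stewart Bell (1928-1990) became interested in these questions. Bell didn’t introduce any new physics. “Bell’s Theorem” shows that if measurement outcomes were fixed in advance by local “hidden variables,” the correlations between distant measurements would have to obey certain limits, now called Bell inequalities, while standard old quantum mechanics predicts that entangled particles can violate them. This recast the old EPR paradox in a way that suggested experimental tests, and it showed that quantum probabilities could not simply be explained away as ignorance of pre-set values.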
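To make Bell’s idea concrete, here is a small Python sketch of the CHSH inequality, the version of Bell’s result that Clauser helped formulate in 1969 and that many photon experiments test. It assumes each measurement gives +1 or -1, and it uses the textbook quantum correlation E = cos 2(α − β) for polarizers at angles α and β acting on the entangled state (|HH⟩ + |VV⟩)/√2. The function names and the particular angles are illustrative choices, not taken from the laureates’ papers. If each side’s answers for both settings are fixed in advance, no assignment pushes the combination S past 2 (a local hidden-variable model is just a random mixture of such assignments, so it can’t do better), while quantum mechanics reaches 2√2 ≈ 2.83.

```python
import itertools
import math


def local_chsh_max():
    """Largest |S| when each side's +1/-1 answers for both settings are fixed in advance."""
    best = 0
    for a0, a1, b0, b1 in itertools.product((+1, -1), repeat=4):
        s = a0 * b0 - a0 * b1 + a1 * b0 + a1 * b1
        best = max(best, abs(s))
    return best


def photon_correlation(alpha_deg, beta_deg):
    """Quantum prediction E for polarizers at alpha, beta on (|HH> + |VV>)/sqrt(2)."""
    return math.cos(2 * math.radians(alpha_deg - beta_deg))


def quantum_chsh(a0=0.0, a1=45.0, b0=22.5, b1=67.5):
    """S = E(a0,b0) - E(a0,b1) + E(a1,b0) + E(a1,b1), using the quantum correlations."""
    E = photon_correlation
    return E(a0, b0) - E(a0, b1) + E(a1, b0) + E(a1, b1)


if __name__ == "__main__":
    print("local bound:", local_chsh_max())           # 2
    print("quantum value:", round(quantum_chsh(), 3))  # 2.828
```

The gap between those two numbers is, in essence, what the experiments set out to measure.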

The 2022 Nobel citation celebrates Aspect, Clauser, and Zeilinger “for experiments with entangled photons, establishing the violation of Bell inequalities and pioneering quantum information science.” Each of these words could spark arguments. But let’s stick to one word: entanglement.

What does it imply—or, for an easier question, what doesn’t it?

Entanglement Doesn’t Imply Action at a Distance

Einstein sardonically called entanglement “spooky action at a distance,” which is a dig that only makes sense if you know some physics. The original “action at a distance” theory was Newton’s version of gravity, which simply posited that every mass in the universe pulls every other mass in the universe toward itself, with a strength proportional to the product of the mass doing the pulling and the mass being pulled, and inversely proportional to the squared distance between them. Why did this work? Newton wrote, “hypotheses non fingo”—“I frame no hypotheses.”
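In symbols, with m_1 the mass doing the pulling, m_2 the mass being pulled, r the distance between them, and G a constant of proportionality (Newton’s gravitational constant, left implicit in the description above):

$$
F = \frac{G\, m_1 m_2}{r^2}
$$

Nothing in the formula refers to anything between the two masses; the force depends only on their present separation, which is exactly what makes it “action at a distance.”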

But later physicists couldn’t be stopped from hypothesizing, and the nineteenth century saw the rise of field theory, which posited that the effects formerly interpreted as inexplicable long-distance communiqués could better be understood by introducing local entities hanging out in space, that is, by introducing fields. Electric and magnetic forces were thought to act not across vast distances, but locally, through electric and magnetic fields already present at the locations where the forces were being applied. These fields were eventually understood as dynamical entities, both reacting to and causing changes in the motions of charged particles.

The great unification of nineteenth-century physics came when James Clerk Maxwell showed that light could be understood as coherent oscillations of the electric and magnetic fields. Considering the symmetries of Maxwell’s equations would lead Einstein, a few decades later, to propose relativity theory, which described a world of local physics, where action at a distance had been banished entirely.

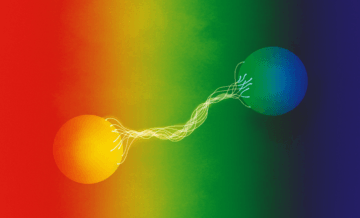

The illustration above, from the “Popular Science Background” article on the Nobel website, subtly suggests that quantum entanglement contradicts the earlier picture of locality in physics—i.e., that it contradicts our picture of things only interacting with other things that occupy the same position in space. The ghostly strands might suggest to us a strange neo-Newtonian revival, overthrowing the local universe of Einstein and Maxwell.

Of course, there are many physicists (and at least one novelist) who do believe this, who believe the “non-locality” of entangled quantum states implies something deep about physics. But I don’t, and I’m not alone.

The important thing to understand about entangled particles is how they became entangled. Particles become entangled when they interact. When we see particles on opposite sides of the room whose measurements are correlated, it doesn’t necessarily mean that there is some spooky force between them that keeps them connected. The particles’ correlations are a residue of their past contact, not evidence of their continued interaction.

There are classical analogues to this. For instance, if a bomb rests quietly in the middle of a parking lot, its Newtonian momentum (the product of its mass and velocity, p = m v) is zero, because it isn’t moving. Momentum is conserved, so after the bomb explodes, the sum of all the momenta of the outgoing shards is also zero. Does this imply that the shards are spookily connected afterward? No, but it does suggest they once were linked.
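Here is a toy version of the bomb as a minimal Python sketch. It assumes a one-dimensional explosion into just two fragments with randomly chosen masses and speeds (all numbers hypothetical). Momentum conservation fixes the second fragment’s velocity, so measuring one fragment tells you the other’s momentum exactly, even though nothing connects them after the blast.

```python
import random


def explode(total_mass=10.0, rng=None):
    """Split a bomb at rest into two fragments moving along a line (toy 1D model).

    Returns ((m1, v1), (m2, v2)). The first fragment's mass and velocity are random;
    conservation of momentum (the total stays zero) then fixes the second velocity.
    """
    if rng is None:
        rng = random.Random()
    m1 = rng.uniform(1.0, total_mass - 1.0)
    m2 = total_mass - m1
    v1 = rng.uniform(-50.0, 50.0)
    v2 = -m1 * v1 / m2  # enforces m1*v1 + m2*v2 = 0
    return (m1, v1), (m2, v2)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(3):
        (m1, v1), (m2, v2) = explode(rng=rng)
        p1, p2 = m1 * v1, m2 * v2
        # Each fragment's momentum varies from blast to blast, but the two always cancel.
        print(f"p1 = {p1:+8.2f}   p2 = {p2:+8.2f}   sum = {p1 + p2:+.1e}")
```

The correlation between p1 and p2 is perfect on every run, and it comes entirely from the shared starting condition.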

I’ve lifted this exploding-bomb analogy from John P. Ralston’s book, How to Understand Quantum Mechanics, though Ralston himself uses an exploding lumber mill. Experts may argue that analogies like this don’t get to the heart of the matter.

To those experts, however, I recommend the analysis of “Information Flow in Entangled Quantum Systems” by David Deutsch and Patrick Hayden, who treat the EPR setup with more formality and less invective than Ralston. Deutsch and Hayden examine every step of the EPR setup and find that the information is always stored locally and extracted locally. That certain ways of talking about the situation make it seem as though there are non-local forces is an accident of language, not a fact of science. After all, correlations can arise from causal contact that no longer persists.

Entanglement Doesn’t Imply Faster-Than-Light Messaging

Once you take up the interpretation that particles are entangled due to their past interaction and not anything more mysterious than that, it seems obvious that entanglement won’t give us any faster-than-light signaling schemes. But this wasn’t at all obvious at first. Schemes were proposed.

David Kaiser, a physicist and historian of science who has collaborated with Anton Zeilinger, one of this year’s laureates, has written a wonderful book about the early history of Bell’s theorem. How the Hippies Saved Physics: Science, Counterculture, and the Quantum Revival describes how quantum ideas bled out of the universities and into the wider world. Aspect, Clauser, and Zeilinger all play a part in this story, but it is also populated by some wilder characters, including the physicist Nick Herbert, author of the aforementioned popular science text, Quantum Reality.

Herbert was intrigued by the possibility that entanglement could be used to send information faster than light. This wasn’t necessarily a crazy idea.

Suppose that you have a friend who tells you she is traveling either to Italy or Finland for vacation—she hasn’t decided, but before she gets on the plane she’ll send you a postcard. If we were to assign a physics description to your friend, we might be tempted to describe her as being in a superposition of Italy and Finland position states. But when you read her postcard, information about where she is reaches you instantaneously, faster than it would take for a message to reach you from Italy or Finland. There’s something not strictly physical about this information transfer.

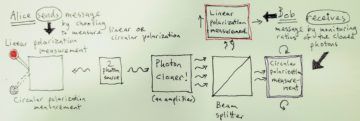

This not-quite-physical loophole inspired Herbert to propose his FLASH system, the “First Laser-Amplified Superluminal Hookup.” Herbert imagined that by sending out a steady stream of entangled photons from a central location, two far-off friends could communicate instantaneously. One side would encode a message in its choice of how to measure the photons, and the other side would use a laser amplifier to make many copies of each incoming photon, measuring the copies to work out which choice had been made and so getting around quantum probability by sheer repetition.

The controversial piece of this setup—an error which was far from obvious to many early readers—was the supposition that a general quantum state could be perfectly amplified. It turns out that this isn’t possible, a result that is now known as the no-cloning theorem. Though the correlations exist in some sense outside of time, this unfortunately doesn’t give us a way to send messages faster than light. Too bad, really.
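The linearity argument behind the no-cloning theorem (proved in 1982 by Wootters and Zurek, and independently by Dieks) can be checked in a few lines of Python. The sketch assumes a hypothetical copier built from a CNOT gate acting on the input qubit and a blank qubit. It copies |0⟩ and |1⟩ perfectly, and because quantum evolution is linear, any device that copies those two states must treat a superposition the same way. Feed it |+⟩ = (|0⟩ + |1⟩)/√2 and the output is the entangled state (|00⟩ + |11⟩)/√2, not two independent copies |+⟩|+⟩.

```python
import math


def kron(u, v):
    """Tensor product of two one-qubit states (basis order |00>, |01>, |10>, |11>)."""
    return [x * y for x in u for y in v]


def cnot(state):
    """Flip the second qubit when the first is 1: swaps the |10> and |11> amplitudes."""
    return [state[0], state[1], state[3], state[2]]


def copier(qubit):
    """Hypothetical 'copy machine': CNOT on (input qubit, blank qubit in |0>).

    It copies |0> and |1> exactly; on superpositions linearity forces
    a|0> + b|1>  ->  a|00> + b|11>.
    """
    return cnot(kron(qubit, [1.0, 0.0]))


def overlap(u, v):
    """Inner product <u|v> for real amplitudes."""
    return sum(x * y for x, y in zip(u, v))


ZERO, ONE = [1.0, 0.0], [0.0, 1.0]
PLUS = [1 / math.sqrt(2), 1 / math.sqrt(2)]

if __name__ == "__main__":
    for name, q in [("|0>", ZERO), ("|1>", ONE), ("|+>", PLUS)]:
        ideal = kron(q, q)  # what a perfect cloner would output
        fidelity = overlap(ideal, copier(q)) ** 2
        print(f"{name}: fidelity with two perfect copies = {fidelity:.3f}")
```

Running it prints a fidelity of 1.000 for |0⟩ and |1⟩ but 0.500 for |+⟩. A FLASH-style amplifier would need to succeed on every input state at once, and linearity rules that out.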

I should mention, for fairness, that some versions of quantum theory do include faster-than-light influences. A famous example is the hidden-variable theory proposed by Louis de Broglie in the 1920s and independently revived by David Bohm in the 1950s, which claims that quantum mechanics is secretly deterministic, despite its probabilistic outcomes. Even so, de Broglie-Bohm enthusiasts don’t have any way to send FTL signals, either.

Entanglement Doesn’t Imply Randomness

Now, readers who disagree with the point of view I’ve been putting forward here (roughly, that quantum entanglement is demonstrably real, but isn’t that philosophically profound) may quite reasonably demur that I haven’t fully grappled with how probability enters into quantum interpretation.

Which is true. Quantum measurement will have to wait for another essay. The problem of quantum measurement involves understanding how observations of quantum systems manage to pick out just one outcome, when a quantum state seems to be divvied among various possibilities. But in the applications of entanglement that researchers are studying most intently, quantum measurement matters less than quantum dynamics.

When, as an undergraduate, I first heard about “quantum computers,” I presumed that such devices might be ones whose outcomes were chancy. Didn’t the modern physics homework problems always ask me to compute probabilities? It seemed logical to suppose that a quantum computer would have some randomness in its operation. But how would you get a result out of that? Maybe you ran the calculation a bunch of times. I wasn’t sure.

This, it turns out, was mostly wrong. The evolution of a quantum computer that acts according to theory is entirely deterministic, and well-designed algorithms steer that evolution so that the final measurement yields the answer with certainty, or at least with high probability. Quantum measurements often involve probabilities, it’s true, but when quantum bits (“qubits”) are prepared in a “0” or a “1” state, a measurement should give you back exactly the state you just prepared.
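To see how a computation can be deterministic even though it passes through superpositions, here is a minimal state-vector simulation in Python of Deutsch’s algorithm, a standard textbook example that the essay doesn’t itself discuss. It assumes ideal, noise-free gates, and the function names are illustrative. The algorithm asks whether a one-bit function f is constant or balanced. After a single call to f, measuring the first qubit gives 0 for a constant f and 1 for a balanced f, with probability 1 up to floating-point rounding.

```python
import math

INV_SQRT2 = 1 / math.sqrt(2)


def hadamard(state, qubit):
    """Apply a Hadamard gate to one qubit of a 2-qubit state vector.

    Basis index = 2*q0 + q1, so qubit 0 is the high bit.
    H|0> = (|0> + |1>)/sqrt(2),  H|1> = (|0> - |1>)/sqrt(2).
    """
    shift = 1 - qubit
    new = [0.0] * 4
    for index, amp in enumerate(state):
        partner = index ^ (1 << shift)  # same basis state with this qubit flipped
        if ((index >> shift) & 1) == 0:
            new[index] += INV_SQRT2 * amp    # |0> -> |0> component
            new[partner] += INV_SQRT2 * amp  # |0> -> |1> component
        else:
            new[partner] += INV_SQRT2 * amp  # |1> -> |0> component
            new[index] -= INV_SQRT2 * amp    # |1> -> -|1> component
    return new


def oracle(state, f):
    """U_f |x, y> = |x, y XOR f(x)>: the single call to the unknown function f."""
    new = [0.0] * 4
    for index, amp in enumerate(state):
        x, y = index >> 1, index & 1
        new[2 * x + (y ^ f(x))] += amp
    return new


def deutsch(f):
    """Return the probability that the first qubit is measured as 1."""
    state = [0.0, 1.0, 0.0, 0.0]             # start in |0>|1>
    state = hadamard(hadamard(state, 0), 1)  # superposition over both inputs
    state = oracle(state, f)                 # one query to f
    state = hadamard(state, 0)               # interfere the two branches
    return state[2] ** 2 + state[3] ** 2     # total weight on q0 = 1


if __name__ == "__main__":
    functions = {
        "constant 0": lambda x: 0,
        "constant 1": lambda x: 1,
        "identity (balanced)": lambda x: x,
        "negation (balanced)": lambda x: 1 - x,
    }
    for name, f in functions.items():
        print(f"{name:>20}: P(first qubit = 1) = {deutsch(f):.3f}")
```

The constant functions give 0.000 and the balanced ones 1.000: the randomness one might expect from the intermediate superposition cancels out by interference.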

The reason quantum computers are tricky to implement comes down to the various possibilities for entanglement. Once experimental physicists are able to control the interactions between the qubits in a quantum computer with sufficient delicacy, they will be able to do amazing things. But qubits can easily become entangled with their noisy, thermal surroundings, which scrambles their delicate correlations. As a result, extensive work has been done on “quantum error correction,” which involves encoding each qubit needed for quantum information processing in several physical qubits.
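The simplest example of the idea is the three-qubit bit-flip code, sketched below as a Python state-vector simulation. It is a textbook toy, not how any particular machine works: it protects against a single bit flip only (practical codes must also handle phase errors), and for brevity it reads the parity “syndrome” straight off the simulated amplitudes, whereas hardware would measure those parities using extra ancilla qubits. The key point is that the parities reveal which qubit flipped without revealing, or disturbing, the encoded amplitudes a and b.

```python
def encode(a, b):
    """Encode a|0> + b|1> as a|000> + b|111> (three-qubit bit-flip code)."""
    state = [0.0] * 8
    state[0b000] = a
    state[0b111] = b
    return state


def flip(state, qubit):
    """Apply a bit-flip (X) to one qubit; qubit 0 is the leftmost bit of the index."""
    mask = 1 << (2 - qubit)
    return [state[i ^ mask] for i in range(8)]


def syndrome(state):
    """Return the parities (q0 XOR q1, q1 XOR q2).

    With at most one bit flip, every basis state carrying amplitude has the same
    parities, so this identifies the error without revealing a or b.
    (Simulation shortcut: hardware would measure these parities with ancillas.)
    """
    for i, amp in enumerate(state):
        if amp != 0:
            q0, q1, q2 = (i >> 2) & 1, (i >> 1) & 1, i & 1
            return (q0 ^ q1, q1 ^ q2)
    raise ValueError("state has no nonzero amplitude")


# Which qubit to flip back for each syndrome (None means no error detected).
CORRECTION = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def correct(state):
    qubit = CORRECTION[syndrome(state)]
    return state if qubit is None else flip(state, qubit)


if __name__ == "__main__":
    logical = encode(0.6, 0.8)  # hypothetical logical state 0.6|0> + 0.8|1>
    for q in range(3):
        damaged = flip(logical, q)
        print(q, syndrome(damaged), correct(damaged) == logical)
```

Each of the three possible single flips produces a distinct syndrome, (1, 0), (1, 1), or (0, 1), and the correction restores the original encoded state exactly. The price is three physical qubits for one qubit of information, which is the overhead the paragraph above alludes to.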

I’m far from an expert in any of this, but I do know enough to assert that this is physics, not magic. Nature keeps track of all sorts of delicate correlations that we normally have no reason to question. What this year’s physics Nobelists did was to lay the first bricks of the long road toward a future where quantum entanglement becomes a tool for technologists, and not just a subject of contemplation for philosophical ditherers like me.