by Charlie Huenemann

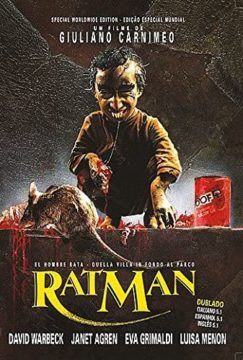

It was announced last week that scientists have integrated neurons from human brains into infant rat brains, resulting in new insights about how our brain cells grow and connect, and some hope of a deeper understanding of neural disorders. Full story here. And while no scientist would admit they are working toward the development of some Rat Man who will escape the lab and wreak havoc on some faraway island or in the subways, it’s impossible not to wonder.

There are some legitimate and difficult ethical questions in this territory, such as whether we should work toward “curing” all conditions labeled as “disorders”, including those conditions that have become woven into people’s lives so thoroughly that they would rather not lose them. Autism, for example, can be a terrible burden in some cases, but in others it is a valued feature of a person’s individuality, part of the core of who they are. It’s difficult to follow the precept of doing no harm as we dig deeper into human natures and find it’s not always obvious what counts as a harm. There is a medical/pharmaceutical mindset that likes to pound down any nail sticking up, and it is running into the fact that some nails prefer to stick up, thank you very much.

But as we leave those problems to more insightful minds, I would like to turn instead to an illegitimate and silly worry, which we shall call “Ewww”:

Ewww = What if my neurons end up in a rat brain? Would I feel its whiskers twitching, feel hungry for garbage, and find fulfillment by running endlessly inside a little wheel?

As I said, it’s not a legitimate worry, but it’s hard for many of us not to feel a tug in its direction, because many of us are entranced by illusions about consciousness.

Here’s where Ewww comes from, I think. Those of us loosely educated in the ways of modern science understand perfectly well that we are just a bunch of neurons. But we can’t quite understand how neurons sending signals to each other can actually be a feeling or a thought, and so we invent a subjective dimension to neurons: some hidden aspect of neural cells where the feelings and thoughts live. The feelings and thoughts don’t show up on MRIs, and they aren’t needed to explain any of the measurements we are able to take, but we have them, after all, so they must exist somewhere: not in objective space, but in subjective space.

And maybe the subjective space travels along with the neuron, as if in a hidden pocket. So if my neuron gets plugged into a rat’s brain, some little smoosh of my feelings and thoughts will go along as well, and there some part of me will be, looking out at the researchers with my beady little eyes.

This is just one case among many in which our “legitimate” beliefs—the ones we’re willing to advertise among our peers—don’t fit together very well, and if someone (like a philosopher) comes along to press us on the poor fit, we are liable to come up with a goofy little theory on the spot which, at a glance, seems to spackle over the cracks, and we hope it’s enough to pass inspection, at least for now. (Ask your co-worker how the WiFi signals that go to your computer know to go to your computer and not theirs, and you will have the opportunity of hearing a goofy little theory being born—unless your co-worker more sensibly replies “hell if I know”.)

No, neurons don’t have hidden glove compartments containing feelings and thoughts. They’re just circuits, of the same basic kind found in any electrical device. No part of the brain magically turns voltage into what-it’s-like-to-be-ness or any other occult property.

What neural circuitry can do, in sufficient quantity and complexity, is create models of its environment, including representations of entities flagged as threats, opportunities, and curiosities, much as a self-driving car does. Brains can also create models of other organisms with brains, meaning a human brain, and a few other kinds, can form models predicting what other beings with brains are likely to believe or do. And, finally, human brains (and the bigger primates may be alone on this one) can model themselves, and thus have theories about who they themselves are and what they themselves are up to.

It’s not all fully understood, of course, but the general outline of a full explanation is clear: our feelings and thoughts are part of the ways we model ourselves, others, and the world, and they don’t reside like nuggets among the dendrites, but instead emerge from higher level judgments about ourselves and the world, and then get talked about in often misleading ways. It’s not easy to rig together a system that can form judgments, let alone talk about them, but we are already developing artificially intelligent systems that are able to semi-autonomously navigate their way through complicated and changing environments.

Do these AIs have feelings? Yes, I would guess they have something along the lines of feelings. We have feelings because we evolved in dangerous environments in which perceiving information as sudden and urgent was beneficial, and a sudden and urgent burst of information, tagged with “highest possible priority”, is indistinguishable from a vivid, powerful feeling. AIs are evolving in different environments, so any feelings they have will be correspondingly different from our own; but there are probably some cases approaching familiarity. There are no doubt many flavors of sentience.

So your neurons “have feelings” insofar as they operate within a larger engine that processes information, some of which ends up being described as feelings. Once those neurons leave your body and enter into a rat, they will be part of a smaller but still relatively large engine that does the same thing, though in a somewhat different, ratty way, and so there will still be feelings. But not your feelings; not the rat’s feelings, being felt by you. No, just the rat’s feelings, felt by the rat. That should help to diminish the Ewww, I hope.