by David J. Lobina

In my series on Language and Thought, I defended a number of ideas, to wit: that there is such a thing as a language of thought, a conceptual representational system in which most of human cognition is carried out (more specifically, the process of belief fixation, or thinking tout court, in which different kinds of information, whether visual, aural, or drawn from memory or from other beliefs, are gathered and combined into new beliefs and thoughts); that the language of thought (LoT) is not a natural language (that is, it is not, for instance, English); that cross-linguistic differences do not result in different thinking schemes (that is, different LoTs); that inner speech (the writer's interior monologue) is not a case of thinking per se; and that the best way to study the LoT is through the study of natural language, albeit from different viewpoints.

In the following two posts, I shall change tack a little and consider an alternative approach (as well as some alternative conclusions), at least in some rather specific cases (see endnote 1). As pointed out in the aforementioned series on the LoT, the study of human thought can be approached from many different perspectives; central among these, and my own take, is to study the "form" or "shape" of thought through an appropriately focused analysis of language and linguistic structure, a position that raises the big issue of how, if at all, language and thought relate.

And in considering the relationship between language and thought, it is often supposed that the rather sophisticated cognitive abilities of preverbal infants and infraverbal animal species demonstrate that some form of thought is possible without language. That is, that thought does not depend upon having a natural language, given that many non-linguistic abilities are presumably underlain by a medium other than a natural language.

Even though there must be some truth to this view, its rationale is not as secure as it prima facie seems; put differently, its import is not as extensive as is presumed. In this and the following post, I will in fact argue that the study of preverbal and infraverbal cognition can often lead to rather mistaken views about the mental representations and cognitive architecture underlying adult human thought, and that is a bad thing.[i]

Regarding the cognitive abilities of non-human animals, and despite how impressive some of these may be, there do not seem to be any grounds to believe that a significant amount of the richness of human cognition is to be found in animal psychology, for the very simple reason that different species may well possess rather different cognitive structures. I'm not denying that other animals have conceptual representations or, in general, that there are various homologies between human and animal cognition. What I'm suggesting is that each species very likely has its own conceptual structure, and as a result there will likely be very few correspondences at the top end of cognition, the realm that has interested me in previous entries (viz., language, thought, the fixation of belief, etc.). The situation may well be slightly different at the lower end of cognition (perception, attention, etc.), but this will be of no concern here.

In order to frame the discussion, and as is my usual wont, I shall assume a LoT model of cognition. I will not rehearse the entire LoT argument again; all I need to state here is that such models characterise cognition as a system of internal representations that can combine with each other into ever more complex representations by means of a number of syntactic and semantic rules. In turn, by thought (and thinking) I shall understand what Jerry Fodor called the fixation of belief, a process that requires entertaining and combining different propositions (roughly, a proposition is the meaning of a sentence); under this construal, to have a thought is to entertain a proposition, and to think is to combine different propositions with each other so as to form new beliefs.[ii]

The literature on what sort of mental representations and computations humans possess and employ is vast, but we are still awaiting a study of animal cognition that demonstrates a clear and meaningful correspondence to any of the syntactic/semantic rules that human higher cognition is standardly taken to exhibit. As a case in point, a 2008 target article in Behavioral and Brain Sciences argues that there is in fact a large discontinuity between human and nonhuman minds, and the commentaries the paper received suggest that this view is shared by a not insignificant number of people in cognitive science.

The most impressive cognitive abilities of other species, according to the authors of the article, differ in kind and not only in degree from those of human beings, and this difference, they argue, cannot be reduced to the uniquely human possession of natural language, the factor most often regarded as the obvious point of difference. If this is correct, then the differences between animal and human cognition would be rather widespread, the human mind remaining 'distinctively human even in the absence of…language', to quote from the article. This is not to say that language is not an important contributing factor; what the article disputes, correctly I think, is the implication that 'aside from our language faculty, human and nonhuman minds are fundamentally the same'.

The claim that human and nonhuman minds are fundamentally the same aside from the presence or absence of the language faculty is probably too strong, though a number of scholars do argue that what makes human cognition unique vis-à-vis animal cognition is the addition of the language faculty and the mental reorganisation such an addition plausibly brings about (Paul Pietroski and Wolfram Hinzen are two philosophers who have argued for this position in one way or another).

I would argue that we should treat any correspondences or analogies between animal and human cognition with a grain of salt. What’s more, I would actually argue that the concept of a LoT should not be taken as a unitary phenomenon at all; there is no THE LoT, the evidence for which can be found in the cognition of humans as much as it can be found in the cognition of other species. Instead, I submit that there must be such a thing as the human LoT, a conceptual system unique to, well, humans.[iii]

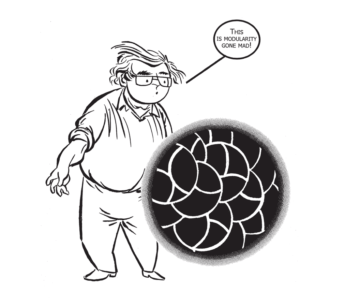

Be that as it may, and as anticipated in endnote 1, Peter Carruthers and Paul Pietroski have claimed that animal cognition can shed significant light on the type of mental architecture humans possess, and both have put forward rather specific arguments to that effect. Carruthers, for instance, takes the human mind to be massively modular, contrary to the picture Fodor famously drew in his 1983 book, The Modularity of Mind, wherein Fodor claimed that only some mental processes, chiefly perceptual ones, are specific to a given task (or domain) and do nothing else; and the evidence Carruthers has gathered for his conclusion comes from animal cognition. Carruthers has chronicled some of the domain-specific cognitive abilities of various animal species (that is, one ability per problem or task) and has argued that the "massive modularity" of animal psychology bears on whether the human mind itself is modular, that is, composed of domain-specific systems. Indeed, Carruthers claims that the human mind is composed of multiple domain-specific mental processes, perhaps through and through. Fodor was shocked.

The sort of inference Carruthers defends, however, appears to rest on an analogy between two very different 'universes', as it were, and this makes for a rather unpersuasive argument. The analogy would be between the universe of animal cognition, including all the cognitive lives in that universe, and the universe of the human mind, the cognitive life of humans. These are surely two very different things: the former spans the cognitive lives of many different organisms, whilst the latter is a single cognitive system, the human mind. After all, the postulation of domain-specific abilities in animal cognition, often one specific ability per species in fact, surely does not warrant the conclusion that the human mind, the mind of yet another species, is as a result composed of various domain-specific systems, massively so or otherwise.

The specificity of animal abilities is, as it happens, a rather nuanced matter. The architecture usually postulated for these abilities is a domain-general one: a rather simple read/write mechanism akin to a Turing machine (Randy Gallistel is the go-to scholar here). So at this level most, if not all, animal cognitive abilities would be underlain by the same sort of architecture, that is, the same sort of mechanism. The specificity, and thus the variability, lies in the sort of information the read/write mechanism manipulates in each species, and consequently in the sort of operations the system carries out in each case (I'm ignoring differences in memory capacity, attention, etc., but these are important factors too).

As an example, the representations a foraging bee employs and the calculations it carries out are rather different from those of the African ant, and yet the underlying architecture would be roughly the same in both cases. This particular point is somewhat lost in discussions of which domain-specific abilities animals exhibit and what this means for human cognition, and yet it would appear to be a crucial one: different calculations imply different primitives (representations), and thus different LoTs.[iv]

Pietroski finds himself in a similar position in his account of the human conceptual system. According to Pietroski, there is no global or domain-general LoT in humans prior to the acquisition, or emergence, of natural language (his account is meant to apply to both language acquisition and language evolution). Rather, the languageless mind is an unconnected mind, with different mental systems manipulating different types of concepts that cannot combine with each other because they have different 'formats'. In particular, Pietroski argues that pre-linguistic concepts vary in "adicity" (the number of arguments a concept takes, some taking one argument, others two, and so on), and this bars combination, since concepts are claimed to need the same adicity in order to combine. According to Pietroski, the acquisition/emergence of natural language reformats the different types of concepts into a common code, namely a set of monadic concepts, each of which takes a single argument (this follows from the sort of semantic theory Pietroski favours, but that is by the by here), and this permits conceptual combination. But why does Pietroski believe that the languageless mind is not an integrated mind to begin with (pun intended)?

As was the case with Carruthers, Pietroski also finds the argument from animal cognition to massive modularity a compelling one, and this same reasoning suggests to him that pre-linguistic concepts are ipso facto not fully integrated because they belong to different modules and thus exhibit different format properties (different adicities, as mentioned).[v] However, from the fact that different animal species manipulate different representations for different problems, representations that appear to be incommensurable to one another (recall, very often one domain-specific ability per species), nothing at all would follow regarding the human mental architecture, let alone the character of human concepts (or, indeed, regarding the possibility of a domain-general LoT).

My point, to be more specific, is that Pietroski cannot derive the different kinds of (human) pre-linguistic concepts he postulates from the study of animal cognition. That is, and following on from the point I made regarding Carruthers's own argument, the apparent fact that the representations of one species differ in character from those of another species does not warrant the conclusion that something similar is mirrored in the human mind; it certainly does not establish that different systems of the mind manipulate representations of different adicities.

To be super clear, I have tried to make two points in this post. First, that different animal species very likely possess rather different conceptual systems, despite possible homologies at different levels; and second, that the results of the study of animal psychology do not support the rather strong positions that scholars such as Carruthers and Pietroski have adopted regarding the nature of the human mind. In short, if there are cognitive homologies between human and non-human animal species, these are unlikely to be found at the top end of cognition (language, concepts, propositions, belief fixation, etc.).

If so, some of the claims I have argued for in previous posts appear to be safe from what the cognitions of other animal species tell us about the human mind. But what about an actual human mind? That is, what about the mind of a preverbal infant as it acquires a natural language all of its own? Stay tuned for the next entry!

[i] A disclaimer: I will not provide a comprehensive review of the literatures on infant and animal cognitions, vast as they are, nor will I be arguing that the study of infant and animal cognitions is useless for the purposes of outlining what human thought is like – or, for that matter, that these two topics should be avoided altogether for the purposes of a theory of thought. The study of infant cognition can sometimes yield a purer view, as it were, of human thought (or at least offer a series of snapshots of the development of thought and thinking abilities), and this is worthwhile information in its own right. As for nonhuman animal cognition, many studies suggest that there are wide-ranging homologies between human cognition and some animal abilities, and this too is clearly significant for the study of thought (though such homologies are usually broad rather than deep). Instead, I shall just target views that connect infant/animal cognition and (adult) human thought in a manner that is not entirely warranted, resulting in unsupported conclusions as to the nature of thought; the philosophers Peter Carruthers and Paul Pietroski will be the main culprits here.

[ii] The LoT story is an old one, as I have mentioned before, but it is very much in vogue these days. Indeed, LoT models are once again quite common in cognitive science, especially in combination with Bayesian models of cognition (see, for instance, here).

[iii] This is perfectly compatible, by the way, with the often-made point that the sophisticated abilities of animals prove that such a thing as a LoT, qua a medium of representation distinct from natural language, may indeed be possible, and, complementarily, that the same sort of representational-computational story (that is, theory of cognition) can be used to account for both human and animal cognition. There is in fact some ambiguity in the literature as to what is meant by the 'language-of-thought hypothesis', and this may explain some of the confusion. Some scholars just mean a conceptual representational system, as I do for the most part, while others simply have a type of theory or account in mind (viz., the so-called computational theory of mind, often closely related to Fodor's particular type of computational theory of mind).

[iv] Say what? Foraging bees and the African ant? In a lengthy article, Gallistel lays it all out.

[v] There is certainly a fair amount of speculation in all this: why do the concepts of different modules differ in adicity? What establishes the adicity of each concept/domain? Are there only mismatches in adicity, never any correspondences? And so on.