by Charlie Huenemann

“Thus the concept of a cause is nothing other than a synthesis (of that which follows in the temporal series with other appearances) in accordance with concepts; and without that sort of unity, which has its rule a priori, and which subjects the appearances to itself, thoroughgoing and universal, hence necessary unity of consciousness would not be encountered in the manifold perceptions. But these would then belong to no experience, and would consequently be without an object, and would be nothing but a blind play of representations, i.e., less than a dream.” (Immanuel Kant, Critique of Pure Reason, A112)

“Thus the concept of a cause is nothing other than a synthesis (of that which follows in the temporal series with other appearances) in accordance with concepts; and without that sort of unity, which has its rule a priori, and which subjects the appearances to itself, thoroughgoing and universal, hence necessary unity of consciousness would not be encountered in the manifold perceptions. But these would then belong to no experience, and would consequently be without an object, and would be nothing but a blind play of representations, i.e., less than a dream.” (Immanuel Kant, Critique of Pure Reason, p. 112(A))

[IN OTHER WORDS: Without concepts, experience is unthinkably weird.]

Back in the 17th century, some philosophers tried to place all knowers on a level playing field. John Locke claimed the human mind begins like a blank tablet, devoid of any characters, and it is experience, raw and unfiltered, that gives the mind something to think about. Since everybody has experience, this would mean everybody could develop knowledge of the world, and no one would be inherently better at it than anybody else.

It’s a valuable idea, and in the neighborhood of a great truth, but not very plausible as a model of how we manufacture knowledge. Later philosophers argued that, if this is how we do it, then we really don’t know much. For example, David Hume could not see how anyone could ever develop the idea of causality: you can watch the events in a workshop all the livelong day, and though you might see patterns in what happens, you will never see the *necessity* that is supposed to connect a cause with an effect. (Philosophers writing about this stuff have a hard time avoiding italics.)

But clearly we do end up with causal knowledge, as Hume himself never doubted, and we manage to navigate our way through a steady world of enduring objects. We somehow end up with knowledge of an objective world. And we don’t remember that arriving at such knowledge was all that difficult. We just sort of grew into it, and now it seems so natural that it’s really hard to imagine not having it, and it’s even difficult not to find such knowledge perfectly obvious. But in fact it is anything but obvious (as Jochen Szangolies recently explored).

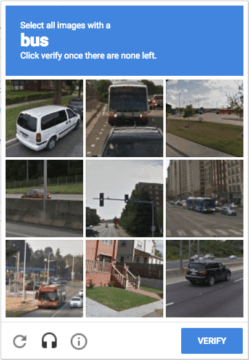

This nonobviousness of obvious knowledge is why CAPTCHA challenges are all over the internet. A CAPTCHA security challenge presents us with a set of pictures and asks us to click on the squares with a stop sign or a bus or something. It works as a security challenge because it is devilishly difficult to train a robot to recognize the things we recognize quite easily. As of this moment, it still takes a human to spot a bus. (“CAPTCHA,” in case you didn’t know, is an acronym for “Completely Automated Public Turing test to tell Computers and Humans Apart.”) We find it easy to do, and nearly impossible to teach to a smart machine.

But why should it be so hard for a machine, if Locke & Co. were right in claiming that experience simply writes itself upon blank minds? What more has to be added to the picture?

Immanuel Kant’s answer (buried under the verbal rubble that is the Critique of Pure Reason) was that human minds aren’t blank, but have a definite structure or format. It’s as if the mind has an operating system that puts the sensations that come along into a specific sort of form. Our cognitive operating system—the HUMAN OS—constructs grammatical sentences, as it were, from the squiggles and loops that the world shoots at us. If we could figure out exactly how the HUMAN OS manages to do this, we could program AIs to do the same thing, and in a jiffy we’d have self-driving cars and CAPTCHA challenges would become useless and we could finally get on with the End of Days. But we don’t know how the HUMAN OS does this—at least, not yet—and so we have to resort to brute force machine learning tactics to come up with algorithmic shortcuts that get machines to the right answers even when neither we nor they know what the hell they are doing. (Though this is exactly our situation with respect to our own brains, come to think of it.)

Kant thought he knew the structure of the HUMAN OS, and that’s what his massive Critique is all about. But it’s not so useful to today’s AI researchers because Kant was up to something very different from cognitive psychology. That is to say, he didn’t really care about neural nets or how the OS is programmed into the brain. He was interested in a deeper question. To get to that deeper question, we need to put on our shiny thinking caps—so you go get yours, I’ll get mine….

OK? OK. Our task is to think about what needs to happen before we can even begin to think about cognitive psychology. Before we can start doing any empirical research at all, we have to be able to trust that we are in genuine causal contact with a law-governed causal world rich with patterns and form. If we are wrong about this, and we’re in a simulation, or a dream, or The Matrix, then anything we come up with in the cognitive science workroom is just a pile of dookie (in the technical sense). So before we can figure out how our brains work we need to figure out why we think we have the competence to even pursue such a question. That’s the deeper question Kant is interested in: what reason do we have to think any of our thinking isn’t just a pile of dookie?

OK? OK. Our task is to think about what needs to happen before we can even begin to think about cognitive psychology. Before we can start doing any empirical research at all, we have to be able to trust that we are in genuine causal contact with a law-governed causal world rich with patterns and form. If we are wrong about this, and we’re in a simulation, or a dream, or The Matrix, then anything we come up with in the cognitive science workroom is just a pile of dookie (in the technical sense). So before we can figure out how our brains work we need to figure out why we think we have the competence to even pursue such a question. That’s the deeper question Kant is interested in: what reason do we have to think any of our thinking isn’t just a pile of dookie?

You would be right if your response to this is, “Aha! Kant here is raising an age-old skeptical question about the veracity of human knowledge!” Yes. But here is where Kant offers a genuinely new approach. Kant turns the skeptical question around. He asks: what shall we have to assume in order to be confident that our thinking isn’t just a pile of dookie? His answer to this question (spoilers!) is that the only way we can be absolutely sure that every experience we have of the world will follow patterns and regularities is if we assume that the world has to conform to our expectations. We will never experience anything that doesn’t have a format our minds will recognize—because anything we experience will be formatted by the mind itself.

It’s only by making this mystifying assumption, Kant argued, that we can explain how we end up with such seemingly accurate knowledge of an objective world. The world meets our expectations, by and large, because we interpret it in such a way as to meet our expectations.

Your mind may well be buzzing with some good questions at this point, but I ask you to suspend those questions so that we can update Kant’s view with a more plausible one. Kant thought the HUMAN OS, the thing that brings structure both to the world and our thinking about the world, was a stable, unchanging entity that put all our experience and knowledge of the world into a familiar format. Later philosophers like Hegel and Cassirer followed Kant’s line of thought, but suggested instead that the HUMAN OS was the result of history or culture: that we carve up our experience and turn it into objects in the ways we do because we are carried along by a larger parade of traditions, cultural practices, and institutions of symbols and images like language, history, and mythology. Other peoples, finding themselves carried along by different parades, see the world differently. (Well, yes, that checks out.) The HUMAN OS does change over time, these Kantianesque philosophers will admit, but it’s exceedingly hard for us to observe the change, in the same way that it’s hard to notice when you need a new eyeglass prescription.

I think this view is more plausible than Kant’s original idea because when we see the world, we are in fact making judgments about what is in the world (this Kant knew), and those judgments can only be shaped by expectations, categories, prejudices, languages, and interests that come from the environment that built our minds (this Kant didn’t like so much). When we see the world, our culture sees the world through our eyes.

But if there is anything true in this abstract story, it means that we’re not going to reach an understanding of how we see the world, or even how AIs see the world, without paying closer attention to the role of culture. It may even be true that CAPTCHA challenges work, at least for now, precisely because, as wonderful as our machines are, they ain’t got no culture.