by Jochen Szangolies

The world we inhabit is a world of objects. Wherever we look, we find that it comes to us already disarticulated into cleanly differentiable chunks, individuated by certain properties: the mug on the desk is made of ceramic, the desk of wood; it is white, the desk black; and so on. By some means, these properties serve to circumscribe the object they belong to, wrapping it up into a neatly tied-up parcel of reality. No additional work needs to be done cutting up the world at its joints into individual objects.

Moreover, this fact typically doesn’t strike us as puzzling: objects seem entirely non-mysterious things. I could describe this coffee mug to you, and, if I include sufficient detail, you could fashion an identical one. The same procedure could be repeated for every object in my office, indeed, for the entire office itself.

Certainly: there may be edge cases. Where I see one cloud, you might see two. When the mug is glued to the desk, they don’t seem to become one object; but certain sorts of fastening, such as assembling various electronic components into a computer, seem to beget novel objects over and above mere collections of parts. Still: there are various ways out of these troubles. The computer can be described as various sorts of parts and their arrangement; the cloud by its shape.

Objects seem eminently describable sorts of things. There seems to be no residual mystery beyond an exhaustive specification of their properties. But not everything is as amenable to description, as speakable, as objects seem to be.

As a canonical example, take qualia, the strange, subjective impression of ‘what it’s like’ to see a particular shade of red (for instance). No matter my verbal prowess, it seems doubtful that I could convey this knowledge to somebody who has never had an appropriate impression, say, due to congenital color blindness. But opinion is divided: is there really a ‘there’ there, or are qualia so hard to pin down conceptually because they don’t exist after all?

In the history of philosophy, various kinds of things have been claimed to resist verbalization, or even conceptualization—to be unspeakable. The divine, the sacred, the numinous are typically said to be beyond human imagination—and thus, expression. But then again, the skeptic might wonder: are there such things?

Wittgenstein’s famous final proposition closing out the Tractatus, ‘whereof one cannot speak, thereof one must be silent’, can be (and has been) read in two different ways. First, that the attempt to speak about things ‘beyond’ conceptualization is just nonsense; that only clearly definable terms have meaning. This reading (these days, I think, mostly considered a misreading) is associated with the logical positivists, who sought a precise language capable of encompassing all and only empirically verifiable truths, stripped of all superfluous metaphysical chaff.

Second, that there are truths or topics that cannot be verbalized; that what we can talk about is just a part of what there is, and that the unspeakable rest of it will always lurk beyond our verbal grasp. It is in this sense that Wittgenstein acknowledges that his own propositions in the Tractatus must ultimately be meaningless, that they can merely ‘indicate’ a certain truth—that they must serve as a ladder to be thrown away after having climbed it.

Such an attempt to ‘speak the unspeakable’ naturally has an air of paradox. Ultimately, the Tractatus itself is just a bit of text like any other; what it contains thus must be expressible by text. But if it claims that certain things are not so expressible, then it has, even if only negatively, managed to express something about them: thus undermining its own claim.

There is, I think, a way out of this dilemma: a way of speaking the unspeakable—or perhaps evoking it. To illustrate this, I consider two sources: first, the attempt of finding automated ways of speaking, via modern-day artificial intelligence; and second, the ways in which the unspeakable is alluded to in the works of modern horror fiction, particularly as pioneered by H. P. Lovecraft. Along the way, we will see that, beyond a thin veneer of apparent reasonableness, even everyday objects hide cyclopean chasms of the unspeakable, leaking into our world through every crack in the conceptual cloaks we use to try and cover them up.

The importance of Lovecraft to philosophy has been investigated by Graham Harman within his Object-Oriented Ontology (OOO) in the 2012 book Weird Realism: Lovecraft and Philosophy. The following will add little to his analysis. My main reason for writing it is that I believe there is something to these points even absent a commitment to the OOO framework, especially regarding the speakable/unspeakable dichotomy. In particular, its dividing line can be seen to cut along the interface between ‘System 1’ and ‘System 2’ in dual-system psychology, or, as I like to call them, the octopus and the lobster.

The Humean Approximation

It may have been the apparent graspability of objects that led the 18th-century Scottish philosopher David Hume, one of the most notable figures of the empiricist tradition in philosophy, to his bundle theory: an object, Hume held, is nothing but the collection of its properties. Thus, an apple is nothing over and above the collection of its roundness, redness, sweetness, and so forth; there is no further substance that has these properties but could equally well assume others.

These properties, in so far as they do not exclude one another, can in principle be freely combined to create new objects: something that is round and red, but tart rather than sweet, might go under the name ‘tomato’, for instance. Objects are just whatever can be mixed from this bag of ingredients, that is, of properties.

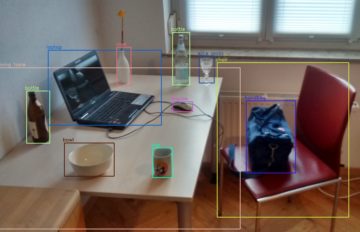

This picture is challenged by the way modern AI systems recognize objects. Where according to a bundle theory, an object should be individuated by identifying its properties, modern AI systems typically pass judgments on images as a whole, in a way that is not clearly differentiable into the recognition of individual elements. Moreover, their attention is often not limited to what we consider the ‘object’, but includes relationships with the environment: adding elements to a scene can change the way different elements are recognized. A Humean object, by contrast, is its own thing, and its character doesn’t change depending on its surroundings.

On Hume’s theory, then, object recognition might work by deciding a sequence of alternatives: Is it round or square? Red or green? Sweet or tart? In AI research, systems built on this sort of approach are known as ‘expert systems’. They are based on the idea that one could just program a huge database of domain-relevant distinctions, and automatically decide them one by one, to eventually achieve an unambiguous verdict, say, the diagnosis of some illness. While it has its uses, this program, nowadays often somewhat mockingly referred to as ‘GOFAI’ (Good Old-Fashioned AI), has largely fallen out of favor.
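To make the ‘sequence of alternatives’ concrete, here is a minimal sketch in Python of recognition as a chain of binary decisions. It assumes the simplest possible form, a hand-written decision tree, rather than the rule-based inference engines real expert systems used; the property names, values and verdicts (`shape`, `color`, `taste`, ‘lime’, ‘box’) are hypothetical, chosen only to echo the apple/tomato example. The function also returns a trace of every decision it made.

```python
from typing import Dict, List, Tuple, Union

# Hypothetical toy 'expert system': each node asks about one property and
# branches on its value; a plain string is a final verdict.
Node = Union[str, Tuple[str, Dict[str, "Node"]]]

TREE: Node = (
    "shape", {
        "square": "box",
        "round": ("color", {
            "green": "lime",
            "red": ("taste", {
                "sweet": "apple",
                "tart": "tomato",
            }),
        }),
    },
)


def classify(obj: Dict[str, str], tree: Node = TREE) -> Tuple[str, List[str]]:
    """Decide one distinction at a time until a verdict is reached.

    Returns the verdict and a trace of each question asked and answered.
    If a property is missing or has an unforeseen value, returns 'unknown'.
    """
    trace: List[str] = []
    node = tree
    while not isinstance(node, str):
        prop, branches = node
        value = obj.get(prop)
        trace.append(f"{prop}? -> {value}")
        if value not in branches:
            return "unknown", trace
        node = branches[value]
    return node, trace


if __name__ == "__main__":
    verdict, trace = classify({"shape": "round", "color": "red", "taste": "tart"})
    print(verdict)  # tomato
    print(trace)    # ['shape? -> round', 'color? -> red', 'taste? -> tart']
```

Because every step is an explicit, nameable distinction, a wrong verdict can be pinned to a single line of the trace, for instance a tomato mislabeled as an apple because the ‘taste’ question was answered ‘sweet’.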

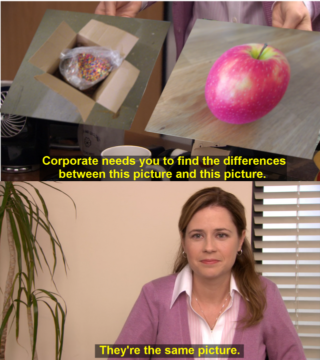

An advantage of this method, however, is that in case of failure, it is relatively transparent where things went wrong: misidentifying a tomato as an apple could be due to a mistake in deciding the ‘sweet/tart’ disjunction. In contrast, the mistakes made by a modern-day neural network AI, such as misidentifying what looks (to us) like a random abstract pattern as the picture of a starfish, are severely puzzling from a Humean point of view: the pattern has none of the properties Hume would have claimed constitute the object.

Imagine asking somebody what’s in a box full of confetti, and having them confidently declare it an apple! We would surely conclude that there is something objectively wrong with the perceptual apparatus of such a person. But for the AI, there is no essential difference between the pattern and the starfish: both fit naturally into the same category; both look, inscrutably, ‘starfish-like’.

This opens up a first worry: the AI will never be able to tell that its classification is ‘wrong’; but then, by what token do we think we’re ‘right’? All we have is our collective judgment: but that’s really not saying anything other than that a bunch of similar judges, whether artificial or natural, agree on the judgment. There is, beyond our judging an apple to be an apple, no way to appeal to an independent standard to adjudicate whether we’re right in this judgment.

Suppose we encountered an alien species whose judgments about objects systematically differ from ours, yet who are internally just as coherent as we are: who’s to say who’s right? Perhaps our lumping certain objects together under the category ‘apple’ is not different from the AI seeing no distinction between the pattern and a starfish, after all!

This becomes even clearer if we let an AI run in ‘reverse’ and try to produce an image of its own. AI-generated imagery has seen rapid development in the last few years, with algorithms such as OpenAI’s DALL-E producing stunningly realistic pictures from descriptions. But again, I think this is most illuminating when it goes wrong.

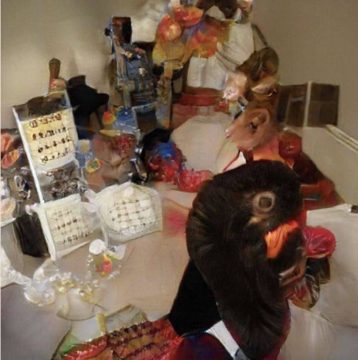

In 2019, a picture was posted to Twitter with the caption ‘name one thing in this photo’. At a cursory glance, the image might easily be taken to show a somewhat disorganized room, with various items (perhaps a trash bag, some pieces of writing, a heap of laundry) strewn about. But as soon as one tries to actually identify any of these items, one finds that the image resists being disarticulated into clearly circumscribed objects. It is an example of a world that, unlike ours, is not a world of objects: rather, it is a world of stuff which, while readily visually appreciable, resists conceptualization. There is no list of ‘objects’ in this ‘room’ that would enable one to recreate a copy; nothing there is just a bundle of listable properties.

The opposite effect is likewise possible. In Oliver Sacks’ classic The Man Who Mistook His Wife for a Hat, the titular case concerns a man suffering from visual agnosia: although his eyesight itself is intact, he is unable to recognize concrete objects, that is, to integrate their properties into a coherent whole. Confronted with a rose, the patient, Dr. P, describes it as ‘a convoluted red form with a linear green attachment’, while a glove is described as ‘a continuous surface, infolded on itself’ which appears to have ‘five outpouchings’. Here, we have the properties, but the bundling, the coming together into an object, seems to fail.

What this seems to suggest is a picture in which the world does not simply come to us neatly sliced into objects, but in which we do the slicing, by coordinatizing it with a set of concepts. The world thus splits in two: the pre-conceptual, amorphous reality of stuff, and the Humean approximation, the world of our shared experience, of objects with properties we can talk about. On one side stands an unspeakable reality; on the other, the speakable representation we fashion from it.

I should perhaps emphasize that this is not the Kantian distinction between noumena, things in themselves, and phenomena, objects of our senses: both speakable and unspeakable aspects of objects feature in our experience of them, but, for obvious reasons, only the speakable, bundle-of-properties conception ever makes it into our shared discourse. Rather, it parallels the distinction, familiar from dual-system psychology, that partitions our cognitive faculties. There, we have ‘System 1’, the automatic, effortless, unconscious, implicit recognition at work, for instance, when identifying familiar faces (the octopus, ever entwined with the world); and ‘System 2’, the explicit, analytic, step-by-step, conscious reasoning carried out, e.g., when constructing a logical argument (the lobster with its analytic claw).

When Things Come Apart

In the past, I have argued that the former is analogous to a neural network, like a deep learning system, while the latter is like a more traditional algorithm, carrying out instructions. The dual nature of the object then consists in a System 1-style implicit judgment together with System 2’s analytic disarticulation into clearly delineated properties. System 2 is what we use to convey information to one another: when I claim to have seen a red kite circling overhead, hunting for mice, and you ask how I know it was a red kite, I will say something like ‘I saw its characteristic forked tail and rufous wing coverts’. I will not say ‘it looked red kite-ish’, yet this is precisely the sort of ‘holistic’ judgment passed by System 1. Generally, this sort of judgment has no easy translation into System 2-style lists of reasons, just as the working of a neural net has no easy translation into an expert system-style decision tree.

Each aspect is indispensable to our concept of an object: if System 2’s bundle of properties is missing, we end up with an inscrutable judgment of the sort that identifies random patterns as starfish; if System 1’s holistic binding is absent, all we have are disarticulated, local properties, as in the case of Dr. P. But only System 2’s conceptual image of the object can feature in conversation, leaving the part of the object supplied by System 1 unspeakable.

The two conceptions are not entirely separate, however. Any bundle of properties calls up an accompanying object in toto, rather than, as in Dr. P’s case, remaining disjointed. Describing a ‘red, round, glossy fruit’ will evoke a picture of a whole object, rather than a dissociated collection of properties. One might speculate that the mechanism at work here is not entirely dissimilar from the way recent AI image generators produce images from text, thus supplying a System 1, ‘unspeakable’ object on the basis of a System 2 prompt. The speakable thus backreacts on the unspeakable, and skillful manipulation could enable us to use this backreaction to achieve a kind of commonality as regards the unspeakable as well. A speakable bundle of properties can be made to point beyond itself towards the unspeakable, calling it up in a way not dissimilar to how the right incantation summons a spirit from beyond.

We bump up against the insufficiency of our conceptual tools to encompass objects in their totality only rarely. Thus, for the most part, we live with the fiction that the world is a world of objects; but in reality, it is a world of stuff, from which objects are fashioned only by convention and approximation. In this sense, we hide the world behind our concepts, and each crack in this self-constructed veil is an object of both fascination and loathing: the presence of the unspeakable shows us that the world is not for us.

It is then no wonder that the best tools I am aware of for bringing the unspeakable world to light, at least to an extent, come from the tradition of horror fiction: horror emerges when the cardboard facade we erect in the world’s stead is shown as such, when cracks begin to appear, and when, finally, the Potemkin village collapses and reveals its unfathomable foundation.

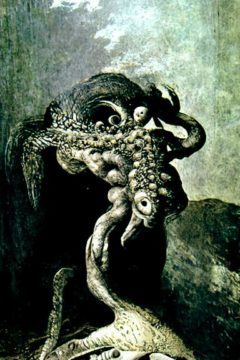

The greatest mastery in marshaling the tension between the world and its bundles of properties to horrific effect has been achieved, I think, in the work of H. P. Lovecraft. Lovecraft is often chided for his overuse of adjectives, such as ‘cyclopean’, ‘eldritch’, ‘squamous’, or ‘blasphemous’. But from the point of view of ‘deconceptualizing’ the world, his work is that of replacing the familiar with the unknown: explicitly substituting elements of the bundles of properties we immediately call to mind when reading descriptions with more ill-fitting ones, creating a kind of friction between stuff in its unspeakable reality and the properties normally featured in its Humean approximation.

We may think of these properties as the tip of the iceberg: that part of the object that juts out into the world of our discourse, that can be freely shared among members of a linguistic community. By this substitution, these objects are alienated from their usual form, ever so slightly becoming something else. Thus, this technique serves as a reminder of the gulf between the object-as-bundle and the reality it approximates.

The effect can be increased by introducing properties that stand in mutual tension, or are openly paradoxical. When Lovecraft speaks of an ‘angle which was acute, but behaved as if it were obtuse’, or when he combines ‘simultaneous pictures of an octopus, a dragon, and a human caricature’, there is nothing there that these bundles of properties could approximate—at least, not in our usual understanding of these properties. The effect is like that of an inconsistent geometric shape, such as a Penrose triangle: locally, at each vertex, nothing is wrong with the figure—but the overall shape is impossible, and highlights the unreality of the construction. Similarly, the inconsistent bundle-like descriptions highlight their own unreality, and thus, indicate the approximate nature of all such descriptions. The reality these inconsistent bundles approximate, the unspeakables they evoke, are not everyday objects, but literal things that should not be.

In Lovecraft’s fiction, then, we have a way to make vivid the gap between the world of objects we ordinarily take ourselves to inhabit, and the nature of that world as ultimately a mere approximation to a reality that does not cleanly decompose into human concepts. Lovecraft indicates the unspeakable by remixing, retouching, and realigning speakable parts into bundles of properties that approximate no familiar reality, thus both evoking new, horrific unspeakables and highlighting the gap between our ordinary concepts and the unspeakable reality behind them, even as concerns objects as familiar as apples (or angles).

What does this mean for Wittgenstein? We need not be silent regarding that of which we cannot speak. While we cannot directly refer to it, we can evoke the unspeakable, as in a ritual, by skillful manipulation of the speakable. But, keeping the fate of many Lovecraftian narrators in mind, we ought perhaps, while not silent, to be careful.