by Emrys Westacott

If, for a long time now, you’ve been getting up early in the morning, setting off to school or your workplace, getting there at the required time, spending the day performing your assigned tasks (with a few scheduled breaks), going home at the pre-ordained time, spending a few hours doing other things before bedtime, then getting up the next morning to go through the same routine, and doing this most days of the week, most weeks of the year, most years of your life, then the working life in its modern form is likely to seem quite natural. But a little knowledge of history or anthropology suffices to prove that it ain’t necessarily so.

Work, and the way it fits into one’s life, can be, and often has been, less rigid and routinized than is common today. In modernized societies, work is organized around the clock, and most jobs are shoehorned into the same eight-hour schedule. In the past, and in some cultures still today, other factors–the seasons, the weather, tradition, the availability of light, the availability of labor–determine which tasks are done when.

Nevertheless, it is reasonable to see the basic overarching pattern–a tripartite division of the day into work, leisure, and sleep–as having deep evolutionary roots. After all, the daily routine of primates like chimpanzees exhibits a similar pattern. Work for them consists of foraging, hunting, and building nests for sleeping. Leisure activities consist of playing, grooming, and other forms of socializing, including sex. They typically sleep rather more than us; but the structure of their days roughly maps onto that of most humans. The major difference between us and our closest relatives in the animal kingdom lies not so much in how we divide up our day as in the variety and complexity of our work and leisure activities.

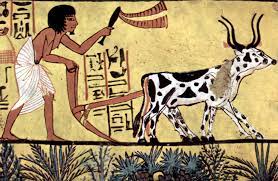

Paleontologists named one of our evolutionary ancestors “Homo ergaster” (working man) because this species, which emerged in Africa nearly two million years ago, appears to have made stone tools that were more sophisticated than those produced by their hominin predecessors. Yet it would be misleading to view the fashioning of tools as the beginning of what we call work, given the need for most primates to spend many hours seeking food. The transformative event that set Homo sapiens on the path toward clocking-on cards, production quotas, and efficiency reports was not the development of tools but the first agricultural revolution. Archeologists now believe that this began roughly twelve thousand years ago in Western Asia and spread from there over the next few millennia. Like the industrial revolution at the end of the eighteenth century, it greatly expanded the possibilities open to human beings in general, but for many of those living through it, especially early on, it quite possibly meant unprecedented misery.

In his sweeping historical narrative Sapiens, Yuval Noah Harari explains the two-edged character of the agricultural revolution. Planting crops and domesticating livestock must have seemed like a good idea to hunter-gatherers and nomads since it promised a more reliable and more abundant supply of food. That was the obvious benefit. But there were also significant costs, and these were not so obvious, at least not at first. Farming, which involves the arduous labor of clearing, ploughing, planting, weeding, irrigating, fertilizing, harvesting, and storing, is much harder work than foraging; it requires more time, energy, and strength. The work itself is tedious and unstimulating. It is more likely to cause physical ailments like slipped disks and arthritis. It leads to a less varied diet. And it renders the population vulnerable to crop failures.

The deepest problems created by the agricultural revolution, however, arose out of what would appear to be its successes. It made it possible for communities to support larger populations; and it led to economic growth, with stockpiled produce, increased production of tools, and new opportunities for trade. But population growth also meant an increased need for food, and this increased need sealed off any possibility of a return to the easier, simpler, hunter-gatherer way of life. The more mouths there were to feed, the harder people had to work. As for the new abundance of stored food, this meant that villages were more likely to be attacked and plundered. The solution to this problem was the creation of organized states with rulers and government officials organizing protection, keeping order, and levying taxes.

The growth of the state–i.e. some sort of public administration–introduced a new division of labor. There had always been some division of labor between the sexes. Now there were those who performed the basic necessary manual labor involved in producing the means of life, and others–rulers, officials, record keepers, priests, and other intellectuals, along with soldiers–who received the fruits of that labor while doing something else entirely. This division of labor led to increasingly hierarchical societies, with mental work, including governance and priestcraft, being esteemed and rewarded much more than manual work. Once the hierarchy was established, those at the top inevitably dominated those at the bottom, even though they were parasitically dependent on the latter’s labors. But here, too, there was no going back to the simpler, fairer, and more egalitarian form of life. Governments, ruling classes, hierarchies, parasitism, and exploitation were here to stay.

We today can, of course, happily applaud the agricultural revolution since it laid the foundations for what we call civilization with all its interesting and enjoyable cultural fruits. But it contained a paradox. In searching for an easier life, the people who made the agricultural revolution introduced a form of life that ended up being not easier but more laborious. Aiming for a secure existence, they made themselves vulnerable to new kinds of insecurity.

This is why Harari describes the agricultural revolution as “history’s biggest fraud.” For a very long time it actually made most individuals worse off than their foraging forebears. Moreover, this same paradox has bedeviled other periods of momentous technological progress. The industrial revolution promised the vastly more efficient production of useful items, which would then cost much less to buy. And it delivered on this promise. At the same time, though, it forced millions from the countryside into the filthy, congested squalor of the rapidly growing industrial cities, destroying cherished traditional ways of living and working in the process. Today we enjoy our mass-produced commodities while still being capable of feeling nostalgia for a (admittedly idealized) pre-industrial world in which people enjoyed a closer connection with their natural surroundings.

The computer revolution appears to be another of these world-changing transformations. It is already becoming difficult to remember quite how we used to do such things as arranging meetings or conducting research before the advent of e-mail, the internet and the cell phone. The benefits of this revolution are obvious: just consider the speed at which scientists and public health administrators were able to coordinate the development, production, and distribution of vaccines to combat COVID-19. But there are obvious costs as well, such as cell phone addiction, ubiquitous misinformation, the stress of keeping up with technological change, and large numbers of people left on the unemployment scrapheap due to automation.

As before, though, once the revolution is underway, there is simply no going back. We have already become entirely dependent on the new technology. Were it now to fail in some way, we would be largely helpless, as Emily St. John Mandel’s novel Station Eleven, which depicts a world following a devastating pandemic, makes dramatically clear. The lack of electricity alone would suffice to destroy our present civilization.

The impetus behind most technological innovation that affects the workplace, from the axe to AI, is to make it possible to accomplish some task more easily, or more efficiently. Many theorists have thus looked forward to a time when long hours of arduous labor will be a thing of the past since machines will do most of the work that needs to be done. In 1930 John Maynard Keynes speculated that by 2030 we would only need to work for fifteen hours a week. Bertrand Russell argued in 1932 that “the road to happiness and prosperity lies in an organized diminution of work,” and that the only thing blocking this road was a foolish and outmoded belief in the virtuousness of work.

There has been a great deal of talk over the past few decades about the effect of the computer revolution on work and the workplace. Much of it, like Jeremy Rifkin’s The End of Work, is in the foreboding mode: the machines are coming to get your jobs. This is understandable, since the roadblock that Russell spoke of is clearly still with us. Russell, it should be noted, advocated an “*organized* diminution of work” (italics added). But our current socio-economic system makes this hard to envisage. Politicians of all stripes preach versions of the traditional work ethic (hard work should be rewarded; slackers should not be subsidized), and they propose policies that they say will create lots of “good jobs.” By “good jobs,” they mean full-time, forty-hour-a-week jobs that pay decent wages and perhaps offer the prospect of overtime, not twenty-hour-a-week jobs that, combined with other progressive measures such as free universal health care and free universal child care, pay just enough for people to live comfortably and with ample leisure.

I am not saying that progressive politicians would be well advised right now to campaign on a platform based around the notion of a radical reduction in how much people need to work. To do so would probably be political suicide. Yet the main obstacle to the more leisured lifestyle that many people say they would prefer lies, ultimately, in the way we think rather than in any actual practical limitation on what is possible. Most modernized, technologized societies are, after all, wealthier than ever before in the sense that there is more money than ever sloshing around the system. And computerization has massively increased how much each individual worker can accomplish in many workplaces. Logically, then, the necessary conditions are present for us to organize things in the direction Russell recommends. Why we don’t appear to be embracing this opportunity is an interesting and important question.