Karen Hao in the MIT Technology Review:

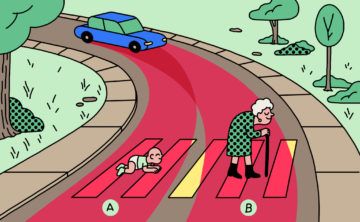

In 2014 researchers at the MIT Media Lab designed an experiment called Moral Machine. The idea was to create a game-like platform that would crowdsource people’s decisions on how self-driving cars should prioritize lives in different variations of the “trolley problem.” In the process, the data generated would provide insight into the collective ethical priorities of different cultures.

The researchers never predicted the experiment’s viral reception. Four years after the platform went live, millions of people in 233 countries and territories have logged 40 million decisions, making it one of the largest studies ever done on global moral preferences.

A new paper published in Nature presents the analysis of that data and reveals how much cross-cultural ethics diverge on the basis of culture, economics, and geographic location.

The classic trolley problem goes like this: You see a runaway trolley speeding down the tracks, about to hit and kill five people. You have access to a lever that could switch the trolley to a different track, where a different person would meet an untimely demise. Should you pull the lever and end one life to spare five?

More here.