by Ali Minai

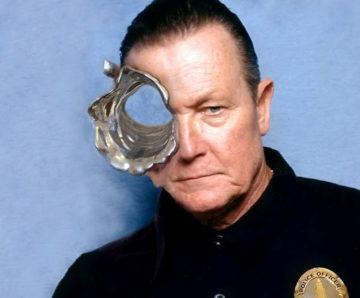

One of the most interesting and memorable characters in sci-fi films is the T-1000, the shape-shifting, nearly indestructible robot from the classic film Terminator 2: Judgment Day, starring Arnold Schwarzenegger. There are other, less prominent examples of shape-shifting intelligent beings in sci-fi – for example Odo, the chief of security on Star Trek’s Deep Space Nine, or the electromagnetic, gaseous, and otherwise inchoate life-forms encountered in various Star Trek episodes. These fictional examples raise the interesting question of whether intelligent beings without a fixed structure are feasible in practice – naturally or through technology. (In fact – speaking of Star Trek – the question could potentially be extended to teleportation as well since that would presumably involve the re-assembly of a disassembled body, but that is too remote a possibility to consider for now.)

Recently, several research groups have worked on building robots that can reconfigure themselves autonomously into different shapes and perform different types of actions suitable to their current form. For example, a robot consisting of a large group of small, identical modules could turn itself into a compact sphere to roll down smooth surfaces, flatten itself to slide under doors, grow limbs to climb stairs, or take a snake-like form to crawl away. Such robots, with various degrees of reconfigurability, have now been implemented extensively, both in simulation and in actuality. Some of these robots are, in fact, controlled by external computers to which they are connected or by a centralized brain built into the robot, but the more interesting ones are based on distributed autonomous control: Each module in the robot communicates with other modules near it and, based on the information obtained, triggers one of several simple programs it is pre-loaded with. These programs might cause the module to send out a particular signal to its neighbor or make a simple move such as a rotation, alignment, detachment, or attachment. As all these mechanically connected modules signal and move in response to their triggered programs, the robot assumes different shapes and global behaviors such as locomotion or climbing emerge through self-organized coordination.
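
The distributed control scheme described above can be illustrated with a minimal Python sketch. It is not taken from any particular robot: the `Module` class, the hop-count signal, and the role names "anchor" and "limb" are all assumptions invented for illustration. Each module sees only its direct neighbors and runs the same tiny program, yet a body-wide pattern emerges, and any module can serve as the starting point.

```python
from typing import List, Optional


class Module:
    """One of many identical modules; it can only see its direct neighbors."""

    def __init__(self) -> None:
        self.signal: Optional[int] = None  # hop count heard so far; None = nothing yet
        self.neighbors: List["Module"] = []

    def decide(self) -> Optional[int]:
        """Pre-loaded program: relay the smallest neighboring hop count plus one."""
        if self.signal is not None:
            return self.signal
        heard = [n.signal for n in self.neighbors if n.signal is not None]
        return min(heard) + 1 if heard else None

    def role(self) -> str:
        """Hypothetical role choice based only on the locally held signal."""
        if self.signal is None:
            return "idle"
        return "anchor" if self.signal == 0 else "limb"


def build_chain(count: int) -> List[Module]:
    """Mechanically connect `count` identical modules in a line."""
    chain = [Module() for _ in range(count)]
    for left, right in zip(chain, chain[1:]):
        left.neighbors.append(right)
        right.neighbors.append(left)
    return chain


def run(modules: List[Module], rounds: int) -> None:
    """Synchronous rounds: every module decides from the previous round's state."""
    for _ in range(rounds):
        decisions = [m.decide() for m in modules]
        for module, new_signal in zip(modules, decisions):
            module.signal = new_signal


def signals_after_seeding(count: int, seed_index: int) -> List[Optional[int]]:
    """Seed any one module with signal 0 and let the pattern spread."""
    chain = build_chain(count)
    chain[seed_index].signal = 0
    run(chain, rounds=count)
    return [m.signal for m in chain]


print(signals_after_seeding(5, 0))
print(signals_after_seeding(5, 2))
```

No module holds a map of the whole body, and the seed can be placed anywhere, which is the sense in which such a system is both distributed and exchangeable. The rules themselves, however, are still written by a human designer.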

The primary feature in these robots is what might be termed radical reconfigurability, i.e., no elementary component has a fixed location in the body or is specialized to a task; like Lego pieces, it can serve any role anywhere. However, this property depends on another, more general attribute: radical distributedness. A radically distributed system consists of identical and exchangeable modules with no permanent functional specialization: Any module can take on any role as needed.

This is exactly what radical reconfiguration requires, since no systemic function can depend permanently on any specific component at a particular position, and all such functions must emerge bottom-up from the interaction of autonomous components rather than as a result of top-down control by a centralized controller. As a consequence of these two properties, the system has no centralized brain; no fixed organs, limbs, etc.; and no defined tissues or cell types, etc. The robot is, at its core, a conceptual “blob”.

These self-reconfiguring robots can be seen as very early, very crude approximations to the T-1000 in Terminator 2 and Odo in DS9 – and their success might suggest that something like this is biologically or technologically feasible. But those fictional beings have a crucial feature lacking in today’s self-reconfiguring robots: Intelligence. The programs triggered in their modules, and the conditions of their triggering, are carefully crafted by human designers to ensure the emergence of interesting behaviors – perhaps in combination with off-line optimization and learning. And, though fairly sophisticated patterns of movement can emerge in these robots, tasks requiring any higher cognition or planning are not (yet) feasible without at least some centralized control. It is thus interesting to ask whether they can be feasible: Can engineering produce a smart blob like the T-1000? Can biology evolve shape-shifters or intelligent electromagnetic clouds?

Answering this question requires some careful parsing. At a superficial level, the possibilities and constraints of biology and (today’s) technology are quite distinct, but ultimately, both reduce to physics. To pose the question objectively, one must ask whether a completely self-reconfiguring system where no component is fixed in terms of position or function can be intelligent. This precludes, for example, the assumption of some fixed kernel – such as a core processor or memory – that does not participate in reconfiguration, the possibility of an external memory to and from which new configurations can be downloaded and uploaded, or the existence of an external controller managing the reconfiguration. Posed in this way, the question asks whether a physical artifact with the simultaneous attributes of radical self-reconfigurability and intelligence is feasible.

Why Ask the Question?

One obvious reason for posing the question about the feasibility of radically reconfigurable intelligent systems is that they would be utterly fascinating, quite useful, and extremely dangerous – as Arnold Schwarzenegger discovered in T-2. But the question is also interesting in a more mundane, engineering sense.

Over the last several decades, there has been a great deal of research on engineering highly-distributed complex systems such as swarms of robots or drones, smart structures, smart matter built from particles that can compute, and, of course, self-reconfiguring robots. Much of this research is inspired by the capabilities of natural complex systems such as insect colonies, bird flocks, brains, biological tissues, ecosystems, societies, etc. – all of which have the remarkable ability to produce large-scale structural and functional organization through bottom-up self-organization rather than top-down design. Not all of these systems are radically distributed or radically reconfigurable, but those attributes can be seen as a logical extrapolation of what nature has produced, and many of the engineered systems mentioned above do assume both properties. Indeed, they derive much of their appeal from these, since it is easy to see that distributed, bottom-up self-organization in systems with mutually exchangeable components leads to greater robustness, flexibility, and scalability compared to systems with top-down design, fixed component roles, and centralized control. For example, a group of robots controlled by a single leader or controller can be disabled by any malfunction in the controller (or disruption of communication with the controller), but a swarm of robots that interact peer-to-peer, making their own decisions following simple rules, can perform collective tasks and continue to function even if some members of the group are lost or if there are some communication breakdowns. Also, more robots can be added easily to the group without any need for redesigning or upgrading the controller. This is exactly the same intuition as that underlying the reconfigurable robot models discussed earlier.

Given all this, it is interesting that no known natural complex system with complex behavior – and certainly no organism with even minimal intelligence – is radically reconfigurable or radically distributed. It is important to focus here only on radical reconfiguration and radical distributedness. All biological organisms have some reconfigurability that allows them to adapt to physical incapacitation and heal from injury. More importantly, all complex organisms are distributed, but at multiple levels. They are networks of functional modules corresponding to various systems (e.g., the digestive system, endocrine system, nervous system, etc. in mammals). Each system is itself a network of multiple anatomical (and functional) modules, i.e., organs. The organs are made from self-organizing, distributed tissues and often have a modular network architecture of their own. For example, the brain consists of the cortex, the cerebellum, the brainstem, the hippocampus, etc., each a self-organizing, distributed network of smaller modules – classes of cells, canonical microcircuits of different cell types, etc. Moving deeper, each cell is also a modular network with a membrane, a nucleus, mitochondria, various channels, receptors, etc., each of which comprises a specific set of molecules (proteins, lipids, DNA, various RNAs, etc.). Thus, multiscale modularity with distributed, network organization at each scale is a universal property of complex Life. Flat, radical distributedness is not – and it is interesting to ask why.

Preliminaries

To begin answering the question of whether a smart blob is possible, we can turn to an exceptionally humble but exceptionally interesting organism – in fact, a super-organism: The slime mold. This fungus-like creature, occasionally encountered on rotting trees and in flower beds, is actually a collection of individual amoeboid organisms, but for our purposes, it can be seen as a single organism comprising a large number of identical single-celled micro-organisms. Different groups of these micro-organisms can, in fact, specialize to perform various functions such as anchoring the slime mold to its support or propagating spores into the air. But the key point is that, in principle, any micro-organism in the slime mold can specialize to any function or remain unspecialized. The specialization is not fixed. If the slime mold is split into two, each piece can be its own slime mold with all the necessary functions. The slime mold is a radically distributed and reconfigurable organism.

Compare this with, say, a complex animal such as a fish or a human, which also is a collection of cells, but whose cells have specialized permanently to specific functions, taken up specific positions in the body, and become part of specific organs. This is exactly what creates a module such as the nervous system or the heart. And if such an animal is split into two, it cannot survive because some critical organs will be missing from each part. The fixed modularity of a complex animal, it seems, comes at a high price in terms of survivability – creating a life-and-death imperative to stay intact. Would it not have been better if we humans could, like slime molds, have every cell in our body potentially available for any function, so that losing a few billion cells would not cause death, but just result in a mini-me? And, in fact, there are such animals – most famously the hydra, which can be cut in half so that each half becomes a living hydra, albeit by regeneration rather than just reconfiguration. This is also true of many plants that can be propagated by cuttings. But, alas, it is not true of humans or fish.

Now, evolution is a pitiless tyrant when it comes to letting bad choices persist. The fact that the high degree of fixed specialization – in spite of the risks it brings – has persisted universally in complex organisms for hundreds of millions of years means that it must have some extraordinary benefit. This question can be considered at the level of all functional aspects of organisms, but the discussion here will focus only on the nervous system and the function of intelligence.

Defining Intelligence

It might be said (and probably has been said) that trying to define intelligence is a fool’s errand, but it is useful to have at least a provisional definition that establishes the functional role of intelligence. A minimal and very broad definition that will suffice for the present discussion is the following:

Intelligence is the ability of an autonomous organism to map its sensory inputs to a large repertoire of useful complex behaviors in complex, dynamic environments.

This definition of intelligence is fundamentally embodied: It is rooted in behavior, not abstract thought. Only an agent capable of behaving can demonstrate intelligence, and intelligence that cannot be demonstrated is practically non-existent.

There are several terms in this definition that are themselves in need of definition. First, the stipulation of autonomy: The capacity to make individual decisions without external control. Since intelligence is demonstrated ultimately in “good” choices, the ability to make decisions – autonomy – is a prerequisite. Without getting tangled up in questions of free will, the commonly agreed notion of autonomy limits the definition to complex animals – and excludes bacteria, fungi, plants, and creatures from amoebae to anemones that most people would agree are outside the ambit of intelligence. (A discussion about decision-making in plants or bacteria would be interesting, but will be skipped here.)

The next two terms that require clarification are useful behaviors and complex behaviors. The utility of behaviors, at the most fundamental level, must be defined in evolutionary terms: A behavior that enhances the ability to survive and reproduce is useful. But as organisms have become more complex, utility has ramified. In humans, for example, one might consider the ability to write poetry or prove theorems to be somewhat useful as well. Utility can also have a collective aspect such as improving group fitness, or cumulative value where individually low-utility behaviors – such as narrowly-focused scientific research – collectively provide much greater utility. But utility alone is not sufficient to define intelligence, since all organisms – intelligent or not – evolve to have useful behaviors. Intelligent behavior requires the further attribute of complexity. Behavioral complexity too is something that is easier to understand than to define, but here it is construed broadly to include behaviors that have the following attributes: Non-trivial integration of information from multiple sources; specific combinations or sequences of diverse micro-actions; and the generation of organized – though possibly non-material – artifacts or effects. The autonomic regulation of physiological functions – no matter how complex – is excluded. Thus, the spinning of a web by a spider is a complex behavior, as is a bird finding food to feed its young, or a person playing a sonata on the piano. A slime mold reconfiguring itself might be seen as a complex behavior too, but it is not part of a large repertoire and is more akin to autonomic regulation. Effectively, the line for intelligence is drawn provisionally somewhere between worms (not intelligent) and molluscs (intelligent).

The last component of the definition – a complex, dynamic environment – is much easier to explain. The simplest example is the real world, which is extremely complex and ever-changing, but one can also conceive of other worlds, such as cyberspace, that would meet the criterion. The main point is that the complexity of the environment imposes a need for complex information processing and long-term learning from experience – and hence the need for intelligence.

Bringing all this together, the proposed definition of intelligence implies that it is the attribute that enables productive behavioral complexity in autonomous agents operating in complex environments.

The Argument

The main assertion made in this article is that a radically distributed, radically reconfigurable organism cannot meet the requirements for intelligence because intelligence requires a stable, complex organization of information flows that can only be supported by a highly-optimized, stable, differentiated structure.

The definition of intelligence on which this discussion is based implies that the organism receives rich streams of information from a variety of sources and makes choices from a large number of possibilities in real-time. At an abstract level, this can be seen as defining a set of very complex algorithms for information processing and decision-making – and for learning from experience to improve the quality of behavior. For successful operation, these algorithms must ensure that the right information streams are processed appropriately, and the results arrive at the right muscles to produce useful behavior. This structuring of the information flow – both from input to output and also any feedback generated at intermediate levels – is crucial to performance: The information coming in through the eyes must eventually guide the path of a hand reaching for a glass of water on the table. Along the way, it must be processed in a variety of ways, separated (e.g., into information about color, texture, intensity, movement), merged with other information (e.g., information from hearing, touch, and orientation), fed back, compared with memories of past experience, reconciled, and transformed. These operations require very specific, distinct mechanisms, and thus, very different processing structures – which is exactly what one sees in different parts of the nervous system.

In a system controlled by a digital processor – such as a robot – the algorithms for all sorts of behaviors can be programmed in principle (though often not in practice) on a single, general-purpose processor, and modified, upgraded, or replaced as needed to get the appropriate behavior. However, this is not a particularly useful way to look at real organisms. An organism is a physical system that computes through its physics in real-time – a very complex and adaptive analog computer, so to speak, not a digital computer with a clear separation between program, data, and processor, where different programs can be loaded and run instantaneously at will. The organism embodies its programs, and processes information implicitly as a consequence of its structure. Thus, to achieve the input to output mapping required for intelligent behavior, the organism must structure its physics – its anatomy and physiological processes – such that information is processed appropriately by default as it flows through the system. There are no if-then statements, no for-loops, no temporary variables, no offline storage, and no reboots in the organism. The information flows continuously.

All this requires an astounding amount of complex structural organization from the system level all the way down to the molecular level. The right sensors have to connect with the right neurons (nervous system cells), which, in turn, must connect with the right muscles. The fruit fly has about 100,000 neurons while the human brain has about 86 billion. Organizing systems of this complexity to achieve the right information flows and the many different types of processing needed at various stages is truly an immense task.

So where does this extremely specific and intricate organization come from, and at what cost? It is the result of several levels of engineering at very different time-scales. First, evolution configures the overall structure of an organism at all levels and encodes it in the organism’s DNA. This precise arrangement is the result of hundreds of millions of years of competition and natural selection. Then this coded blueprint is instantiated in each individual organism by the process of development, which “reads out” the instructions of the genetic code in the context of the growing organism and its environment to take it from the initial fertilized egg to a complete body that matures over stages to adulthood. Then there is further fine-tuning of this structure throughout the lifetime of the organism by the process of learning – modifying the behavior of neurons, strengthening and weakening muscles, and changing the connectivity between all of these in response to internal and external signals. And finally, there is rapid real-time adaptation, such as the change in pupil size in response to ambient light or the temporary fatiguing of muscles after exertion. The physical structuring produced by all these processes is what enables the organism to produce useful complex behavior – and thus intelligence.

The crucial lesson to draw from all this is that the organism becomes intelligent by instantiating a particular, very specific, very intricate structure that is both differentiated and persistent. The differentiation, i.e., specialization of different parts to specific structures and functions, is necessary because the processing needed is of many types. Persistence is necessary for (at least) two reasons. First – superficially – the detailed organization needed for intelligent behavior is too complex to be assembled and reassembled repeatedly: It is the work of eons, not seconds. But more deeply, persistence of structure is necessary because, for an organism, its structure is its function. A persistent structure allows the organism to retain a stable functional relationship with the world throughout its lifetime, building upon what it learns at each stage of development to become ever better-adapted by exploiting the highly effective structure it is endowed with through evolution. Absent this structural persistence, no memory or learning would be possible – unless the organism has access to some other stable information storage, which would violate radical reconfigurability. Ultimately, every organism can be seen as embodying a memory in the physical matrix of the world – a persistent local organization of matter that retains its structure over an extended duration and, as a consequence, behaves in useful ways.

To make this concrete in human terms, consider the relationship between the eye and the hands. The retina of the eye consists of fixed visual receptor cells – rods and cones – set at fixed positions. When the eye looks at a scene, each receptor picks up light from a very small region in the visual field. Two receptors next to each other get input from adjacent positions in the scene corresponding exactly to their relative position on the retina. Thus, as a whole, the retina captures an (inverted) image of the scene exactly as a film did in old cameras or a sensor array does in today’s digital cameras. This type of representation, called retinotopic because it is found in the retina, maintains the topological relationships in the view being seen: It keeps track of what is to the left or right, above or below, next to or far from what other things. This information is crucial for the neurons activating the muscles that control the hands to reach for objects in the visual field. Through initial configuration and subsequent learning, a stable relationship develops between the appropriate groups of retinal receptors and the neurons controlling the hand (in fact, through a very complex set of intermediate systems). Now imagine if neither the cells of the retina nor the neurons of the hand controller were fixed: The retinal receptors could swap positions with each other every day and the neurons that control the right hand today might control the left foot tomorrow. The stable relationships needed for learning hand-eye coordination could never develop in such a system, and the most basic requirement for intelligent behavior would not be met.
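
The thought experiment above can be turned into a toy simulation. This is a deliberately crude sketch, not a model of real vision: the function `reaching_accuracy`, its lookup-table "learning", and the numbers of receptors and days are all assumptions chosen for illustration. A learner associates each receptor with the position the hand should reach toward; the only difference between the two runs is whether the receptors stay put or swap positions overnight.

```python
import random
from typing import Dict, List


def reaching_accuracy(receptors: int, days: int, reshuffle: bool, seed: int = 0) -> float:
    """Fraction of correct reaches after day 0, learning receptor -> position."""
    if days < 2:
        raise ValueError("need at least two days: one to learn, one to test")
    rng = random.Random(seed)
    # wiring[position] is the receptor currently sitting at that retinal position
    wiring: List[int] = list(range(receptors))
    learned: Dict[int, int] = {}  # receptor id -> position the hand reaches toward
    correct = 0
    trials = 0
    for day in range(days):
        if reshuffle:
            rng.shuffle(wiring)  # receptors swap positions overnight
        for position, receptor in enumerate(wiring):
            if day > 0:  # day 0 is pure learning, nothing to test yet
                trials += 1
                correct += learned.get(receptor) == position
            learned[receptor] = position  # feedback corrects the association
    return correct / trials


print("fixed retina:     ", reaching_accuracy(50, 20, reshuffle=False))
print("reshuffled retina:", reaching_accuracy(50, 20, reshuffle=True))
```

With a fixed retina, yesterday's associations remain valid today, so every reach after the first day succeeds. With daily reshuffling, what was learned is almost always obsolete by the time it is used, and accuracy stays near chance.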

Indeed, the consequences of disturbing structural stability are seen when someone has a stroke or loses a limb. The resulting “reconfiguration” of the body – which is nowhere near as disruptive as radical reconfiguration – creates problems that can take years to recover from even partially. That is not an incidental limitation of the body; it is a fundamental one imposed by the complexity of the problem.

The Role of Multiscale Modularity

To complete the argument of why radical distributedness is not compatible with intelligence, it is also necessary to consider why organisms have the structure that is actually observed, defined fundamentally by modularity.

Modularity – the use of well-defined structural and functional blocks – plays a central role in all non-trivial complex systems. As pointed out earlier, biological organisms are organized into networks of modules at many levels, ranging from molecules within cells all the way to the organism as a whole. Indeed, one can even extend this hierarchy to larger scales, from organisms to populations, species, ecosystems, and the entire biosphere, i.e., the super-ecosystem of all life on Earth in interaction with the physical planet. This style of organization can be termed multiscale networked modularity, and one might ask why it is so universally present in complex systems. This is, in fact, one of the deepest issues in the study of complex systems, and a detailed discussion is best left to another time. Here, it suffices to note that recursive modularization is an extremely efficient, general, and productive method for building systems of potentially unlimited complexity – and thus of greater intelligence.

Recursive modularization is the process of repeatedly designating identifiable structural and/or functional units that are then treated as building blocks for more complex structures. For example, a building is, at its basic level, an assembly of simple modules such as pillars, beams, bricks, etc. These can be combined to define larger modules such as walls, facades, doorways, ceilings, and floors. These, in turn, can be combined into modules such as rooms, foyers, stairwells, and hallways. The building can then be seen as an arrangement of these high-level modules. This illustrates three levels of recursive modularization, where the modules from each level are subsumed into modules at the next higher level. A key thing that enables an architect to imagine and design a very complex building is the ability to first do so in terms of high-level modules such as rooms and hallways rather than having to think at the level of bricks and beams – though that is how the building will eventually be specified and built. All engineering above the level of the simplest components is done implicitly in a recursively modularized framework. Designers of aircraft and electronic chips do not work at the level of molecules, but with a hierarchy of interacting sub-systems and components.
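
The building example maps naturally onto a recursive data structure. The sketch below is only an illustration: the `Part` class and all the counts (200 bricks per wall, 10 rooms, and so on) are made-up assumptions, not figures from any real design. It shows how a designer can work with a handful of high-level modules while the full specification in primitive components remains recoverable.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Part:
    """A module that is either primitive or assembled from smaller modules."""
    name: str
    parts: List["Part"] = field(default_factory=list)

    def primitive_count(self) -> int:
        """Total number of primitive components inside this module."""
        if not self.parts:
            return 1
        return sum(p.primitive_count() for p in self.parts)

    def depth(self) -> int:
        """Number of modular levels below this module (0 for a primitive)."""
        if not self.parts:
            return 0
        return 1 + max(p.depth() for p in self.parts)


# Level 0: primitives (hypothetical choices)
brick = Part("brick")
beam = Part("beam")

# Level 1: modules built from primitives
wall = Part("wall", [brick] * 200 + [beam] * 4)
floor = Part("floor", [beam] * 20)

# Level 2: modules built from level-1 modules
room = Part("room", [wall] * 4 + [floor])

# Level 3: the whole building as an arrangement of rooms
building = Part("building", [room] * 10)

print("modules the architect arranges:", len(building.parts))
print("primitive components implied:  ", building.primitive_count())
print("levels of modularization:      ", building.depth())
```

With these made-up counts the architect arranges 10 rooms rather than 8,360 individual bricks and beams, and the structure is three levels deep, matching the three levels described above.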

Nature too follows the same strategy. In fact, for an “engineer” that builds bottom-up through self-organization rather than through top-down design, this is the only feasible pathway to success. In building highly structured and differentiated systems, evolution has been an inveterate recursive modularizer, creating ever more complex bodies and processes over billions of years by combining modules at lower levels. As a result, organisms of increasing complexity have ended up with ever deeper levels of modularity rather than the flat, homogeneous, non-modular structure implied by radical distributedness.

The Centrality of Embodiment

Though it has not explicitly been cast as such, the argument presented above is fundamentally about the central role of embodiment in the emergence of the mind, and therefore, of intelligence. Embodiment – first developed systematically by the French philosopher Maurice Merleau-Ponty, but traceable to earlier philosophers – is the idea that the body in its specific form is central to an organism’s experience of the world in perception, cognition, and behavior. This idea has been developed much further in psychology and cognitive science into detailed theories about the basis of perception and cognition, but a reasonable definition is given by Körner et al., who define embodiment as:

“… an effect where the body, its sensorimotor state, its morphology, or its mental representation play an instrumental role in information processing.”

This view privileges the body and its physics as the basis of all mental phenomena, and information processing as physical dynamics rather than computation where program and processor can be distinguished.

However, in most discussions of embodiment, there is a tendency to focus on the macro-level – the overall form of the body, the arrangements of muscles and joints, the positions and modalities of sensors, etc. But the mind – like all the processes of the organism – depends on all the levels of embodiment in the system – from the whole body down to the intricate molecular networks within individual cells. The divide between embodiment and physiology that is seen in many arguments over the nature of cognition is both artificial and arbitrary. The structure of a neural network in a particular part of the brain – indeed, even the structure of receptors, channels, and membrane features within an individual neuron – is part of the organism’s embodiment, with a role in determining its perceptual, cognitive, and behavioral processes. This embodiment is not just the form of the organism, it is also its memory – the generator, basis, and repository of its experience of the world. Unless one has a dualist view of mind and body, even the deepest aspects of the mind such as will and the self are defined by this embodiment, though we may not yet understand how. Changing this embodiment radically – even at, say, the cellular level – destroys the very basis of the organism as itself. It also precludes any real learning or memory beyond the most primitive level, since memory, by definition, requires some stability at some level. The slime mold – for all its robust efficiency – can never learn anything complex from experience (though it does learn simple things), build memories, have awareness, or develop a personality over a lifetime. A mind requires a deeper embodiment than radical distributedness can supply.

Some might object that all things – even computers – function only because of their physical form in the context of the world. True, but for computers, technology has developed the means to represent processes as disembodied information – programs and data – that can be mapped onto the embodiment of silicon circuits. Not so – yet – for living organisms. But even if that ever becomes possible – as some proponents of the Singularity expect – it will require a stable physical substrate, much as a computer requires a fixed electronic substrate to run its infinitely reconfigurable programs.

Of all the special features of the nervous system, the most special is its global structural stability. Cells in most other body tissues such as the skin communicate with each other, but only within a limited group in their neighborhood. Or – as with immune system cells in the bloodstream and lymph – they communicate with a lot of other cells, but non-specifically and randomly in passing: No two individual cells develop any stable connection. Nervous system tissue is the only tissue in the body whose cells can make specific, long-lasting and adaptive cell-to-cell connections over long distances – millimeters to several feet in humans. It is also the most diverse tissue, with the most distinct cell types. These are precisely the attributes that make the nervous system capable of supporting intelligence in a physical body. Take away either stability or diversity, and all you have is a slime mold.

Virtual Reconfiguration

Having argued that radical reconfiguration is incompatible with intelligence, let us end by briefly considering reconfiguration in another sense that is, in fact, enabled by intelligence: The control of a complex physical body.

Consider a sea anemone. It is embodied with a stable configuration and significant cellular differentiation, but its behavioral repertoire is limited. In technical language, one might say that it has few degrees of freedom – different ways it can move parts of its body. Its embodiment is conceptually quite shallow: It is largely stuck with the shape it has, and, as a result, can only do a few simple things. Contrast that with an eagle – an organism with a much more complex embodiment and many degrees of freedom. It can stretch its wings to float on air currents, or it can fold its wings and drop like a rock to catch its prey. It can also do all sorts of other complicated things in the air and on the ground, such as landing on a branch, walking around, tearing the flesh of its prey, building a nest, feeding its young, and flying away. The way it does all that is by temporarily “reconfiguring” its body without disturbing its essential embodiment. And it can do this not only because it has more muscles and joints than the sea anemone, but also because it has a more complex nervous system. The degrees of freedom in the body can only be exploited usefully when matched by an equivalent complexity in the embodiment of the brain. And while the embodiment of joints and muscles is mostly fixed, the embodiment of the eagle’s brain is much more flexible since neurons can make, break and modify the connections between them based on experience. This allows the eagle, over time, to gradually alter its overall embodiment and become a better flyer and hunter. In a sense, it learns and fine-tunes embodied “programs” for controlled, temporary reconfigurations of its body – such as folding its wings – to achieve specific behaviors. But it can only do this because the basis of its overall embodiment is secure and stable.

Another interesting case is the complex camouflage demonstrated by octopuses and cuttlefish, which map complex chromatic configurations onto their bodies. While this may appear to be a more radical reconfiguration than an eagle stretching or folding its wings, it is no different in principle. The underlying substrate of the body remains stable – only the pigments expressed by individual cells change, just as the contraction states of individual muscles do for the eagle. Both the eagle and the cephalopod have nervous systems that can remember these chromatic or muscular patterns of activation and recreate them as needed through their deep embodiment.

The Future

So what is the future of radical reconfiguration? Will technology eventually enable us to achieve full reconfiguration – or almost full reconfiguration? This question can only be answered speculatively. Since technological artifacts are the result of an explicit design process by humans, they are not subject to all the same constraints as organisms produced by evolution, but the fundamental argument made in this article remains valid: A system without differentiated structural stability cannot be intelligent in the real world because it cannot maintain the complex embodiment necessary for intelligence. In the near term, reconfigurable robots will keep getting more sophisticated through the use of smart materials, machine learning methods, and artificial evolutionary algorithms without becoming autonomously intelligent. However, something close to radical reconfigurability could, in principle, be achieved by a system that has a small, extremely computationally powerful core that can learn stable memories and map them to a radically distributed body.

An even more speculative question to consider is whether stable, extremely complex memories can be preserved even in a radically reconfigurable system if its modules are computationally powerful enough. In other words, can such a system learn and deploy an almost limitless number of complex embodiments through brute computational power – replacing, in a sense, the tyranny of physics by the freedom of computation? It is hard to imagine such a technology, but predicting the future of technology has always been a risky business. The Terminator has already been imagined, and humans have an excellent record of turning imagination into reality.

Be very afraid!