by Jochen Szangolies

In the beginning there was nothing, which exploded.

At least, that’s how the current state of knowledge is summarized by the great Terry Pratchett in Lords and Ladies. As far as cosmogony goes, it certainly has the virtue of succinctness. It also poses—by virtue of summarily ignoring—what William James called the ‘darkest question’ in all philosophy: the question of being, of how it is that there should be anything at all—rather than nothing.

Different cultures, at different times, have found different ways to deal with this question. Broadly speaking, there are mythological, philosophical, and scientific attempts at dealing with the puzzling fact that the world, against all odds, just is, right there. Gods have been invoked, wresting the world from sheer nothingness by force of will; necessary beings, whose nonexistence would be a contradiction, have been posited; the quantum vacuum, uncertainly fluctuating around a mean value of nothing, has been appealed to.

A recurring motif, echoing throughout mythologies separated by centuries and continents, is that of the split: that whatever progenitor of the cosmos there might have been—chaos, the void, some primordial entity—was, in whatever way, split apart to give birth to the world. In the Enūma Eliš, the Babylonian creation myth, Tiamat and Apsu existed ‘co-mingled together’, in an ‘unnamed’ state, and Marduk eventually divides Tiamat’s body, creating heaven and earth. In the Daoist tradition, the Dao first exists, featureless yet complete, before giving birth to unity, then duality, and ultimately, ‘the myriad creatures’. And of course, according to Christian belief, the world starts out void and without form (tohu wa-bohu), before God divides light from darkness.

In such myths, the creation of the world is a process of differentiation—an initial formless unity is rendered into distinct parts. This can be thought of in informational terms: information, famously, is ‘any difference that makes a difference’—thus, if creation is an act of differentiation, it is an act of bringing information into being.

In the first entry to this series, I described human thought as governed by two distinct processes: the fast, automatic, frequent, emotional, stereotypic, unconscious, neural network-like System 1, exemplified in the polymorphous octopus, and the slow, effortful, infrequent, logical, calculating, conscious, step-by-step System 2, as portrayed by the hard-shelled lobster with its grasping claws.

Conceiving of human thought in this way is, at first blush, an affront: it suggests that our highly prized reason is, ultimately, not the sole sovereign of the mental realm, but that it shares its dominion with an altogether darker figure, an obscure éminence grise who, we might suspect, rules from behind the scenes. But it holds great explanatory power, and in the present installment, we will see how it may shed light on James’ darkest question, by dividing nothing into something—and something else.

But first, we need a better understanding of System 2, its origin, and its characteristics.

GOFAI, The Sphex Wasp, and the Frame Problem

Achieving human-level intelligence, AI theorist Ben Goertzel proposes, requires ‘bridging the gap between symbolic and subsymbolic representations’—combining, in other words, a neural network-style System 1 with a System 2 based on explicit, symbolic manipulation of information. But how could something like this work?

Early approaches to AI essentially attempted to codify vast domains of knowledge using explicit sets of rules. This culminated in the creation of so-called ‘expert systems’: essentially, vast decision trees evaluating data on the basis of fixed criteria—like a thermostat which adjusts the temperature based on predefined reference values, but much more complex.

One might then propose that a candidate for System 2 could be built on this principle: that the path to true AI might lie in combining present-day approaches with ‘good, old-fashioned AI’ (GOFAI). But this runs into the so-called frame problem: the world is inexhaustible, and no matter what set of rules you choose, you will be confronted with circumstances outside of their purview.

Consider, to illustrate, the Sphex wasp. Sphex is a burrowing wasp that has acquired a bit of notoriety in philosophical circles, most notably due to the writings of Daniel Dennett. It has a peculiar cycle of behavior: after a successful hunt, it will drop its prey near its nest, go inside to inspect the nest, and only then drag its—still alive, but paralyzed—prey in. But suppose a human experimenter moves the prey while the wasp is busy inspecting the nest. Upon reemerging, it will then proceed to drag the prey back towards the nest, drop it off, and begin its inspection anew—as if the breaking of the intended sequence of actions causes some simple program within the wasp to reset and restart. The cycle can be repeated: the wasp never gets wise to the experimenter’s interference.
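The ‘simple program’ reading of the wasp’s behavior can be made concrete with a toy simulation. The sketch below is purely illustrative: the function name `sphex_routine`, the step descriptions, and the `interferences` parameter are inventions for this example, not a model of actual wasp neurology.

```python
def sphex_routine(interferences: int) -> list[str]:
    """Run the wasp's fixed routine while a (hypothetical) experimenter
    moves the prey `interferences` times. Returns the log of actions."""
    log = []
    remaining = interferences
    while True:
        log.append("drag prey to nest entrance")
        log.append("inspect nest")
        if remaining > 0:
            # The experimenter moves the prey while the wasp is inside.
            remaining -= 1
            log.append("prey not where expected: restart")
            continue  # the routine keeps no memory of earlier inspections
        log.append("pull prey into nest")
        return log


if __name__ == "__main__":
    for step in sphex_routine(interferences=2):
        print(step)
```

However often the prey is moved, the routine simply starts over: nothing in the rule set lets the wasp notice that it has already inspected the nest.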

This shows the problem with relying on fixed rules: faced with unforeseen circumstances, they cannot adapt. A System 2 built on explicitly hard-coded rules then would lead to a stunted being, incapable of engaging with novelty, forever stuck in the same rut. And while, yes, I also know some people like that, I think it’s fair to say that humans at least have the potential for genuine novelty, for breaking out of a rut, for creativity, play, and experimentation. Indeed, we do not need to move up the phylogenetic tree very far to encounter behavior that isn’t sphexish, as Douglas Hofstadter calls it.

An organism hoping to engage with the world in all its richness and variety then cannot rely on some prefabricated set of rules. It must, instead, be capable of forming adaptable, flexible, interactive and meaningful models of the world. In some way, the world must be present to it—must be re-presented within its own mental space. We might think—although this way of thinking is dangerous, for reasons that will soon become clear—of each organism as hosting some tiny copy of its surroundings within itself, to use, like a map, for planning, strategizing, or just to find its way around.

Having access to the world directly, such an organism could then react to changes in a flexible and adaptable way, rather than having to rely on a fixed set of rules to guide its action. But this runs into new difficulties.

The Dolphin and the Things Themselves

Dolphins are fascinating creatures: erstwhile émigrés to land, they, alongside the other cetaceans, ultimately chose to return to the oceans—which has the somewhat curious consequence that their closest land-dwelling cousins today are the hippopotamuses (not hippopotami). Their encephalization quotient—a measure of the ratio of observed to expected brain size for an animal of similar mass, sort of an ‘excess braininess’—is second only to that of humans, and as such, they are exceptionally intelligent beings. Indeed, ongoing programs pursue the goal of enabling true communication between humans and dolphins.

However, dolphin language may work differently from human language in one crucial way, owing to the different sensorium of the dolphin. They are capable of surveying their surroundings using echolocation—sonar. Thus, they send out sequences of sounds and clicks, and are capable of using the returning echo to ‘see’ the world around them. This alone must mean that their mind differs from ours in crucial ways—indeed, famously, Thomas Nagel has argued that we can never precisely know ‘what it is like’ to be a creature experiencing the world so differently from us (although his example was that of a bat).

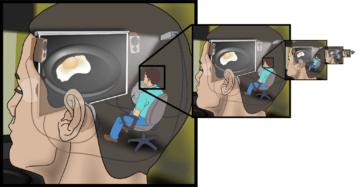

But moreover, it is sometimes proposed that dolphins are not only capable of interpreting the echoes they receive, they are also able to mimic specific echoes: if I were a dolphin, and wanted to describe my new car to you, I could just recreate its sonar shadow, and you would ‘see’ the car just as if you had echolocated it yourself. It’s as if humans were equipped with viewscreens embedded in their foreheads, upon which we could show one another what we’ve seen previously—or indeed, imagined out of whole cloth.

Human communication, by contrast, occurs always one step further removed from the things themselves—occurs by symbols, such as the weird little squiggles your eyes are passing over right now which, by some wizardry, serve to (hopefully!) convey ideas to you. There is an intermediate step of interpretation—usually, occurring without conscious effort—which the dolphin could dispense with. You need to interpret the symbol ‘dog’ as referring to a certain sort of four-legged, furry animal, for us to communicate about dogs, whereas a dolphin could just show another what one sounds like.

System 2, in humans, then is neither sphexish nor dolphinesque: we are not limited to some fixed set of rules, but neither do we have access to the unvarnished world, so to speak. Rather, we use the somewhat mysterious relationship between symbols and the things they represent to ‘internalize’ the world.

The World Represented

Lacking immediate access to the world, the success with which we navigate it indicates that nevertheless, something within us must closely track its structure. Even if we can’t know the terrain directly, we can still find our way around, provided we have a good map—or, more broadly, a model. Thus, in some sense our symbolic System 2 must provide us with a representation of the world outside. The question of how, exactly, this works is closely related to the puzzle of intentionality—the other problem of the mind, besides what’s simply known as the ‘hard problem’.

Luckily, we won’t have to solve this problem here—it’s enough, for our purposes, to study the simpler issue of modeling. A theory of modeling was formulated by Robert Rosen, the ‘Newton of biology’, in his seminal study Life Itself. Before discussing it, we need to take a step back, and appreciate a certain self-referential oddity that will soon become important: as a theory is itself a model, a theory of modeling is a model of modeling—that is, it stands to its object in the same relation it describes between model and the system modeled (the object).

Rosen’s theory is best introduced by example. Consider the set of books on your shelf, ordered by thickness: there is a relation that obtains between any two members of this set, according to which one is thicker. That is, the set has a certain structure (mathematically speaking, a well-ordering). Then, consider the set of your maternal ancestors: there is, likewise, a relation between any pair, which we might term ‘seniority’. You can then pair members of one set up with members of the other, such that whenever one book is thicker than another, the corresponding ancestor is the more senior one.

By this identification, the set of books on your shelf becomes a model of your maternal ancestry: the thinnest volume is mapped to your mother, the next thinnest one to your grandmother, and so on. Using this model, you can immediately settle questions of seniority—to tell whether Ethel is an ancestor of Mildred, it suffices to compare the thickness of the corresponding volumes.
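The pairing can be sketched in a few lines of Python. All the data here is made up for illustration (the book titles, the thicknesses in millimetres, and the ancestors beyond Ethel and Mildred); the sketch relies only on both sets being ordered and on the pairing respecting that order.

```python
# Hypothetical data: book thickness in millimetres.
books = {"Candide": 12, "Dubliners": 21, "Middlemarch": 48, "Moby Dick": 55}

# Hypothetical maternal line, listed from most recent (mother) to most senior.
maternal_line = ["Mildred", "Ethel", "Agnes", "Winifred"]

# Pair the thinnest book with the mother, the next thinnest with the
# grandmother, and so on, so that 'thicker' always corresponds to 'more senior'.
books_by_thickness = sorted(books, key=books.get)
model = dict(zip(maternal_line, books_by_thickness))


def is_ancestor_of(senior: str, junior: str) -> bool:
    """Answer a question about the lineage by looking only at the books."""
    return books[model[senior]] > books[model[junior]]


print(is_ancestor_of("Ethel", "Mildred"))  # True: Ethel's book is thicker
print(is_ancestor_of("Mildred", "Agnes"))  # False: Mildred's book is thinner
```

Note that `is_ancestor_of` never consults the family tree itself; once the pairing is fixed, comparing thicknesses is enough.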

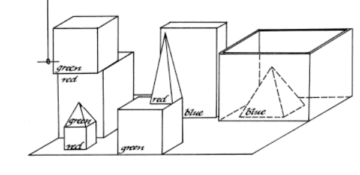

This is how models, in general, may be used to derive conclusions about the objects they model—by, roughly, an equivalence of structure. An orrery models the solar system, because the structure of the little metal beads, and their relative movement, models that of the planets around the sun.

But the account tells us nothing about how, ultimately, any given book comes to be identified with a certain maternal ancestor. After all, we could’ve played the same game using the set of paternal ancestors, instead: there is nothing inherent to either set of objects that connects them. It is a question of interpretation.

We might think of this in terms of a code. That ‘un chien’ means ‘a dog’ is due to the existence of a map taking French terms, and returning English ones. Such a map can be represented mathematically—most simply, in the form of a large lookup table. So we might propose that the interpretation that maps, say, ‘Moby Dick’ to your great-great-…-grandmother is instantiated in the mind by means of such a table.

But this runs into immediate problems. You understand ‘un chien’ by translating it as ‘a dog’, but how do you understand ‘a dog’? If you translate it into some appropriate mental symbols, then how are those, in turn, understood? We seem to be met with a cascading chain of higher levels of understanding, each necessary to ground the level below. This is the devious homunculus problem: we have essentially appealed to modeling in order to understand the notion of modeling. We have introduced a ‘little man’ who does the work of connecting symbols with their meanings, which explains our thought process—but only by virtue of leaving the homunculus’ own understanding unanalyzed.
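A toy version of the lookup-table proposal shows where the regress bites. In the sketch below, the tables and the ‘mental symbol’ names such as `MENTALESE_DOG` are entirely hypothetical; the point is only that each lookup returns another symbol, never a meaning.

```python
# Each table maps symbols of one 'language' to symbols of the next.
# All entries are hypothetical placeholders.
french_to_english = {"un chien": "a dog"}
english_to_mental = {"a dog": "MENTALESE_DOG"}
mental_to_deeper = {"MENTALESE_DOG": "SYMBOL_4711"}

tables = [french_to_english, english_to_mental, mental_to_deeper]


def interpret(symbol: str) -> list[str]:
    """Follow the chain of lookup tables, recording every intermediate symbol."""
    chain = [symbol]
    for table in tables:
        symbol = table[symbol]
        chain.append(symbol)
    return chain


chain = interpret("un chien")
print(" -> ".join(chain))
# Every element of the chain is still just a string awaiting interpretation.
print(all(isinstance(s, str) for s in chain))
```

Adding further tables only lengthens the chain; at no step does the procedure hand back anything other than one more symbol.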

It seems that there is a necessary lacuna here in the capacity of modeling: we can model everything—except modeling itself. Once we try, we find only a hall of mirrors. Thus, System 2 has an unavoidable blind spot: it can never explain itself to itself (this argument can be made more stringently). And, strange though it may seem, the whole world as we know it arises from this inability.

Nothing, Cleft In Two

Borges’ Library of Babel contains every possible book written using an alphabet of 25 characters. Yet, strangely, it contains almost no information: the entire library could be reconstructed using just the previous description, and hence, that is all the information it contains. Moreover, removing any particular volume from the library serves to increase its information content—by precisely the amount of information needed to uniquely describe that volume.

Information has the curious property that a set of objects can contain less of it than any particular member. Information arises from distinctions, and in the limit, the set of everything contains no information at all—as there are no distinctions to be made. ‘Everything’ then has the same information content as ‘nothing’—and thus, something may come from nothing by a process of removal—of splitting off parts.
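The counting argument can be checked on a toy library. The sketch below assumes a drastically shrunken library (a 3-letter alphabet and 4-character ‘books’, both chosen only to keep the enumeration small), and it uses the number of bits needed to single out one volume as a rough stand-in for that volume’s information content. The function names are invented for this example.

```python
from itertools import product
from math import log2

ALPHABET = "abc"   # toy stand-in for the 25 characters
BOOK_LENGTH = 4    # toy stand-in for the length of a volume


def full_library() -> set[str]:
    """Every possible book: generated from two parameters, no further input."""
    return {"".join(chars) for chars in product(ALPHABET, repeat=BOOK_LENGTH)}


def bits_to_pick_one_volume() -> float:
    """Bits needed to say which single book is meant."""
    return BOOK_LENGTH * log2(len(ALPHABET))


def library_without(volume: str) -> set[str]:
    """Describing this library requires naming the missing volume."""
    return full_library() - {volume}


library = full_library()
print(len(library))                   # 3**4 books in total
print(bits_to_pick_one_volume())      # cost of specifying any one of them
print(len(library_without("abca")))   # one book fewer, yet a longer description
```

The complete library needs only the alphabet and the book length to be regenerated, whereas `library_without` additionally needs its argument, which is exactly the extra information introduced by the removal.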

This gets us right back to the beginning (literally). As we’ve seen above, every System 2-model must omit at least one element—modeling itself. But every omission, every distinction creates information. What was formless and void becomes differentiated, obtains a particular character—it becomes something capable of being talked about, being described, being referred to—it becomes something, in other words.

‘The world’, as an undifferentiated whole, by contrast, is void of any information—it does not have any particular qualities in itself. However, any model of the world, due to its (necessary) incompleteness, does acquire a particular character, a certain information content. Hence, the world we can talk about, or refer to—the world of our models—appears to us as filled with information—as embodied in distinctions between its different parts, between the objects in the world. Creation is then not something that has occurred at some point in the immeasurably distant past, but occurs whenever the attempt is made to encompass the world’s inexhaustibility within a model.

The objective world arises before System 2 via the expulsion of the subject. ‘Nothing’ is split into subject and object, and by removing the subject, the objective world attains a nonzero information content—it decomposes into dogs, stars, cars, trees, lobsters, and octopodes: the named (modeled) is the mother of ten thousand things.

In this sense, as Russell K. Standish makes the point in Theory of Nothing, ‘something’ is the ‘inside view’ of ‘nothing’. We are books in Borges’ Library, able to read anything but themselves—and as a result, the library seems filled with information to us.