David G. Stork in The MIT Press Reader:

Last month at the San Francisco Museum of Modern Art I saw “2001: A Space Odyssey” on the big screen for my 47th time. The fact that this masterpiece remains on nearly every relevant list of “top ten films” and is shown and discussed over a half-century after its 1968 release is a testament to the cultural achievement of its director Stanley Kubrick, writer Arthur C. Clarke, and their team of expert filmmakers.

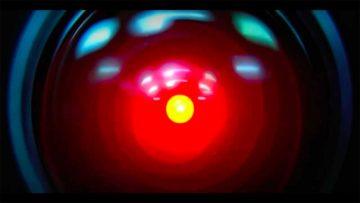

As with each viewing, I discovered or appreciated new details. But three iconic scenes — HAL’s silent murder of astronaut Frank Poole in the vacuum of outer space, HAL’s silent medical murder of the three hibernating crewmen, and the poignant sorrowful “death” of HAL — prompted deeper reflection, this time about the ethical conundrums of murder by a machine and of a machine. In the past few years experimental autonomous cars have led to the death of pedestrians and passengers alike. AI-powered bots, meanwhile, are infecting networks and influencing national elections. Elon Musk, Stephen Hawking, Sam Harris, and many other leading AI researchers have sounded the alarm: Unchecked, they say, AI may progress beyond our control and pose significant dangers to society.

And what about the converse: humans “killing” future computers by disconnection?

More here.