Gary Marcus and Ernest Davis in the New York Times:

Artificial intelligence has a trust problem. We are relying on A.I. more and more, but it hasn’t yet earned our confidence.

Tesla cars driving in Autopilot mode, for example, have a troubling history of crashing into stopped vehicles. Amazon’s facial recognition system works great much of the time, but when asked to compare the faces of all 535 members of Congress with 25,000 public arrest photos, it found 28 matches, when in reality there were none. A computer program designed to vet job applicants for Amazon was discovered to systematically discriminate against women. Every month new weaknesses in A.I. are uncovered.

The problem is not that today’s A.I. needs to get better at what it does. The problem is that today’s A.I. needs to try to do something completely different.

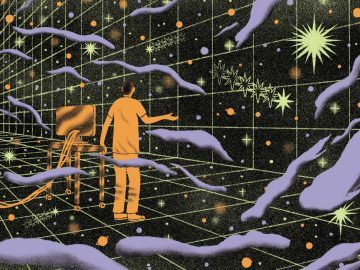

In particular, we need to stop building computer systems that merely get better and better at detecting statistical patterns in data sets — often using an approach known as deep learning — and start building computer systems that from the moment of their assembly innately grasp three basic concepts: time, space and causality.

More here.