Karen Hao in the MIT Technology Review:

David Duvenaud was collaborating on a project involving medical data when he ran up against a major shortcoming in AI.

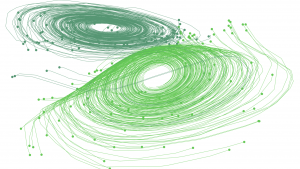

An AI researcher at the University of Toronto, he wanted to build a deep-learning model that would predict a patient’s health over time. But data from medical records is kind of messy: throughout your life, you might visit the doctor at different times for different reasons, generating a smattering of measurements at arbitrary intervals. A traditional neural network struggles to handle this. Its design requires it to learn from data with clear stages of observation. Thus it is a poor tool for modeling continuous processes, especially ones that are measured irregularly over time.

The challenge led Duvenaud and his collaborators at the university and the Vector Institute to redesign neural networks as we know them. Last week their paper was one of four crowned “best paper” at the Neural Information Processing Systems conference, one of the largest AI research gatherings in the world.

More here.