Noah Smith over at his website (via Crooked Timber):

Consider Proposition H: “God is watching out for me, and has a special purpose for me and me alone. Therefore, God will not let me die. No matter how dangerous a threat seems, it cannot possibly kill me, because God is looking out for me – and only me – at all times.”

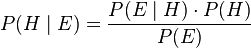

Suppose that you believe that there is a nonzero probability that H is true. And suppose you are a Bayesian – you update your beliefs according to Bayes' Rule. As you survive longer and longer – as more and more threats fail to kill you – your belief about the probability that H is true must increase and increase. It's just mechanical application of Bayes' Rule:

$$P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}$$

Here, E is “not being killed,” P(E|H)=1, and P(H) is assumed not to be zero. P(E) is less than 1, since under a number of alternative hypotheses you might get killed (if you have a philosophical problem with this due to the fact that anyone who observes any evidence must not be dead, just slightly tweak H so that it's possible to receive a “mortal wound”).

So P(H|E) is greater than P(H) – every moment that you fail to die increases your subjective probability that you are an invincible superman, the chosen of God. This is totally and completely rational, at least by the Bayesian definition of rationality.
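To make the update concrete, here is a minimal sketch of the repeated calculation. It assumes only two hypotheses, H and "ordinary mortal." The function names, the one-in-a-billion prior, and the 0.9999 per-period survival probability are illustrative assumptions, not figures from the post.

```python
def update_on_survival(prior_h: float, p_survive_if_mortal: float) -> float:
    """Return P(H|E) after one survived period, taking P(E|H) = 1."""
    # Law of total probability: P(E) = P(E|H) P(H) + P(E|not H) P(not H)
    p_e = 1.0 * prior_h + p_survive_if_mortal * (1.0 - prior_h)
    # Bayes' Rule: P(H|E) = P(E|H) P(H) / P(E)
    return 1.0 * prior_h / p_e


def belief_trajectory(prior_h: float, p_survive_if_mortal: float, periods: int) -> list:
    """Apply the update once per survived period and record each belief."""
    beliefs = [prior_h]
    for _ in range(periods):
        beliefs.append(update_on_survival(beliefs[-1], p_survive_if_mortal))
    return beliefs


# Hypothetical numbers: a tiny prior on H, and a mortal who survives
# any single period with probability 0.9999.
trajectory = belief_trajectory(prior_h=1e-9, p_survive_if_mortal=0.9999, periods=1000)
print(trajectory[0], trajectory[-1])
```

Under these assumed numbers the belief in H rises with every survived period, yet it stays tiny after a thousand of them.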

If you think this is weird, consider a closely related question: Suppose H was true, and you were an invincible God-protected superman. How would you know? Simple: you'd go get in a bunch of dangerous situations, and when you failed to die, you'd gradually realize the truth. The more dangerous the situations you chose, the faster the truth would be revealed – if you jumped in front of a train and failed to die, that would be stronger evidence than if you just drove to the store without a seat belt and made it back safely.
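The claim that riskier situations reveal the truth faster can be sketched with the Bayes factor. With P(E|H) = 1, surviving an event multiplies the odds of H against "mortal" by 1 / P(E|mortal). The survival probabilities below are made-up placeholders for the train and the seat-belt-free drive, not real risk estimates.

```python
import math


def log_odds_shift(p_survive_if_mortal: float) -> float:
    """Log Bayes factor for H vs. 'mortal' from one survived event, with P(E|H) = 1."""
    return math.log(1.0 / p_survive_if_mortal)


def posterior_odds(prior_odds: float, p_survive_if_mortal: float) -> float:
    """Odds of H after surviving one event: prior odds times the Bayes factor."""
    return prior_odds * (1.0 / p_survive_if_mortal)


# Hypothetical survival probabilities for an ordinary mortal.
train = log_odds_shift(0.01)      # jumping in front of a train
drive = log_odds_shift(0.999999)  # a short drive without a seat belt

print(train, drive)
```

The smaller a mortal's chance of surviving the event, the larger the shift in log-odds toward H when you do survive it.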

But nevertheless, every moment contains some probability of death for a non-superman. So every moment that passes, evidence piles up in support of the proposition that you are a Bayesian superman. The pile will probably never amount to very much, but it will always grow, until you die.

So does this mean that any true Bayesian will eventually accept invincibility as his working hypothesis? No. There are other hypotheses that explain your failure to die just as well as H does, and which are mutually exclusive with H. The ratio of the likelihood of H to the likelihood of these alternatives will not change as you continue to live longer and longer. But this gets into a philosophical thing that I've never quite understood about statistical inference, Bayesian or otherwise, which is the question of how to choose the set of hypotheses, when the set of possible hypotheses seems infinite.
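The point about rival hypotheses can be sketched the same way. Here `H_alt` is a hypothetical stand-in for any alternative that is mutually exclusive with H but also predicts survival with certainty. The priors and the mortal survival probability are assumptions chosen only for illustration.

```python
def posterior(priors: dict, likelihoods: dict) -> dict:
    """One Bayesian update over a finite set of mutually exclusive hypotheses."""
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    total = sum(unnormalized.values())  # this is P(E)
    return {h: w / total for h, w in unnormalized.items()}


# Hypothetical priors; "H_alt" explains survival exactly as well as H does.
priors = {"H": 1e-9, "H_alt": 1e-6, "mortal": 1.0 - 1e-9 - 1e-6}
survival_likelihood = {"H": 1.0, "H_alt": 1.0, "mortal": 0.9999}

beliefs = dict(priors)
for _ in range(1000):  # survive 1000 periods in a row
    beliefs = posterior(beliefs, survival_likelihood)

ratio_before = priors["H"] / priors["H_alt"]
ratio_after = beliefs["H"] / beliefs["H_alt"]
print(ratio_before, ratio_after)
```

Both H and `H_alt` gain ground against "mortal," but their ratio to each other stays at its prior value (up to floating-point rounding), because survival has the same likelihood under each.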

More here.