by John Allen Paulos

Every time I read or watch anything about the election I hear some variant of the phrase “margin of error.” My mathematically attuned ears perk up, but usually it’s just a slightly pretentious way of saying the election is very close or else that it’s not very close. Schmargin of error might be a better name for metaphorical uses of the phrase.

To be fair, the phrase is often supplemented with precise numbers (plus or minus 1.5%, for example) that purport to quantify exactly how tight the race is (or isn't). Unfortunately, these numbers are not as reliable as they might seem. The problem is that this precision depends on polling a random sample of voters, and the larger the sample, the better.

A few technical remarks on the meaning of the margin of error follow in the next few paragraphs, which can be skimmed or skipped.

The basic qualitative idea: If we imagine many random samples of voters being taken, the sample percentages supporting a candidate will vary from sample to sample, of course, but these sample percentages will naturally cluster around the true percentage, P, of voters supporting the candidate in the whole population.
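To make the clustering concrete, here is a small simulation sketch. It assumes a hypothetical electorate in which exactly 52% back candidate A, draws many independent random polls of 1,000 voters each, and summarizes how the poll results spread out. The seed, the 52% figure, and the helper name `sample_proportion` are illustrative choices, not anything from a real poll. The mean of the simulated polls should land near 0.52, and their spread near √(0.52 × 0.48 / 1000) ≈ 0.016.

```python
import random
import statistics

def sample_proportion(true_p, n, rng):
    """Poll n voters drawn at random; return the fraction supporting candidate A."""
    supporters = sum(1 for _ in range(n) if rng.random() < true_p)
    return supporters / n

rng = random.Random(42)   # fixed seed so the run is reproducible
TRUE_P = 0.52             # hypothetical true share of support for candidate A
N = 1000                  # hypothetical number of voters per poll

# Draw many independent polls and see how their results cluster around TRUE_P.
polls = [sample_proportion(TRUE_P, N, rng) for _ in range(2000)]
print(f"mean of sample proportions:    {statistics.mean(polls):.3f}")
print(f"std dev of sample proportions: {statistics.stdev(polls):.4f}")
```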

Importantly, if we're dealing with random samples of voters, this clustering of the sample percentages can be described quantitatively. In fact, if p is the proportion of voters supporting candidate A in a random sample (written as a decimal, so 52% becomes 0.52) and n is the number of voters in the sample, then we can get a good estimate of P, which is what we really want to know.

Specifically, the interval ranging from $p - 2\sqrt{p(1-p)/n}$ to $p + 2\sqrt{p(1-p)/n}$ will encompass P, the percentage of voters in the whole population supporting candidate A, about 95% of the time.
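A minimal sketch of that interval in code, assuming a hypothetical poll in which 520 of 1,000 randomly sampled voters back candidate A. The second half checks the "about 95% of the time" claim by simulating many polls from a known P (again a hypothetical 0.52) and counting how often each poll's interval actually contains it; the coverage should come out near 95%. The function name `interval_95` is illustrative only.

```python
import math
import random

def interval_95(p_hat, n):
    """Approximate 95% interval: p_hat ± 2*sqrt(p_hat*(1 - p_hat)/n)."""
    half_width = 2 * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width

# One hypothetical poll: 520 of 1,000 voters back candidate A.
low, high = interval_95(0.52, 1000)
print(f"{low:.3f} to {high:.3f}")

# Coverage check: simulate many polls from a known true share and count
# how often the interval built from each poll contains that true share.
rng = random.Random(7)                  # fixed seed for reproducibility
TRUE_P, N, TRIALS = 0.52, 1000, 2000    # hypothetical values
hits = 0
for _ in range(TRIALS):
    p_hat = sum(rng.random() < TRUE_P for _ in range(N)) / N
    lo, hi = interval_95(p_hat, N)
    hits += lo <= TRUE_P <= hi          # True counts as 1, False as 0
print(f"coverage: {hits / TRIALS:.3f}")
```

For the single poll the interval runs from about 0.488 to 0.552, i.e., 52% plus or minus roughly 3.2 points.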

Half the width of the interval above, namely $2\sqrt{p(1-p)/n}$, which will vary a bit depending on p in the particular sample taken, is the margin of error. Since n appears in the denominator, the larger the sample, the narrower the interval encompassing P.
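Because n sits under a square root, the payoff from larger samples diminishes quickly: quadrupling the sample only halves the margin of error. The sketch below assumes a sample proportion of 0.5, the value that makes p(1 − p) largest and hence gives the widest margin; the sample sizes are arbitrary illustrations and `margin_of_error` is a hypothetical helper name.

```python
import math

def margin_of_error(p_hat, n):
    """Half-width of the approximate 95% interval: 2*sqrt(p_hat*(1 - p_hat)/n)."""
    return 2 * math.sqrt(p_hat * (1 - p_hat) / n)

# Each sample is four times the previous one; the margin only halves each time.
for n in (100, 400, 1600):
    print(n, f"±{100 * margin_of_error(0.5, n):.1f}%")
```

This prints ±10.0% for 100 voters, ±5.0% for 400, and ±2.5% for 1,600.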

If we are concerned not only with a good estimate of a candidate's support but also with the ranking of two candidates, A and B (i.e., who's leading and by how much), then the margin of error for the difference between them is generally about twice as wide, since the estimates of both candidates' support will vary.
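One way to see the "about twice as wide" rule is a sketch that treats both shares as coming from the same random sample, so they tend to move in opposite directions. For a single respondent, the variance of (backs A) − (backs B) is p_A + p_B − (p_A − p_B)², which gives the margin for the lead below. The poll numbers (A at 48%, B at 46%, 1,000 voters) and function names are hypothetical.

```python
import math

def moe_single(p, n):
    """Approximate 95% margin of error for one candidate's share."""
    return 2 * math.sqrt(p * (1 - p) / n)

def moe_lead(p_a, p_b, n):
    """Approximate 95% margin of error for the lead p_a - p_b, assuming both
    shares come from the same simple random sample (multinomial variance)."""
    variance = (p_a + p_b - (p_a - p_b) ** 2) / n
    return 2 * math.sqrt(variance)

# Hypothetical poll of 1,000 voters: A at 48%, B at 46%.
n, p_a, p_b = 1000, 0.48, 0.46
print(f"margin for A alone:  ±{100 * moe_single(p_a, n):.1f} points")
print(f"margin for the lead: ±{100 * moe_lead(p_a, p_b, n):.1f} points")
```

The single-candidate margin comes out near ±3.2 points while the margin on the lead is near ±6.1, so A's 2-point lead in this hypothetical poll says little about who is actually ahead.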

Enough of these details. Since a random sample is needed to make the formal statistics work, the question is, how do we get at least a reasonable approximation of one? It’s not as easy as taking a sip (i.e., a random sample) from a bowl of tomato soup and concluding from it whether or not we will like the whole bowl.

Happily, the voting population cannot be puréed, and drawing conclusions from approximately random samples is nowhere near as simple as it is for soup. One salient reason is that it's become increasingly common for people not to respond to pollsters' calls, and there are, after all, probably significant differences between those who answer their phones and those who don't. How do we estimate these differences and adjust for their effect? Moreover, even when people do respond, many will lie about their preferences or even about whether they're likely to vote.
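The trouble with nonresponse is that the textbook formula cannot see it. The simulation sketch below assumes a hypothetical electorate split exactly 50/50, in which A's supporters answer the phone 10% of the time and B's supporters 8% of the time (both rates invented for illustration). On average, respondents then favor A by 0.05/0.09 ≈ 0.556, and enlarging the sample only shrinks the reported margin around that wrong value.

```python
import math
import random

rng = random.Random(1)                 # fixed seed for reproducibility
TRUE_P = 0.50                          # hypothetical true support for A
ANSWER_RATE = {"A": 0.10, "B": 0.08}   # hypothetical: A's backers pick up slightly more often

def poll_with_nonresponse(n_respondents):
    """Keep dialing random voters until n_respondents answer; return A's share among them."""
    answered_for_a = answered = 0
    while answered < n_respondents:
        choice = "A" if rng.random() < TRUE_P else "B"
        if rng.random() < ANSWER_RATE[choice]:
            answered += 1
            answered_for_a += choice == "A"
    return answered_for_a / answered

for n in (1000, 10000):
    p_hat = poll_with_nonresponse(n)
    moe = 2 * math.sqrt(p_hat * (1 - p_hat) / n)
    print(f"n={n}: estimate {p_hat:.3f} ± {moe:.3f}   (true value {TRUE_P})")
```

With 10,000 respondents the interval is narrow and confidently excludes the true 50%: more data makes a biased sample look more precise, not more accurate.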

A further difficulty is that the demographic and educational composition of a region may change from one election to the next. People move into and out of neighborhoods, counties, and states, and as they do, the relative proportions favoring the two candidates will change as well. Topical news events and long-term developments will obviously affect different demographic groups quite differently. How do we account for these imponderables?

Another factor is that different pollsters may word their questions about voters' preferences differently (and sometimes tendentiously). They probably shouldn't ask voters, "Are you ignorant or apathetic or both?" It invites the response, "I don't know, and I don't care."

So how do pollsters deal with these and other issues? Some of the ways they try:

They can look at past voting records for the state or region to make up for gaps in their polling. This assumes a static population or at least an estimate of how it has changed.

They can try to account for voters’ different response rates to telephone queries, which also requires an estimate of the difference.

They can look at sub-samples of various demographic groups (ethnic groups, older voters, college graduates, and so on) and weight them accordingly, though perhaps arbitrarily.
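As a rough illustration of such weighting, the sketch below uses a common technique known as post-stratification. It is not necessarily what any particular pollster does. Each age group's support rate is counted according to that group's assumed share of the electorate rather than its share of the sample. All counts and population shares are hypothetical, and `weighted_estimate` is an illustrative name. The point is how much the answer depends on the assumed mix.

```python
# Hypothetical raw poll: (respondents, supporters of candidate A) in each age group.
sample = {
    "under 45": (300, 165),     # 30% of respondents; 55% of them back A
    "45 and over": (700, 329),  # 70% of respondents; 47% of them back A
}

def weighted_estimate(sample, population_share):
    """Post-stratification: weight each group's support rate by its assumed
    share of the electorate instead of its share of the sample."""
    return sum(
        population_share[group] * supporters / respondents
        for group, (respondents, supporters) in sample.items()
    )

respondents_total = sum(r for r, _ in sample.values())
raw_estimate = sum(s for _, s in sample.values()) / respondents_total
print(f"unweighted: {raw_estimate:.3f}")

# Two hypothetical guesses about the age mix of the people who will actually vote.
for share_young in (0.45, 0.35):
    shares = {"under 45": share_young, "45 and over": 1 - share_young}
    print(f"under-45 share {share_young:.0%}: weighted {weighted_estimate(sample, shares):.3f}")
```

The unweighted figure is 0.494. Weighting with a 45% under-45 share gives 0.506, while a 35% share gives 0.498, so the same interviews put A ahead or behind depending on a guess about turnout.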

They can pose scrupulously neutral questions (a task a bit harder in these polarized times) and they can, of course, try to discern trends (or, in a few cases, rely on congenial narratives as betting markets do).

Whatever pollsters do to bolster their claims, a stubborn and embarrassing little secret remains: there is intrinsically and inevitably a lot of guesswork involved in polling.

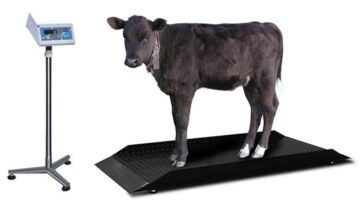

The math may be impeccable for truly random samples, but if the underlying assumptions aren't met and/or the workarounds are dubious, then we have a situation that reminds me of a story I once related in a book of mine. The apocryphal tale concerns the way cows were weighed in the Old West. First the cowboys would find a long, thick plank and place the middle of it on a large, high rock. Then they'd attach the cow to one end of the plank with ropes and tie a large boulder to the other end. They'd carefully measure the distance from the cow to the rock and from the boulder to the rock. If the plank didn't balance, they'd try another big boulder and measure again.

They'd keep this up until a boulder exactly balanced the cow. After solving the resulting equation that expresses the cow's weight in terms of the distances and the weight of the boulder, there would be only one thing left for them to do: They would have to guess the weight of the boulder.

Once again, the math may be exact, but the judgments, guesses, and estimates supporting its applications are anything but exact. In short, there is a lot of guessing about the weight of the boulders that go into the headline “Breaking News: plus or minus 1.5%.”

***

John Allen Paulos is an emeritus Professor of Mathematics at Temple University and the author of Innumeracy and A Mathematician Reads the Newspaper.

Enjoying the content on 3QD? Help keep us going by donating now.